In this NetApp training tutorial, I will discuss the NetApp ONTAP clustered hardware architecture. In the last post, we covered the scalability limitations of legacy 7-Mode. The main problems are that a system can have a maximum of two controllers, and that it does not support active-active load balancing for the same data set. Scroll down for the video and text tutorial.

NetApp ONTAP Clustered Hardware Architecture – Video Tutorial


It's much easier to understand the clustered hardware architecture of Clustered ONTAP and ONTAP 9 if you see the 7-Mode architecture first. Have a read of my post covering 7-Mode architecture here: https://www.flackbox.com/netapp-7-mode-hardware-architecture

NetApp realised the scalability issues of 7-Mode and wanted an operating system that overcame them. They had a choice of how they were going to do this. They could either develop a new OS from scratch, upgrade 7-Mode, or acquire another company that already had the capability. They went for the last option, because upgrading 7-Mode would have required a complete rewrite of the operating system. NetApp gained the clustered operating system capability with the acquisition of Spinnaker Networks in 2003.

NetApp ONTAP Hardware Architecture

Clustered ONTAP (renamed to just ‘ONTAP’ from version 9) is very similar to 7-Mode with one key difference that we'll get to in a minute.

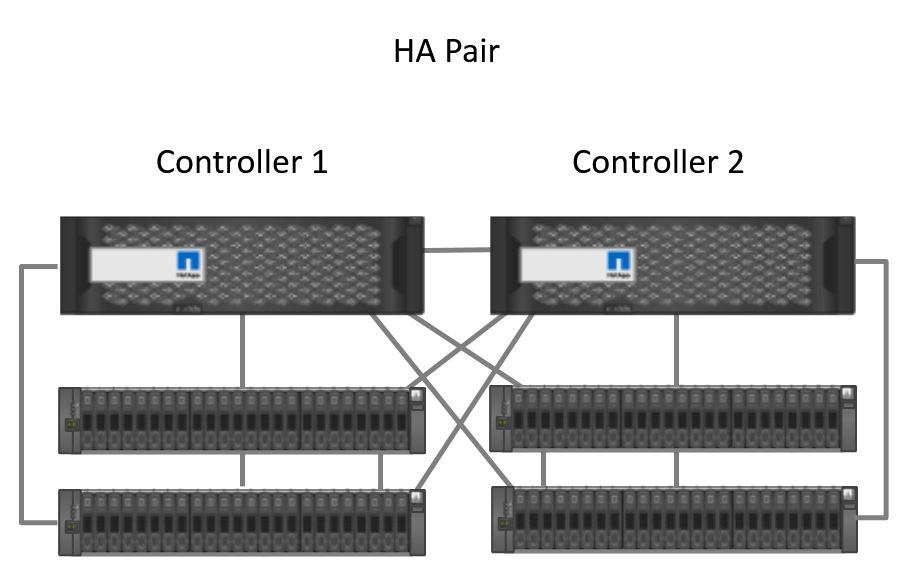

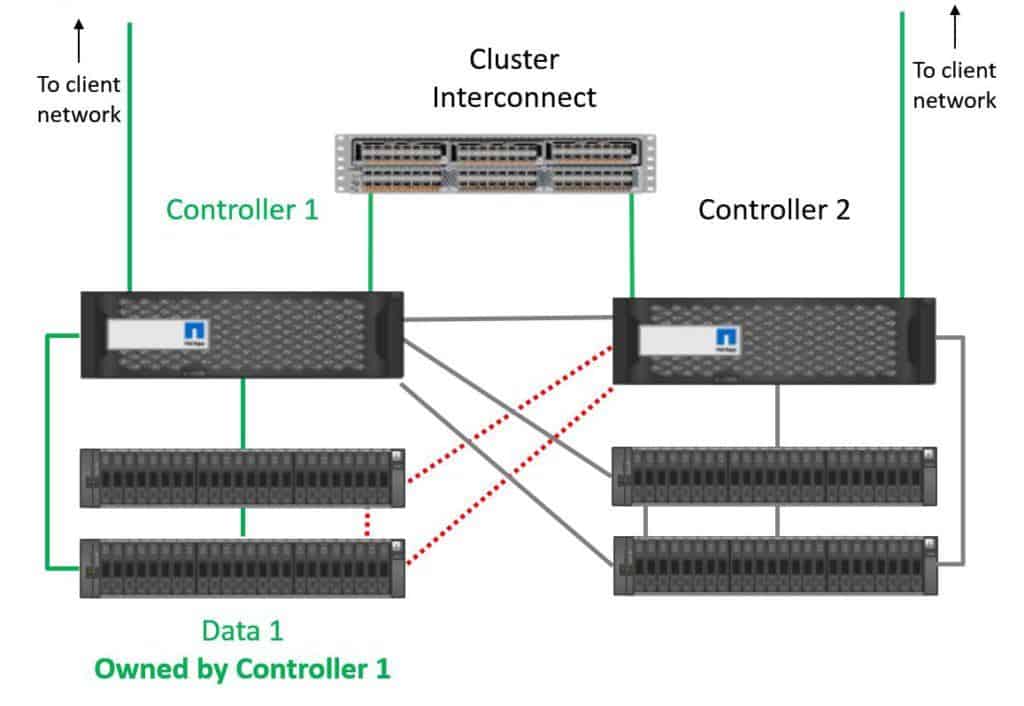

Clustered ONTAP also consists of controllers that are paired together in HA pairs. You can see in the diagram below we've got Controller 1 and Controller 2, which are configured as an HA pair in exactly the same way as in a 7-Mode system.

Clustered High Availability Pair

Controller 1 is connected to its shelves with SAS cables, Controller 2 is connected to its shelves, and they're also connected to each other's shelves for High Availability.

They have an HA connection between them for NVRAM mirroring and so they can detect if the other controller goes down. That part of the architecture is exactly the same as in 7-Mode and High Availability works in the same way too.

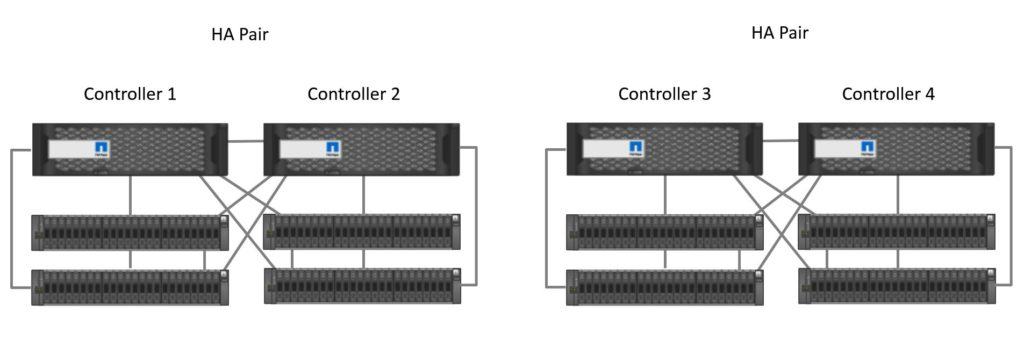

Now we get to the difference between 7-Mode and Clustered ONTAP: we can add additional controllers to the same clustered system. Clustered ONTAP can scale up to 24 nodes if you're only using NAS protocols (CIFS / NFS), and up to 8 nodes if you’re using SAN protocols (Fibre Channel / iSCSI / FCoE) or a combination of SAN and NAS.

In the diagram we add Controller 3 and Controller 4 to the cluster. They are also configured as a High Availability pair and cabled the same way to each other’s disk shelves.

ONTAP Cluster with 4 Nodes

We don't connect every single controller to every other controller's disk shelves, because that would require an unfeasible amount of cabling and ports on our controllers and disk shelves. Instead, we have our controllers connected up in HA pairs the same way as in 7-Mode: Controller 1 with Controller 2, Controller 3 with Controller 4, and so on.
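To make the cabling argument concrete, here is a minimal Python sketch. It assumes a simplified model in which each controller has one stack of shelves and we count one connection per controller-to-stack link. The function names `ha_pairs` and `shelf_connection_counts` are hypothetical, for illustration only.

```python
def ha_pairs(nodes):
    """Group an ordered list of node names into HA pairs: (1, 2), (3, 4), ..."""
    if len(nodes) % 2 != 0:
        raise ValueError("this sketch expects an even number of nodes")
    return [(nodes[i], nodes[i + 1]) for i in range(0, len(nodes), 2)]


def shelf_connection_counts(node_count):
    """Return (ha_pair_cabling, full_mesh_cabling) under the simplified model.

    HA-pair cabling: each controller reaches its own shelves and its partner's.
    Full mesh: each controller would reach every controller's shelves.
    """
    return 2 * node_count, node_count * node_count


cluster = ["Controller 1", "Controller 2", "Controller 3", "Controller 4"]
print(ha_pairs(cluster))
print(shelf_connection_counts(4))   # 4-node cluster
print(shelf_connection_counts(24))  # 24-node cluster
```

Under these assumptions the HA-pair approach grows linearly with the number of nodes, while a full mesh grows with the square of it, which is why it quickly becomes impractical.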

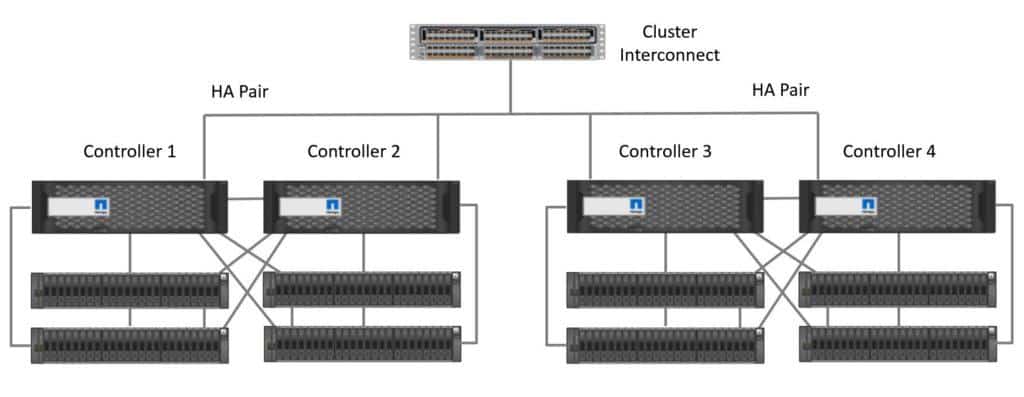

The nodes are all part of the same cluster so they need to be able to communicate with each other. This is enabled by the cluster interconnect, shown in the diagram below.

Cluster Interconnect Network

The cluster interconnect is the main hardware architecture difference between 7-Mode and Clustered ONTAP. It consists of a pair of redundant 10Gb Ethernet switches. Each node in the cluster is connected to both switches. The cluster network switches are dedicated to connectivity between the nodes, so we can't also use them for client data access or management traffic.
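The redundancy of this design can be sketched in a few lines of Python. This is only an illustration of the topology described above: the switch names are made up, and each node is assumed to have exactly one link to each switch.

```python
# Hypothetical switch names, used only for this illustration.
SWITCHES = ("cluster-sw-A", "cluster-sw-B")


def interconnect_links(nodes, switches=SWITCHES):
    """Every node gets one link to every cluster switch."""
    return [(node, switch) for node in nodes for switch in switches]


def nodes_still_connected(links, failed_switch):
    """Nodes that keep at least one cluster link after a switch failure."""
    return {node for node, switch in links if switch != failed_switch}


nodes = ["Controller 1", "Controller 2", "Controller 3", "Controller 4"]
links = interconnect_links(nodes)
print(len(links))  # two links per node
print(nodes_still_connected(links, "cluster-sw-A") == set(nodes))
```

Because every node is cabled to both switches, losing either switch still leaves every node with a path to every other node.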

Client Data Access

Disks are owned by one and only ever one controller. This is the same in 7-Mode and Clustered ONTAP.

In the diagram below, Data Set 1 is owned by Controller 1. Clients can access the data over a port on Controller 1. The difference between Clustered ONTAP and 7-Mode is that clients can also access the same data set through any other controller in the cluster. If the client accesses the data through a network port on a controller other than the one that owns the disks, the traffic will go over the cluster interconnect.
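The two access paths can be sketched as follows. This is a simplified model rather than how ONTAP is implemented internally, and the `DataSet` class and `access_path` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSet:
    name: str
    owner: str  # the one controller that owns the disks


def access_path(data_set, entry_node):
    """Return the hops a client request takes from the entry node to the disks."""
    if entry_node == data_set.owner:
        # Direct access: the client came in on the owning controller.
        return [entry_node, "SAS", "disks"]
    # Indirect access: traffic is forwarded to the owner over the interconnect.
    return [entry_node, "cluster interconnect", data_set.owner, "SAS", "disks"]


data_set_1 = DataSet(name="Data Set 1", owner="Controller 1")
print(access_path(data_set_1, "Controller 1"))
print(access_path(data_set_1, "Controller 3"))
```

In both cases the last hop is the SAS connection on the owning controller. Only the client-facing side differs.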

Active-Active Load Balancing

This gives us active-active load balancing for the same data set through our controllers. Note, however, that this is on the client network side only. We still only use the SAS cables on the controller which owns the disks.

7-Mode only supports active-standby redundancy for the same data set. Client access always goes through the controller which owns the disks.

Whenever nodes are communicating directly with each other, the traffic goes over the cluster interconnect. As well as client access through a node other than the one that owns the disks, this could be our administrative settings being replicated between all nodes, or administrative moves of data between controllers.

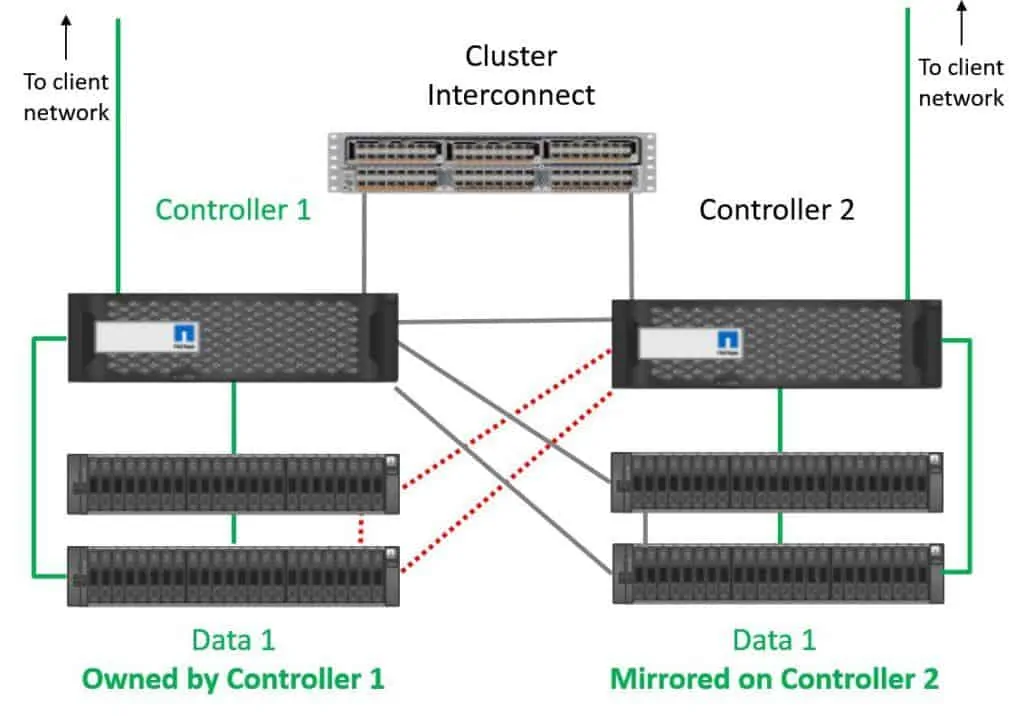

Another option we have for client access is to mirror the data throughout the different controllers in our cluster. That means the data can be accessed directly on any of the controllers without having to go over the cluster interconnect.
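As a rough sketch of the effect of mirroring, the snippet below picks which node serves a request. It assumes a simplified model where a node holding a mirror copy can serve the request locally. The function name `serving_node` is hypothetical.

```python
def serving_node(entry_node, owner, mirror_nodes):
    """Return (node_that_serves_the_data, crosses_interconnect)."""
    if entry_node == owner or entry_node in mirror_nodes:
        return entry_node, False  # a local copy exists, no interconnect hop
    return owner, True  # no local copy, forward to the owning controller


# Data owned by Controller 1 and mirrored to Controller 3 only.
mirrors = {"Controller 3"}
print(serving_node("Controller 3", "Controller 1", mirrors))
print(serving_node("Controller 4", "Controller 1", mirrors))
```

In this example a request arriving on Controller 3 is served from its local mirror, while a request arriving on Controller 4 still has to cross the cluster interconnect.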

Mirrored Data

Clustered ONTAP Scalability Enhancements

Capacity Scaling: The cluster supports up to 24 nodes if you're using NAS protocols only, up to 8 nodes if you’re using SAN or a combination of both.

Nodes, disks and shelves can be added non-disruptively, so you can start small and grow bigger over time. We could start with a single node or a 2-node cluster and then upgrade to 4 nodes, 6 nodes etc. Nodes are configured in HA pairs so, apart from a single node cluster, there must be an even number of nodes.

The controllers in an HA pair have to be the same model, but you can have different model controllers in the same cluster. For example, Controller 1 and Controller 2 could be one model and Controller 3 and Controller 4 could be a different model.
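The sizing rules above can be collected into a small check. This is a sketch only: the limits are the ones quoted in this post, the model names are placeholders, and `validate_cluster` is a hypothetical helper rather than an ONTAP tool.

```python
MAX_NODES_NAS_ONLY = 24  # limit quoted in this post for NAS-only clusters
MAX_NODES_WITH_SAN = 8   # limit quoted in this post for SAN or mixed clusters


def validate_cluster(models, uses_san):
    """Check a planned cluster against the rules described in the text.

    models: controller models listed in HA-pair order,
            e.g. ["model-X", "model-X", "model-Y", "model-Y"].
    Returns a list of problems (empty if the plan looks valid).
    """
    problems = []
    count = len(models)
    limit = MAX_NODES_WITH_SAN if uses_san else MAX_NODES_NAS_ONLY

    if count == 0:
        problems.append("a cluster needs at least one node")
    if count > limit:
        problems.append(f"{count} nodes exceeds the limit of {limit}")
    if count > 1 and count % 2 != 0:
        problems.append("multi-node clusters need an even number of nodes")

    # Controllers in the same HA pair must be the same model.
    for i in range(0, count - 1, 2):
        if models[i] != models[i + 1]:
            problems.append(f"HA pair at positions {i + 1} and {i + 2} mixes models")
    return problems


print(validate_cluster(["model-X", "model-X", "model-Y", "model-Y"], uses_san=False))
print(validate_cluster(["model-X"] * 10, uses_san=True))
```

The first plan mixes models across HA pairs but not within them, so it passes. The second is rejected because ten nodes is above the SAN limit quoted here.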

Performance Scaling: Each node added to a cluster adds its CPU, memory and throughput to the system, so performance scales linearly as the cluster grows.

Operational Scaling: A cluster is managed as a single system. This reduces complexity and operational costs. If we wanted to have 24 nodes with 7-Mode, we would have 12 different systems to manage.

Multi-Tenancy: Clustered ONTAP supports multi-tenancy through the use of Storage Virtual Machines (SVMs), which were called Vservers in older versions of Clustered ONTAP. 7-Mode also supports multi-tenancy through the use of vFilers, but Clustered ONTAP was designed to support multi-tenancy from the start, and the implementation is cleaner, with support for SVM-level administrators.

Data Migration: Data can be moved non-disruptively between all nodes within the cluster over the cluster interconnect. You can easily move data to rebalance it more evenly over the cluster or to move it to higher or lower performance disks.

More Info

ONTAP Data Management Software Datasheet

Clustered ONTAP for 7-Mode Administrators

The Zerowait blog is updated with great content on current NetApp platforms
