In this NetApp training tutorial, I will explain Flash Cache, which is one of NetApp’s Virtual Storage Tiering technologies. Flash Pool, the other VST technology, is covered in the next post in this series. Scroll down for the video and the text tutorial.

Flash Cache – NetApp VST (Virtual Storage Tier) Video Tutorial


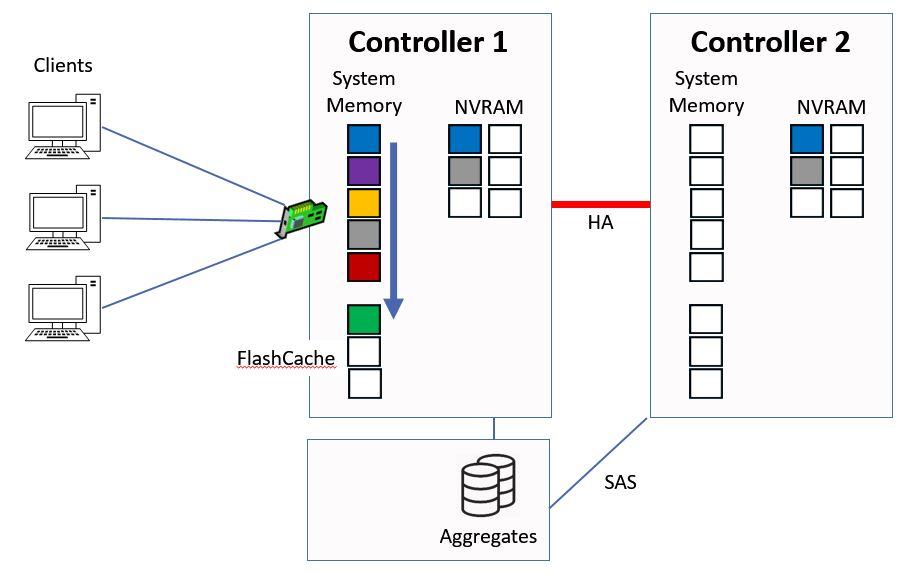

This post follows on from the previous video, which detailed how reads and writes are handled by WAFL, NVRAM and the System Memory Cache.

VST Virtual Storage Tier caching

Typically, as data gets older it is accessed less often, so it doesn't need to sit on such high-performance storage. Storage Tiering and Hierarchical Storage Management are concerned with placing data on an appropriate ‘tier’ of storage, such as high-performance SSD drives for our frequently accessed ‘hot’ data, or lower-performance SATA drives for our older data. Storage tiering uses algorithms to classify data and place it on the appropriate storage tier automatically.
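To make the idea of automatic classification concrete, here is a minimal Python sketch of an age-based tiering rule. The `choose_tier` function, the 30-day `HOT_WINDOW` and the two tier names are hypothetical; real tiering software uses its own, more sophisticated heuristics.

```python
from datetime import datetime, timedelta

# Hypothetical threshold: data read within the last 30 days counts as 'hot'.
HOT_WINDOW = timedelta(days=30)


def choose_tier(last_access: datetime, now: datetime) -> str:
    """Place a block on a tier according to how recently it was accessed."""
    if now - last_access <= HOT_WINDOW:
        return "ssd"   # frequently accessed 'hot' data
    return "sata"      # older data that doesn't need high performance


now = datetime(2024, 1, 31)
print(choose_tier(datetime(2024, 1, 30), now))  # ssd
print(choose_tier(datetime(2023, 6, 1), now))   # sata
```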

NetApp don't have any traditional storage tiering software natively in ONTAP, but they do have the Virtual Storage Tiering technologies of Flash Cache and Flash Pool. These are both caching technologies which keep the data that will benefit most from high performance on flash storage. When data ages out of the cache, it is served from spinning HDD storage again.

Flash Cache Walkthrough

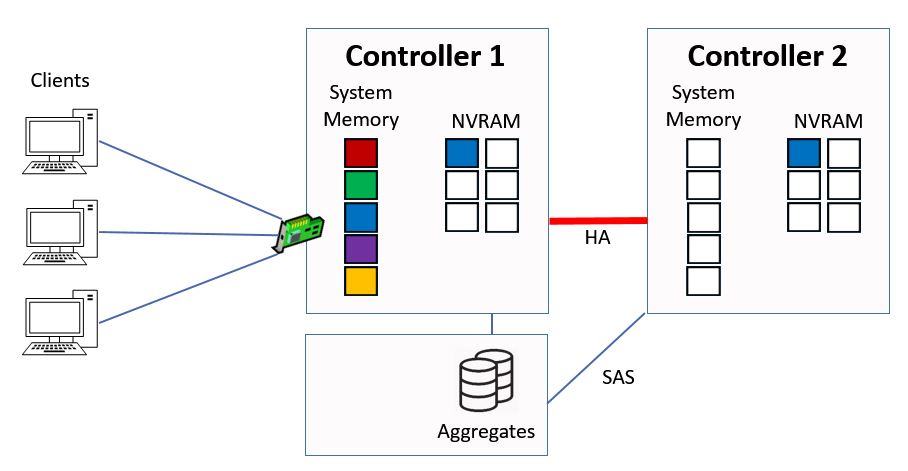

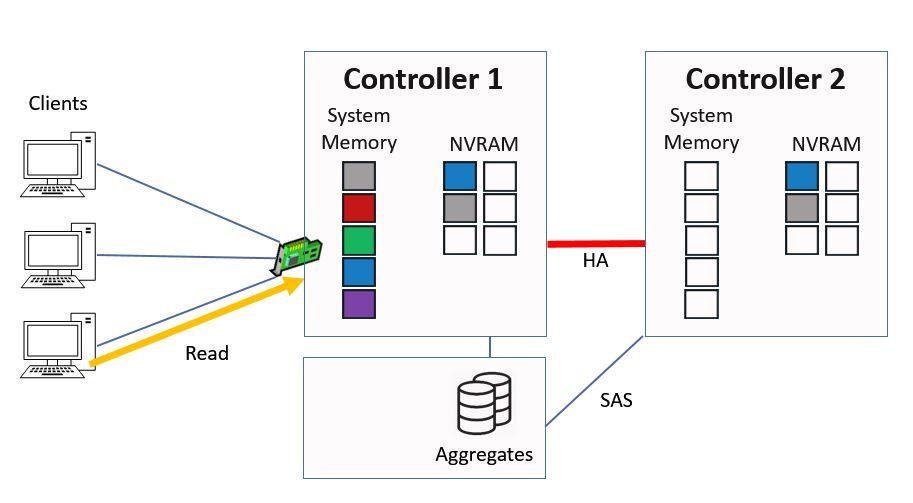

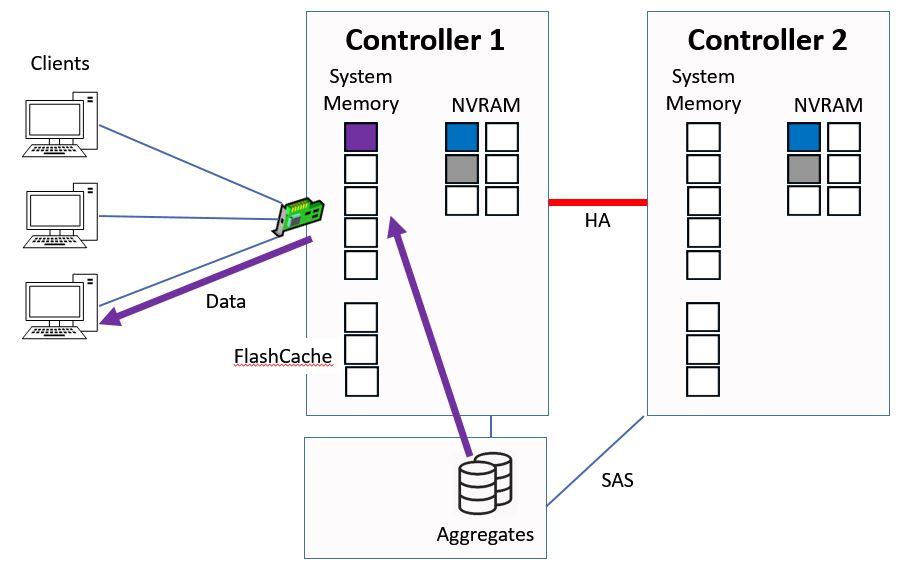

Let's take a look and see how Flash Cache works. This is where we left off at the end of our last lesson:

Where we left off

Notice that the system memory cache is full. Next, another write request comes in, this time for grey data.

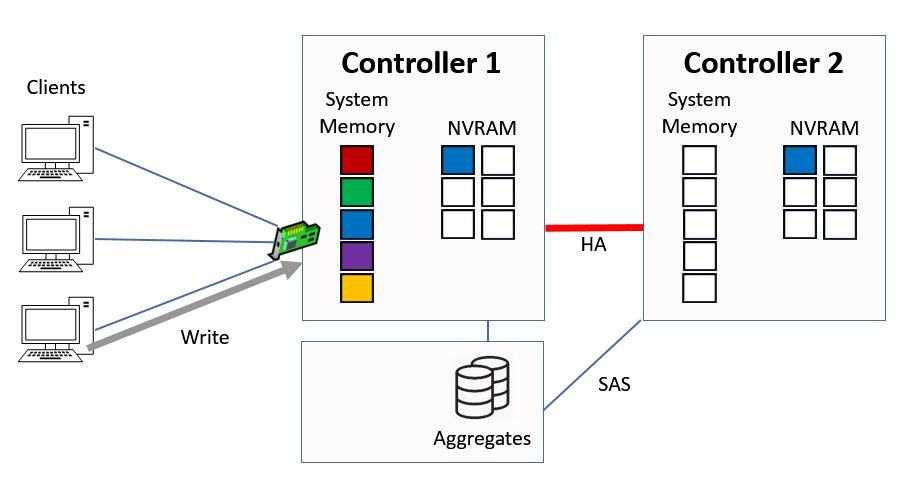

Client Sends Write Request

As usual, it gets written to system memory on Controller 1, and to NVRAM on both Controller 1 and its high availability peer of Controller 2.

Written to System Memory and NVRAM
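The write path just described can be modelled in a few lines of Python. This is a simplified sketch, not ONTAP code: the `Controller` class and `handle_write` function are hypothetical, and acknowledging the client only after both NVRAM copies exist is an assumption of the model.

```python
from dataclasses import dataclass, field


@dataclass
class Controller:
    name: str
    system_memory: list = field(default_factory=list)  # index 0 is the top slot
    nvram: list = field(default_factory=list)          # log of incoming writes


def handle_write(owner: Controller, partner: Controller, data: str) -> str:
    """Cache the write locally, log it to both NVRAMs, then acknowledge it."""
    owner.system_memory.insert(0, data)  # goes into the top slot on Controller 1
    owner.nvram.append(data)             # logged in local NVRAM
    partner.nvram.append(data)           # mirrored to the HA peer's NVRAM
    return "ack"                         # assumption: ack only after both logs


c1, c2 = Controller("Controller 1"), Controller("Controller 2")
print(handle_write(c1, c2, "grey"))      # ack
print(c1.system_memory, c1.nvram, c2.nvram)
# ['grey'] ['grey'] ['grey']
print(c2.system_memory)                  # [] (the partner only logs the write)
```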

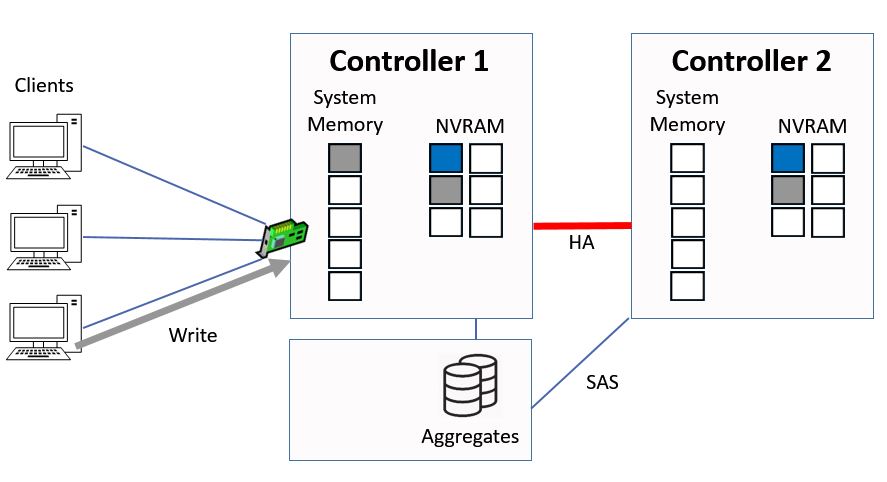

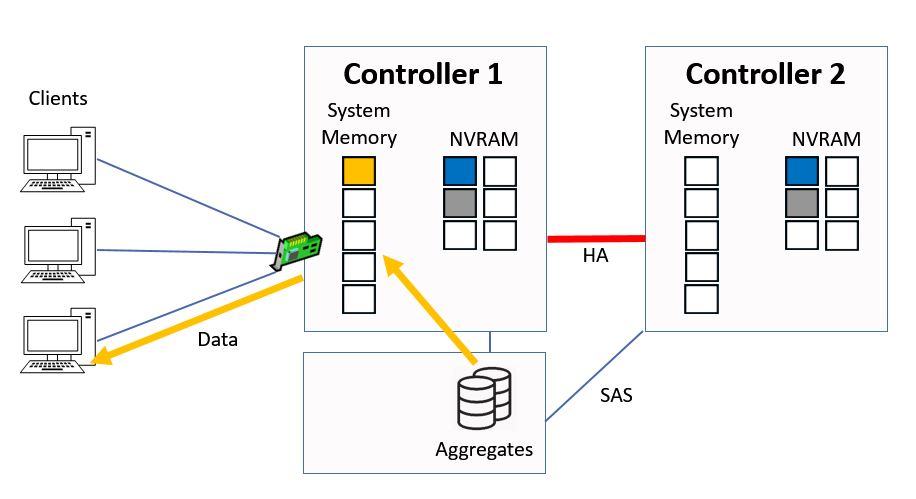

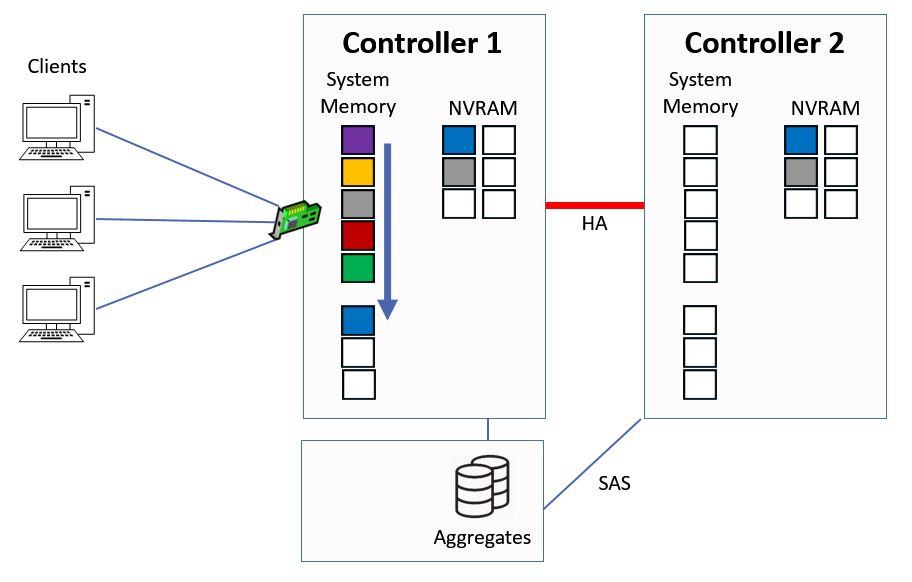

The contents of system memory all get bumped down a slot. Because the system memory was already full, the yellow data that was on the bottom gets bumped out of the cache.

Data is Evicted from Cache

A client then sends in a read request for that yellow data.

Client Sends Read Request

Because it's not in the cache any more, the system has to fetch it from disk.

Controller 1 Serves Data From Disk

The data will then go into the top slot in system memory and everything else gets bumped down a slot again. Now we lose the purple data from our cache.

Data is Cached in System Memory
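The eviction behaviour in the walkthrough so far can be reproduced with a small Python model of the system memory cache. The `MemoryCache` class, the `read` helper and the three-slot size are hypothetical, chosen only so the colours line up with the diagrams; this is a teaching model, not how ONTAP manages its buffer cache internally.

```python
from __future__ import annotations


class MemoryCache:
    """Fixed number of slots; new data enters the top and pushes the rest down."""

    def __init__(self, slots: int, contents: list[str]):
        self.slots = slots
        self.contents = list(contents)  # index 0 is the top slot

    def insert(self, data: str) -> str | None:
        """Put data in the top slot and return whatever falls off the bottom."""
        self.contents.insert(0, data)
        if len(self.contents) > self.slots:
            return self.contents.pop()  # evicted from the cache entirely
        return None


def read(cache: MemoryCache, data: str) -> str:
    """Return where a read was served from: 'memory' or 'disk'."""
    if data in cache.contents:
        return "memory"
    cache.insert(data)  # a miss is fetched from disk, then cached
    return "disk"


# Hypothetical 3-slot cache, listed top to bottom.
cache = MemoryCache(3, ["blue", "purple", "yellow"])
print(cache.insert("grey"))   # yellow (the grey write pushes yellow out)
print(read(cache, "yellow"))  # disk
print(cache.contents)         # ['yellow', 'grey', 'blue'] (purple is lost)
```

A read that hits system memory leaves the order unchanged in this sketch, since the walkthrough doesn't show that case.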

If data is in the cache, it's going to get back to the client much quicker than if we have to fetch it from disk, so wouldn't it be great if we had more cache in our system? That's where Flash Cache comes in.
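The benefit of extra cache can be put into numbers with a weighted average: each tier's latency multiplied by the fraction of reads it serves. The latency and hit-ratio figures below are purely illustrative assumptions, not NetApp measurements, and `average_read_latency` is a hypothetical helper.

```python
from __future__ import annotations


def average_read_latency(hit_ratios: dict[str, float], latency_ms: dict[str, float]) -> float:
    """Weighted average of per-tier latency; the hit ratios must sum to 1."""
    assert abs(sum(hit_ratios.values()) - 1.0) < 1e-9
    return sum(hit_ratios[tier] * latency_ms[tier] for tier in hit_ratios)


# Purely illustrative latencies in milliseconds.
latency = {"memory": 0.1, "flash_cache": 1.0, "disk": 10.0}

without_fc = average_read_latency({"memory": 0.3, "disk": 0.7}, latency)
with_fc = average_read_latency({"memory": 0.3, "flash_cache": 0.5, "disk": 0.2}, latency)
print(round(without_fc, 2))  # 7.03
print(round(with_fc, 2))     # 2.53
```

With these made-up numbers, moving half of all reads from disk to a flash tier cuts the average read latency by almost two thirds, because the disk term dominates the sum.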

Flash Cache 2 has evolved from the original Performance Acceleration Module (PAM) and Flash Cache 1 cards. It's a PCI Express card that fits into a spare expansion slot in your controller chassis. It currently comes in sizes of 512GB, 1TB, or 2TB.

Flash Cache 2

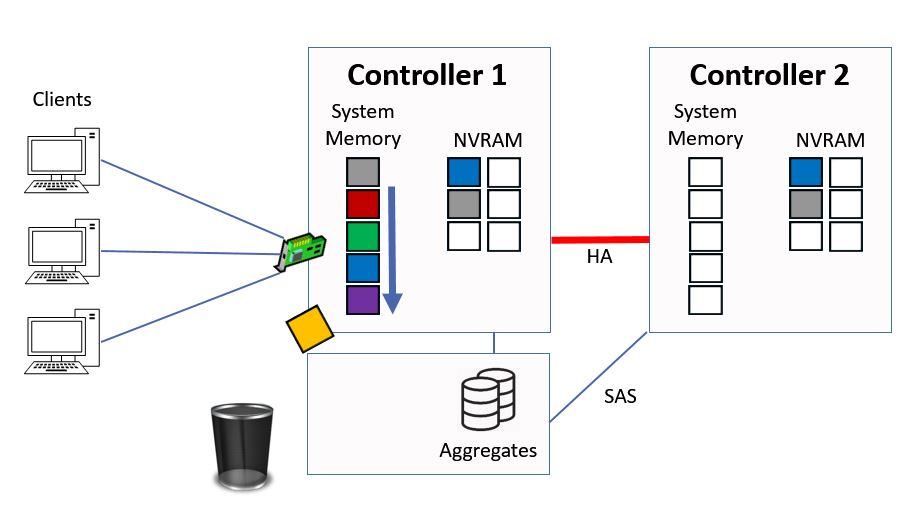

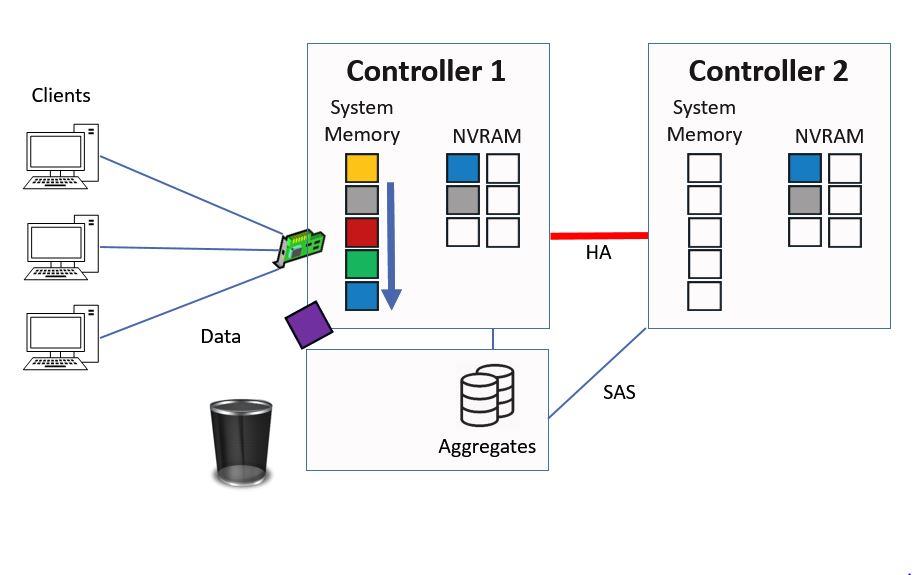

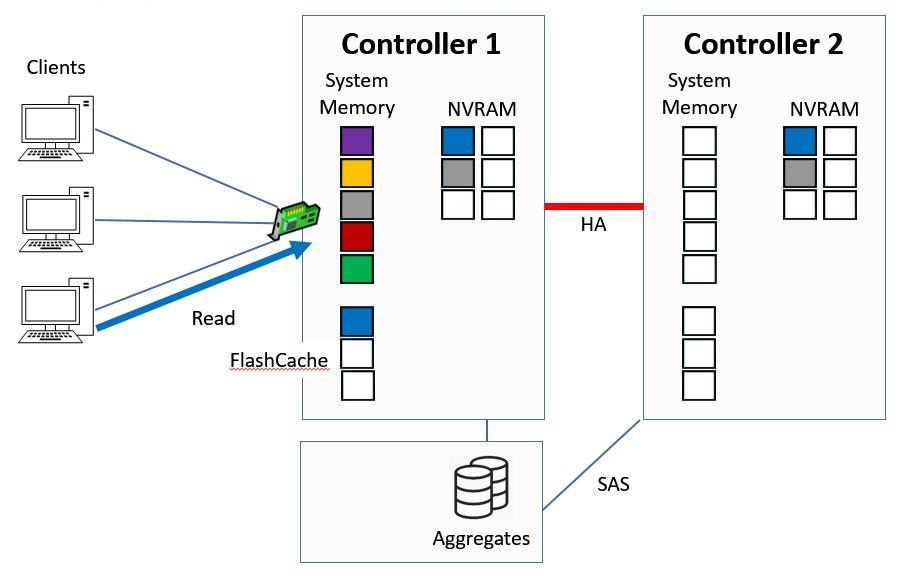

Back to our example again, we’ve now installed Flash Cache in our controller and a client sends in a read request for purple data.

Client Sends Read Request

The system will fetch the data from disk because it wasn't in the cache. It goes into the top slot in system memory and gets sent to the client.

Controller 1 Serves Data From Disk

All the existing data in the memory cache gets bumped down a slot, but now the blue data in the bottom slot gets bumped down into Flash Cache rather than being lost from the cache.

Data is Cached

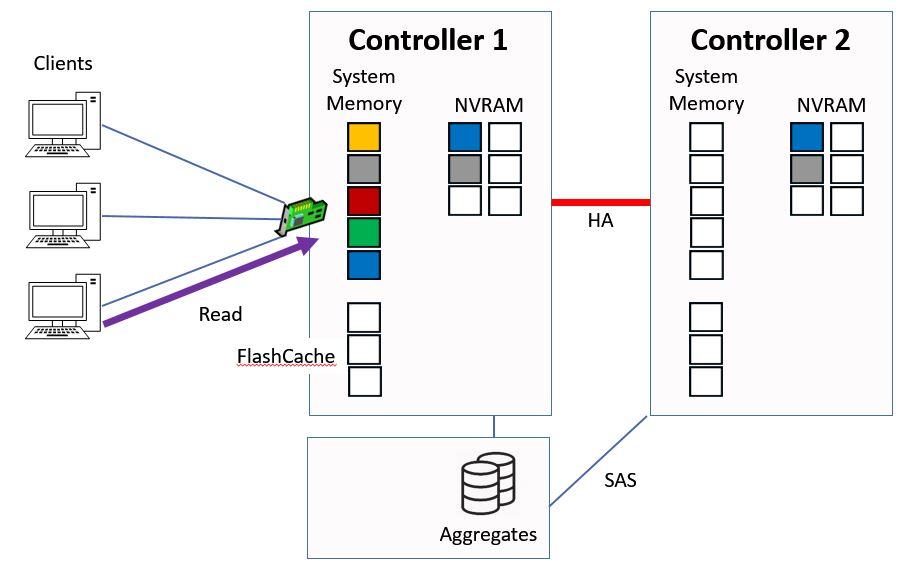

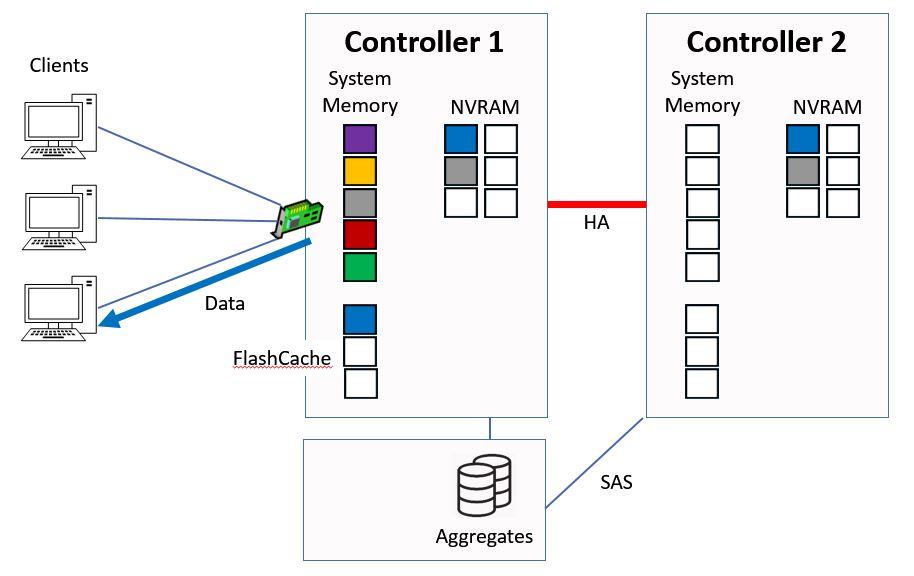

Another client request comes in for the blue data.

Client Sends Read Request

It can be fetched from the fast Flash Cache rather than from disk.

Controller 1 Serves Data From Memory

The blue data goes into the top slot in memory as usual, just as if we'd fetched it from disk, and everything else gets bumped down a slot in the cache.

Data is Cached

Flash Cache simply expands the amount of cache memory available on a controller. The memory cache uses a simple first in, first out ‘revolving door’ algorithm: any data that has been marked to be cached goes into the top slot, and everything else gets bumped down a slot.

System memory is the fastest and always gets used first. As data gets bumped out of system memory, it gets bumped into Flash Cache.
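Putting the pieces together, here is a hedged Python sketch of system memory backed by Flash Cache, replaying the purple and blue reads from the walkthrough. The `TwoTierCache` class and the slot counts are hypothetical, and removing a block from Flash Cache when it is promoted back into memory is an assumption of the sketch; the walkthrough doesn't say whether a copy stays behind.

```python
class TwoTierCache:
    """System memory backed by Flash Cache (a teaching sketch, not ONTAP code)."""

    def __init__(self, memory_slots, flash_slots, memory=()):
        self.memory_slots = memory_slots
        self.flash_slots = flash_slots
        self.memory = list(memory)  # index 0 is the top slot
        self.flash = []             # index 0 is the newest Flash Cache entry

    def _cache(self, data):
        """Insert into the top memory slot; spill the bottom slot into Flash Cache."""
        self.memory.insert(0, data)
        if len(self.memory) > self.memory_slots:
            self.flash.insert(0, self.memory.pop())
            if len(self.flash) > self.flash_slots:
                self.flash.pop()  # only now is the data lost from the cache

    def read(self, data):
        """Look in system memory first, then Flash Cache, then disk."""
        if data in self.memory:
            return "memory"
        if data in self.flash:
            self.flash.remove(data)  # assumption: promoted, not duplicated
            self._cache(data)
            return "flash_cache"
        self._cache(data)
        return "disk"


c = TwoTierCache(3, 4, ["yellow", "grey", "blue"])
print(c.read("purple"), c.memory, c.flash)
# disk ['purple', 'yellow', 'grey'] ['blue']
print(c.read("blue"), c.memory, c.flash)
# flash_cache ['blue', 'purple', 'yellow'] ['grey']
```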

Flash Cache Characteristics

When a read request is received by the system, it looks for the data in system memory first, then Flash Cache, and then disk.

Flash Cache is plug and play. No configuration is needed: we just fit the PCI Express expansion card into a spare slot on the controller.

Flash Cache improves performance for random reads. It doesn’t improve write performance: it is a read cache, and its contents are not treated as persistent, so they can be lost in an unplanned outage. Writes need to be saved to permanent storage such as SSD or HDD to ensure we don’t get data loss. (ONTAP WAFL is already optimized for write performance, as explained in the previous post.)

Sequential reads tend to be large and already get comparatively good performance from HDDs. We don't want to waste the space available in Flash Cache on data which won't get a large performance benefit, so Flash Cache is optimized to improve random read performance.
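The reasoning above amounts to an admission policy for Flash Cache. The sketch below is hypothetical: the `Block` type, its `origin` tags and `admit_to_flash_cache` are illustrative names, and it only shows the idea of letting random reads in while keeping writes and sequential reads out, not how ONTAP actually implements the decision.

```python
from dataclasses import dataclass


@dataclass
class Block:
    name: str
    origin: str  # hypothetical tags: "random_read", "sequential_read" or "write"


def admit_to_flash_cache(block: Block) -> bool:
    """Hypothetical filter: only randomly read blocks are worth caching."""
    return block.origin == "random_read"


evicted = [
    Block("blue", "random_read"),
    Block("grey", "write"),                     # write performance is handled by NVRAM and WAFL
    Block("backup-stream", "sequential_read"),  # HDDs already serve these well
]
print([b.name for b in evicted if admit_to_flash_cache(b)])  # ['blue']
```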

Flash Cache is a controller-level cache and improves performance for all aggregates on a controller. If there’s a graceful shutdown or failover, the cache is saved in a snapshot and reapplied when the controller comes back online. If there is an unplanned outage, however, the cache will be lost.
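The shutdown behaviour can be summarised as a tiny state model. `FlashCacheModel` and its methods are hypothetical; the sketch keys cached blocks by aggregate to reflect that one controller-level cache serves every aggregate, and it models ‘saved and reapplied’ as a plain copy, which glosses over how ONTAP actually preserves the cache.

```python
from __future__ import annotations


class FlashCacheModel:
    """One controller-level cache shared by every aggregate on the controller."""

    def __init__(self):
        self.blocks: dict[tuple[str, int], bytes] = {}  # (aggregate, block number)
        self.saved: dict[tuple[str, int], bytes] | None = None

    def shutdown(self, graceful: bool) -> None:
        # Graceful shutdown or failover: keep a copy so it can be reapplied.
        self.saved = dict(self.blocks) if graceful else None
        self.blocks = {}

    def boot(self) -> None:
        # Reapply the saved contents if there are any; otherwise start cold.
        self.blocks = self.saved or {}
        self.saved = None


fc = FlashCacheModel()
fc.blocks[("aggr1", 42)] = b"blue"
fc.blocks[("aggr2", 7)] = b"purple"
fc.shutdown(graceful=True)
fc.boot()
print(len(fc.blocks))  # 2
fc.shutdown(graceful=False)
fc.boot()
print(len(fc.blocks))  # 0
```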

Part 1 of this series, where I covered how reads and writes are handled by the system, is available here: WAFL, NVRAM and the System Memory Cache

Join me for the final Part 3 here: Flash Pool – NetApp VST Virtual Storage Tier

Additional Resources

Flash Cache Best Practice Guide
