In this NetApp training tutorial, you'll learn about RAID groups and aggregates, how they relate to each other, and how best to configure them.


RAID Groups

Your disks are grouped into RAID groups, and then the RAID groups are assigned to aggregates. The RAID group configuration is an attribute of the aggregate. In System Manager, you'll see a page for your disks and you'll see a page for your aggregates, but there is not a separate page for RAID groups.

The RAID group configuration is found on the aggregate page because it's an attribute of your aggregates. It also specifies how many data drives you have for capacity and how many parity drives you have for redundancy. The RAID groups can be RAID 4, RAID-DP, or RAID-TEC in ONTAP.

RAID Types – RAID 4

RAID 4 uses a single parity drive, so it survives a single drive failure. If two drives fail in the same RAID group, you lose the data in it and have to restore from a backup.

With RAID 4, the minimum size is two disks for aggr0. For normal data aggregates, the minimum size is four disks. RAID 4 is not commonly used.

It's really there for backward compatibility. When ONTAP was first available, it only supported RAID 4, and RAID-DP came out later. NetApp needed to support the existing RAID 4 aggregates on systems that were being upgraded to later versions, so RAID 4 is still supported.

You will sometimes still see RAID 4 being used in smaller systems where it's necessary to maximize the available capacity and you don't want to have too many parity drives taking up space.

RAID Types – RAID-DP

The next type is RAID-DP which stands for dual parity. Dual parity means that there are two parity drives and because there are two parity drives, it will survive two drive failures.

With RAID-DP, the minimum size is three disks for aggr0 and five disks for your normal data aggregates. RAID-DP is the default type if the disk size is less than 6 TB.

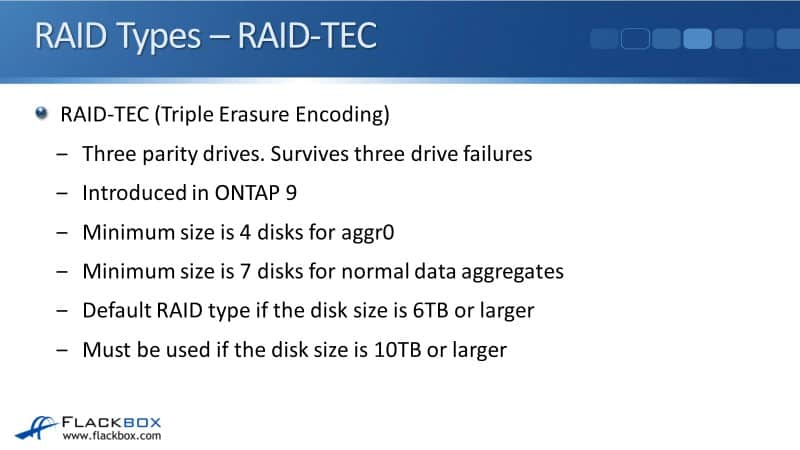

RAID Types – RAID-TEC

Finally, the last type is RAID-TEC. TEC stands for Triple Erasure Coding. RAID 4 has one parity drive, RAID-DP has two parity drives, and RAID-TEC has three parity drives, so it survives three drive failures. RAID-TEC was introduced in ONTAP 9.

With RAID-TEC, the minimum size is four disks for aggr0. And for your normal data aggregates, the minimum size is seven disks. RAID-TEC is the default RAID type if the disk size is 6 TB or larger, and it must be used if the disk size is 10 TB or larger.

The reason is that when a larger drive fails, it takes longer to recalculate its contents from parity and bring the spare drive into the RAID group. In other words, it takes longer to recover from a failed drive.

That would mean that it's more likely that another drive will fail during that time. It's just the laws of probability. The longer something is down for, the more likely it is that another drive will go down at the same time as well.

Since there's a higher probability of having multiple drive failures when we've got larger drives, we would want to have more redundancy there. That's why we go for that extra parity drive with RAID-TEC.
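
The three RAID types described above can be summarized in a small lookup table. This is only a sketch of the rules as stated in this tutorial (parity counts, minimum disk counts, and the 6 TB and 10 TB thresholds). The names `RaidType`, `default_raid_type`, and `raid_tec_required` are made up for illustration and are not part of ONTAP.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RaidType:
    name: str
    parity_disks: int    # drive failures the RAID group survives
    min_disks_root: int  # minimum size for aggr0, as stated in the text
    min_disks_data: int  # minimum size for a normal data aggregate


# Values copied from the RAID 4, RAID-DP and RAID-TEC sections above.
RAID_TYPES = {
    "raid4": RaidType("raid4", 1, 2, 4),
    "raid_dp": RaidType("raid_dp", 2, 3, 5),
    "raid_tec": RaidType("raid_tec", 3, 4, 7),
}


def default_raid_type(disk_size_tb: float) -> str:
    """Default type per the text: RAID-DP below 6 TB, RAID-TEC at 6 TB or larger."""
    return "raid_tec" if disk_size_tb >= 6 else "raid_dp"


def raid_tec_required(disk_size_tb: float) -> bool:
    """Per the text, RAID-TEC must be used for disks of 10 TB or larger."""
    return disk_size_tb >= 10


if __name__ == "__main__":
    for size_tb in (4, 8, 12):
        rt = RAID_TYPES[default_raid_type(size_tb)]
        print(size_tb, "TB ->", rt.name, "with", rt.parity_disks, "parity disks,",
              "mandatory" if raid_tec_required(size_tb) else "default only")
```

The thresholds are hard-coded from this article, so check them against the documentation for your ONTAP version before relying on them.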

Converting RAID Types

You can non-disruptively convert aggregates between the different RAID types, although this is not commonly done. Normally, when you're setting things up in the first place, you need to know what RAID type you want to use. You set that, and it doesn't change.

One reason to convert RAID types is if you have aggregates with disks larger than 4 TB and want to move them from RAID-DP to RAID-TEC following an upgrade to ONTAP 9.

RAID-TEC did not exist back in ONTAP 8. NetApp introduced it with ONTAP 9 because larger drives were becoming available, which created the need for it.

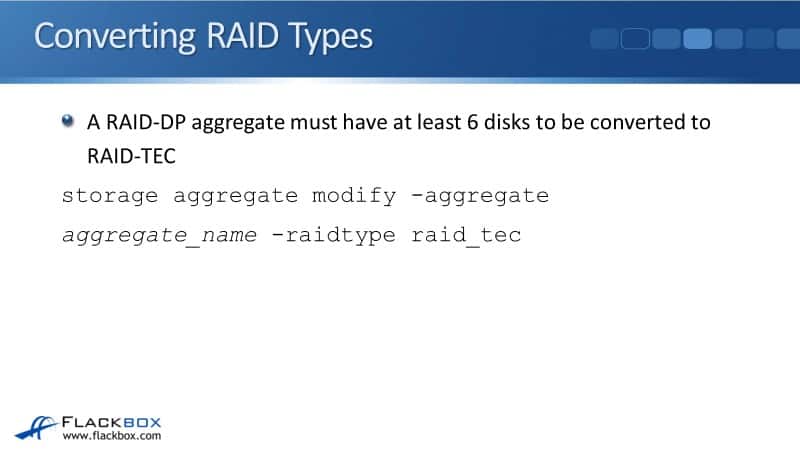

A RAID-DP aggregate must have at least six disks to be converted to RAID-TEC. The command to do it is:

storage aggregate modify -aggregate aggregate_name -raidtype raid_tec

RAID Groups

The disks in a RAID group must be the same type. All the disks in the same RAID group are going to be all SAS or SATA or SSD. They should also be the same size and speed.

The size and speed are not enforced. However, you should enforce them yourself, because if you have drives of differing size or speed in the same RAID group, they will all operate at the size or speed of the smallest or slowest drive.

For example, say you have a RAID group made up of 1 TB drives, and it also has a 500 GB drive in there. Then you only get 500 GB of capacity from each of the drives.

You're going to be wasting a load of space so you don't want to do that. You want to make sure that they are all one terabyte, so that way you get the full capacity used.

This is the same with speed as well. If you've got one drive in the RAID group operating at a lower speed, they're all going to run at that lower speed. So in your RAID group, you want your disks to be the same type, the same size, and the same speed. You also want to have spare drives available in the system as well.
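
To put numbers on the mixed-size example, here is a minimal sketch that follows the simplified rule from the text: every drive in a RAID group counts as the size of the smallest drive. The function name `raid_group_capacity_gb` is hypothetical, and the model ignores any other overhead ONTAP applies.

```python
def raid_group_capacity_gb(disk_sizes_gb, parity_disks):
    """Return (usable_gb, stranded_gb) for one RAID group.

    Simplified model from the text: every disk is treated as if it were
    the size of the smallest disk in the group.
    """
    if len(disk_sizes_gb) <= parity_disks:
        raise ValueError("need more disks than parity disks")
    effective = min(disk_sizes_gb)                  # all disks act like the smallest
    data_disks = len(disk_sizes_gb) - parity_disks  # the rest hold parity
    usable = effective * data_disks
    stranded = sum(disk_sizes_gb) - effective * len(disk_sizes_gb)
    return usable, stranded


if __name__ == "__main__":
    # Five-disk RAID-DP group: four 1 TB drives plus one 500 GB drive.
    print(raid_group_capacity_gb([1000, 1000, 1000, 1000, 500], parity_disks=2))
    # Same group with matching 1 TB drives.
    print(raid_group_capacity_gb([1000] * 5, parity_disks=2))
```

With the single 500 GB drive in the group, 2000 GB of raw space is stranded and usable capacity halves from 3000 GB to 1500 GB.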

Spare Disks

Spare drives are not assigned to a particular aggregate because that would be inefficient. Your spare drives are at the cluster level, and they're able to replace a failed drive anywhere in the cluster.

So if you've got a drive that fails in aggr1, and a drive that fails in aggr2, then the spare drives are pooled for the entire cluster as a whole. Both aggr1 and aggr2 have got the same access to those spare drives.

Spare drives are used to replace a failed drive when you have a drive failure. The other reason to have spare drives is so that you can create a new aggregate. If a drive fails, the system automatically replaces it with a spare disk of the same type, size, and speed, and the data is rebuilt from RAID parity.

There will be a performance degradation until the rebuild is complete because the system has to do extra work to reconstruct the data from the parity information. You should have at least two spare disks for each type, size, and speed of disk in the system.
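
The two-spares guideline is easy to check against a disk inventory. The sketch below assumes a hypothetical inventory format of `(disk_type, size_gb, rpm, is_spare)` tuples. It is not the output format of any ONTAP command, and `spare_shortfalls` is an illustrative name.

```python
from collections import Counter


def spare_shortfalls(disks, min_spares=2):
    """Return {(type, size_gb, rpm): missing_spares} for under-provisioned classes.

    disks is an iterable of (disk_type, size_gb, rpm, is_spare) tuples.
    A "class" is one combination of type, size and speed that is in use.
    """
    in_use = set()
    spares = Counter()
    for disk_type, size_gb, rpm, is_spare in disks:
        key = (disk_type, size_gb, rpm)
        if is_spare:
            spares[key] += 1
        else:
            in_use.add(key)
    # Report only classes that are in use and have too few matching spares.
    return {key: min_spares - spares[key]
            for key in in_use if spares[key] < min_spares}


if __name__ == "__main__":
    inventory = (
        [("SAS", 1000, 10000, False)] * 5
        + [("SAS", 1000, 10000, True)] * 2
        + [("SSD", 500, None, False)] * 4
        + [("SSD", 500, None, True)] * 1
    )
    print(spare_shortfalls(inventory))
```

In this made-up inventory the SAS drives have their two spares, while the SSD class is one spare short.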

Aggregates

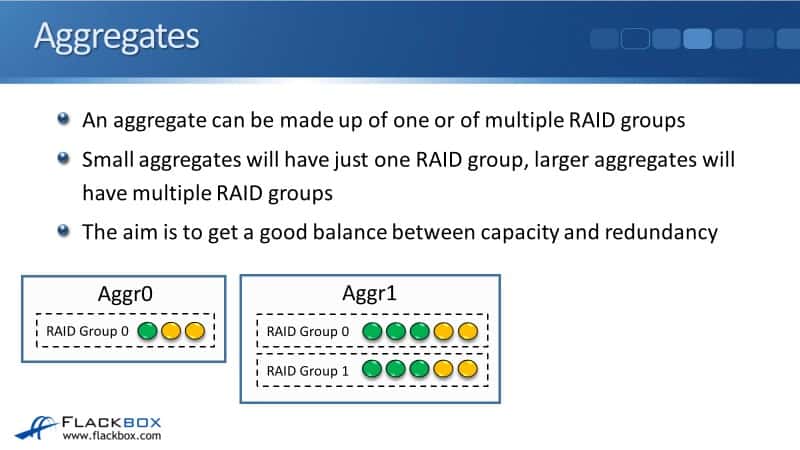

An aggregate can be made up of one or multiple RAID groups. If you've got a small aggregate, then it's going to have just one RAID group in there. You wouldn't need to have multiple groups. Larger aggregates will have multiple RAID groups.

The aim is to get a good balance between capacity and redundancy in your aggregates. Looking at the diagram here, you can see that we've got Aggr0, which has just three disks. The yellow drives are parity drives and the green one is a data drive. It uses RAID-DP and is made up of just one RAID group.

Then we've got Aggr1, which is made up of two different RAID groups. In the diagram, there are only five disks in each RAID group. However, in real life, you'd have more than five disks.

Maybe we would have 16 drives in each of the RAID groups. If you have a large aggregate with lots of drives in it, it is going to be made up of multiple RAID groups so that you have enough parity drives for redundancy.

So, you could have an aggregate made up of a single RAID-DP group of 16 disks. With RAID-DP, you've got two parity drives. That would give you 14 data drives for capacity and two parity drives for redundancy, which is a good balance between capacity and redundancy.

For instance, the total raw capacity would be 16 terabytes when using one terabyte drives, but only 14 terabytes would be usable because two of our drives are being used for parity. But we do want to have those parity drives there to give us redundancy in case we have a drive failure.

The more drives there are, the higher the chance of multiple failures at the same time. That's just the laws of probability. We wouldn't want an aggregate with 48 drives to have just a single RAID group because that would give us 46 data drives and two parity drives. There's too much chance of having multiple drive failures.

So as the number of data drives grows, you want the number of parity drives to grow with it, and you get that by splitting the disks across multiple RAID groups.
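
To make the "laws of probability" point concrete, here is a rough sketch using a textbook model that is not from the original text: drives fail independently at a constant rate, so the chance that at least one of the remaining drives fails during a rebuild window is 1 - exp(-n * t / MTBF). The MTBF and rebuild times below are hypothetical round numbers, not NetApp figures, and real failures are often correlated.

```python
import math


def p_additional_failure(other_drives, rebuild_hours, mtbf_hours):
    """Probability that at least one of the other drives fails during the rebuild.

    Assumes independent drives with exponentially distributed lifetimes
    (constant failure rate of 1 / mtbf_hours per drive).
    """
    return 1.0 - math.exp(-other_drives * rebuild_hours / mtbf_hours)


if __name__ == "__main__":
    mtbf = 1_000_000  # hypothetical MTBF in hours
    for drives, hours in [(15, 10), (15, 40), (47, 10), (47, 40)]:
        p = p_additional_failure(drives, hours, mtbf)
        print(f"{drives} other drives, {hours} h rebuild: {p:.5%}")
```

Under these assumptions, the probability rises both with the rebuild time (larger drives) and with the number of drives sharing the same parity, which is the reasoning behind RAID-TEC and behind splitting large aggregates into several RAID groups.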

For example, if we had 48 drives, we wouldn't want them in just one RAID group, since that would give us 46 data drives and only two parity drives, which is not enough redundancy. It would be better to use three RAID groups of 16 disks each. That would give us 42 data drives and six parity drives, which means that we're giving up some capacity.

Using 1 TB disks as an example, one RAID group would have 46 data drives. That would give us 46 TB of usable capacity, with 2 TB used for parity.

However, there is still not enough redundancy there, so what we should do is split those 48 drives into three RAID groups. We want the RAID groups to be of equal size, so we would use 16 disks in each. There are two parity drives in each of those RAID groups. That gives us 42 data drives and thus 42 terabytes of usable capacity.

So it went down from the 46 we had before but now we've got six parity drives. This would also mean that we've got more safety against multiple failures now which gives us a good balance between the capacity and the redundancy.
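
The capacity versus redundancy trade-off above is simple arithmetic, sketched below. `aggregate_layout` is a hypothetical helper, it assumes equal-size RAID groups as recommended in the text, and the capacities are raw figures before any ONTAP overhead.

```python
def aggregate_layout(total_disks, raid_groups, parity_per_group=2):
    """Return (data_disks, parity_disks) for equal-size RAID groups.

    parity_per_group defaults to 2, which is RAID-DP.
    """
    if raid_groups < 1 or total_disks % raid_groups != 0:
        raise ValueError("disks must divide evenly into the RAID groups")
    if total_disks // raid_groups <= parity_per_group:
        raise ValueError("each RAID group needs at least one data disk")
    parity = raid_groups * parity_per_group
    return total_disks - parity, parity


if __name__ == "__main__":
    disk_tb = 1  # 1 TB disks, as in the example above
    for groups in (1, 3):
        data, parity = aggregate_layout(48, groups)
        print(f"{groups} RAID group(s): {data} data + {parity} parity "
              f"= {data * disk_tb} TB usable")
```

For 48 disks this gives 46 data and 2 parity drives with one RAID group, and 42 data and 6 parity drives with three RAID groups, matching the figures in the text.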

We also need to consider performance when we are creating our aggregates. The more disks we have in an aggregate, the better the performance, because we can read from and write to multiple drives at the same time. Sometimes it is recommended to add more drives than are necessary to hold the data.

For instance, we've got 5 TB worth of data. Maybe we wouldn't make the aggregate just 5 TB in size. Maybe we would make it 10 TB in size, knowing that we're actually never going to use that other 5 TB.

The reason why we're doing it is to get more disks into the aggregate so we can read and write to them at the same time, which would give us better performance. So it depends on the particular application and the particular workload that you're running. It can be a reason to make the aggregate larger than what you actually need to get better performance.

Active/Standby Systems

The next thing to talk about is active standby systems. On smaller entry-level two-node systems, something that's sometimes done is to assign all disks to a particular node so you can make one large aggregate.

Let's say we've got an entry-level platform with 24 internal drives. By default, you would have two data aggregates there, and we'll assume the disks are split into 12 and 12.

Using RAID-DP, since we've got two aggregates, we would end up with four parity drives being used, giving us 20 usable disks. You could get higher capacity utilization by just using one aggregate.

A simpler example would be an entry-level system with 12 disks. Rather than splitting the 12 disks into two aggregates of six disks each and having to have four parity drives, you could just have one larger aggregate with the 12 drives. Now you've only got two parity drives rather than four, so that gives you more usable capacity.

The system will be acting as active/standby if you do that, because you've got one aggregate and an aggregate is always owned by one and only one node. Let's assume that it's owned by node 1. Node 1 will be active while node 2 is on standby.

You're not going to get performance that is as good, because you are only using one of your nodes rather than both. You will be able to access the aggregate through network interfaces on either node, but the client read and write requests over the SAS connection will only be processed by one node.

Growing and Shrinking Aggregates

Lastly, you can grow aggregates on the fly, but you cannot shrink them. Say you have an aggregate that is currently in use, with volumes on it that clients are reading and writing data to. You can add disks to that aggregate, and it will be completely non-disruptive and transparent to your clients.

Although you cannot shrink an aggregate on the fly, if you do want to make one smaller, you can still do it non-disruptively.

What you would need to do with all the volumes that were on the aggregate is to move them to a different aggregate. You would then destroy the original aggregate and recreate a new one with a smaller size made up of fewer disks.

RAID Groups and Aggregates on NetApp ONTAP Configuration Example

This configuration example is an excerpt from my ‘NetApp ONTAP 9 Complete’ course. Full configuration examples using both the CLI and System Manager GUI are available in the course.


Disks and Aggregates

1. View only the SSD disks in the cluster. How many SSD disks are there?

cluster1::> storage disk show -type ssd

Usable Disk Container Container

Disk Size Shelf Bay Type Type Name Owner

---------------- ---------- ----- --- ------- ----------- --------- --------

NET-1.29 520.5MB - 24 SSD spare Pool0 cluster1-01

NET-1.30 520.5MB - 25 SSD spare Pool0 cluster1-01

NET-1.31 520.5MB - 26 SSD spare Pool0 cluster1-01

NET-1.32 520.5MB - 27 SSD spare Pool0 cluster1-01

NET-1.33 520.5MB - 28 SSD spare Pool0 cluster1-01

NET-1.34 520.5MB - 29 SSD spare Pool0 cluster1-01

NET-1.35 520.5MB - 32 SSD spare Pool0 cluster1-01

NET-1.38 520.5MB - 16 SSD spare Pool0 cluster1-01

NET-1.39 520.5MB - 17 SSD spare Pool0 cluster1-01

NET-1.40 520.5MB - 18 SSD spare Pool0 cluster1-01

NET-1.41 520.5MB - 19 SSD spare Pool0 cluster1-01

NET-1.42 520.5MB - 20 SSD spare Pool0 cluster1-01

NET-1.43 520.5MB - 21 SSD spare Pool0 cluster1-01

NET-1.44 520.5MB - 22 SSD spare Pool0 cluster1-01

14 entries were displayed.

There are 14 SSD disks.

2. Aggregate 0 on C1N1 uses which disks?

cluster1::> storage disk show -aggregate ?

aggr0_cluster1_01

aggr0_cluster1_02

cluster1::> storage disk show -aggregate aggr0_cluster1_01

Usable Disk Container Container

Disk Size Shelf Bay Type Type Name Owner

---------------- ---------- ----- --- ------- ----------- --------- --------

NET-1.1 1020MB - 16 FCAL aggregate aggr0_cluster1_01 cluster1-01

NET-1.9 1020MB - 16 FCAL aggregate aggr0_cluster1_01 cluster1-01

NET-1.10 1020MB - 17 FCAL aggregate aggr0_cluster1_01 cluster1-01

NET-1.36 1020MB - 16 FCAL aggregate aggr0_cluster1_01 cluster1-01

4 entries were displayed.

3. Rename the Aggregate 0 aggregates as aggr0_C1N1 and aggr0_C1N2.

cluster1::> storage aggregate rename -aggregate aggr0_cluster1_01 -newname aggr0_C1N1

[Job 30] Job succeeded: DONE

cluster1::> storage aggregate rename -aggregate aggr0_cluster1_02 -newname aggr0_C1N2

[Job 31] Job succeeded: DONE

4. Create a new aggregate owned by C1N1 named aggr1_C1N1. Use RAID-TEC and the minimum number of FCAL disks.

cluster1::> storage aggregate create -aggregate aggr1_C1N1 -diskcount 7 -disktype FCAL -raidtype raid_tec -node cluster1-01

Info: The layout for aggregate "aggr1_C1N1" on node "cluster1-01" would be:

First Plex

RAID Group rg0, 7 disks (block checksum, raid_tec)

Usable Physical

Position Disk Type Size Size

---------- ------------------------- ---------- -------- --------

tparity NET-1.37 FCAL - -

dparity NET-1.2 FCAL - -

parity NET-1.11 FCAL - -

data NET-1.45 FCAL 1000MB 1.00GB

data NET-1.3 FCAL 1000MB 1.00GB

data NET-1.12 FCAL 1000MB 1.00GB

data NET-1.46 FCAL 1000MB 1.00GB

Aggregate capacity available for volume use would be 3.52GB.

Do you want to continue? {y|n}: y

[Job 32] Job succeeded: DONE

5. What is the available size of the new aggregate?

cluster1::> storage aggregate show -aggregate aggr1_C1N1

Aggregate: aggr1_C1N1

Storage Type: hdd

Checksum Style: block

Number Of Disks: 7

Mirror: false

Disks for First Plex: NET-1.2, NET-1.11,

NET-1.45, NET-1.3,

NET-1.12, NET-1.46,

NET-1.37

Disks for Mirrored Plex: -

Partitions for First Plex: -

Partitions for Mirrored Plex: -

Node: cluster1-01

Free Space Reallocation: off

HA Policy: sfo

Ignore Inconsistent: off

Space Reserved for Snapshot Copies: -

Aggregate Nearly Full Threshold Percent: 95%

Aggregate Full Threshold Percent: 98%

Checksum Verification: on

RAID Lost Write: on

Enable Thorough Scrub: off

Hybrid Enabled: false

Available Size: 3.51GB

Checksum Enabled: true

Checksum Status: active

Cluster: cluster1

Home Cluster ID: dbdbf221-22b4-11e9-996b-000c296f941d

DR Home ID: -

Press <space> to page down, <return> for next line, or 'q' to quit... q

The available size is 3.51GB.

6. What is the RAID group size?

cluster1::> storage aggregate show -aggregate aggr1_C1N1

Aggregate: aggr1_C1N1

Storage Type: hdd

Checksum Style: block

Number Of Disks: 7

Mirror: false

Disks for First Plex: NET-1.2, NET-1.11,

NET-1.45, NET-1.3,

NET-1.12, NET-1.46,

NET-1.37

Disks for Mirrored Plex: -

Partitions for First Plex: -

Partitions for Mirrored Plex: -

Node: cluster1-01

Free Space Reallocation: off

HA Policy: sfo

Ignore Inconsistent: off

Space Reserved for Snapshot Copies: -

Aggregate Nearly Full Threshold Percent: 95%

Aggregate Full Threshold Percent: 98%

Checksum Verification: on

RAID Lost Write: on

Enable Thorough Scrub: off

Hybrid Enabled: false

Available Size: 3.51GB

Checksum Enabled: true

Checksum Status: active

Cluster: cluster1

Home Cluster ID: dbdbf221-22b4-11e9-996b-000c296f941d

DR Home ID: -

DR Home Name: -

Inofile Version: 4

Has Mroot Volume: false

Has Partner Node Mroot Volume: false

Home ID: 4082368507

Home Name: cluster1-01

Total Hybrid Cache Size: 0B

Hybrid: false

Inconsistent: false

Is Aggregate Home: true

Max RAID Size: 24

Flash Pool SSD Tier Maximum RAID Group Size: -

Owner ID: 4082368507

Owner Name: cluster1-01

Used Percentage: 0%

Plexes: /aggr1_C1N1/plex0

RAID Groups: /aggr1_C1N1/plex0/rg0 (block)

RAID Lost Write State: on

RAID Status: raid_tec, normal

RAID Type: raid_tec

SyncMirror Resync Snapshot Frequency in Minutes: 5

Is Root: false

Space Used by Metadata for Volume Efficiency: 0B

Size: 3.52GB

State: online

Maximum Write Alloc Blocks: 0

Used Size: 156KB

Uses Shared Disks: false

UUID String: 17597c5c-9184-44b0-81f7-46b68f9413e6

Number Of Volumes: 0

Press <space> to page down, <return> for next line, or 'q' to quit... q

The maximum RAID group size is 24 (Max RAID Size). The single RAID group rg0 currently contains 7 disks.

Flash Pool and Storage Pools

7. Enable Flash Pool on aggr1_C1N1. Use 4 SSD drives and RAID-4.

cluster1::> storage aggregate modify -aggregate aggr1_C1N1 -hybrid-enabled true

cluster1::> storage aggregate add-disks -aggregate aggr1_C1N1 -disktype ssd -diskcount 4 -raidtype raid4

Info: Disks would be added to aggregate "aggr1_C1N1" on node "cluster1-01" in the following manner:

First Plex

RAID Group rg1, 4 disks (block checksum, raid4)

Usable Physical

Position Disk Type Size Size

---------- ------------------------- ---------- -------- --------

parity NET-1.38 SSD - -

data NET-1.39 SSD 500MB 527.6MB

data NET-1.40 SSD 500MB 527.6MB

data NET-1.41 SSD 500MB 527.6MB

Aggregate capacity available for volume use would be increased by 1.32GB.

Do you want to continue? {y|n}: y

8. Verify Flash Pool has been enabled on the aggregate. What is the cache size?

cluster1::> storage aggregate show -aggregate aggr1_C1N1

Aggregate: aggr1_C1N1

Storage Type: hybrid

Checksum Style: block

Number Of Disks: 11

Mirror: false

Disks for First Plex: NET-1.2, NET-1.11,

NET-1.45, NET-1.3,

NET-1.12, NET-1.46,

NET-1.37, NET-1.38,

NET-1.39, NET-1.40,

NET-1.41

Disks for Mirrored Plex: -

Partitions for First Plex: -

Partitions for Mirrored Plex: -

Node: cluster1-01

Free Space Reallocation: off

HA Policy: sfo

Ignore Inconsistent: off

Space Reserved for Snapshot Copies: -

Aggregate Nearly Full Threshold Percent: 95%

Aggregate Full Threshold Percent: 98%

Checksum Verification: on

RAID Lost Write: on

Enable Thorough Scrub: off

Hybrid Enabled: true

Available Size: 3.51GB

Checksum Enabled: true

Checksum Status: active

Cluster: cluster1

Home Cluster ID: dbdbf221-22b4-11e9-996b-000c296f941d

DR Home ID: -

DR Home Name: -

Inofile Version: 4

Has Mroot Volume: false

Has Partner Node Mroot Volume: false

Home ID: 4082368507

Home Name: cluster1-01

Total Hybrid Cache Size: 1.46GB

Hybrid: true

Inconsistent: false

Is Aggregate Home: true

Max RAID Size: 24

Flash Pool SSD Tier Maximum RAID Group Size: 8

Owner ID: 4082368507

Owner Name: cluster1-01

Used Percentage: 0%

Plexes: /aggr1_C1N1/plex0

RAID Groups: /aggr1_C1N1/plex0/rg0 (block)

/aggr1_C1N1/plex0/rg1 (block)

RAID Lost Write State: on

RAID Status: mixed_raid_type, hybrid, normal

RAID Type: mixed_raid_type

SyncMirror Resync Snapshot Frequency in Minutes: 5

Is Root: false

Space Used by Metadata for Volume Efficiency: 0B

Size: 3.52GB

State: online

Maximum Write Alloc Blocks: 0

Used Size: 176KB

Press <space> to page down, <return> for next line, or 'q' to quit... q

Flash Pool is enabled (Hybrid Enabled: true) and the total cache size is 1.46GB.

9. How much did the available capacity increase on the aggregate?

The capacity is still 3.51GB. Flash Pool does not increase the available capacity of an aggregate.

10. Create a storage pool named StoragePool1 using 6 SSD disks. Use the ‘simulate’ option if using the CLI. Note that creation will fail in System Manager when using the ONTAP simulator.

cluster1::> storage pool create -storage-pool StoragePool1 -disk-count 6 -simulate true

Info: Storage pool "StoragePool1" will be created as follows:

Disk Size Type Owner

---------------- ---------- ------- ---------------------

NET-1.42 520.5MB SSD cluster1-01

NET-1.43 520.5MB SSD cluster1-01

NET-1.44 520.5MB SSD cluster1-01

NET-1.29 520.5MB SSD cluster1-01

NET-1.30 520.5MB SSD cluster1-01

NET-1.31 520.5MB SSD cluster1-01

Allocation Unit Size: 702MB

Additional Resources

How RAID groups work: https://library.netapp.com/ecmdocs/ECMP1368859/html/GUID-5133D218-97F8-4043-B2DC-00B3C89A8948.html

Aggregates and RAID groups: https://docs.netapp.com/us-en/ontap/concepts/aggregates-raid-groups-concept.html


Text by Libby Teofilo, Technical Writer at www.flackbox.com
