In this NetApp tutorial, I’ll wrap up the section by covering best practices for VMware vSphere on ONTAP storage. Scroll down for the video and also the text tutorial.

NetApp VMware vSphere on ONTAP Best Practice Video Tutorial


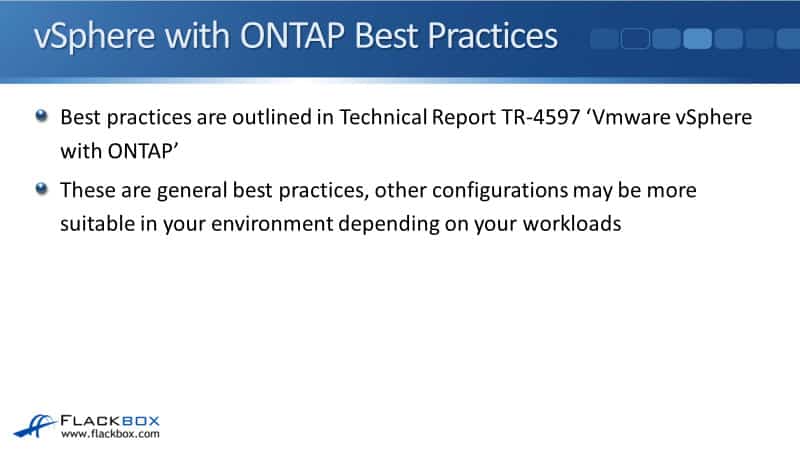

Best practices are covered in NetApp Technical Report TR-4597, VMware vSphere with ONTAP, and most of the best practices I’ll be talking about come directly from that report.

The report gives general best practices. Other configurations may be more suitable for your environment, depending on your particular workloads, but in general these are the settings you’ll want to use.

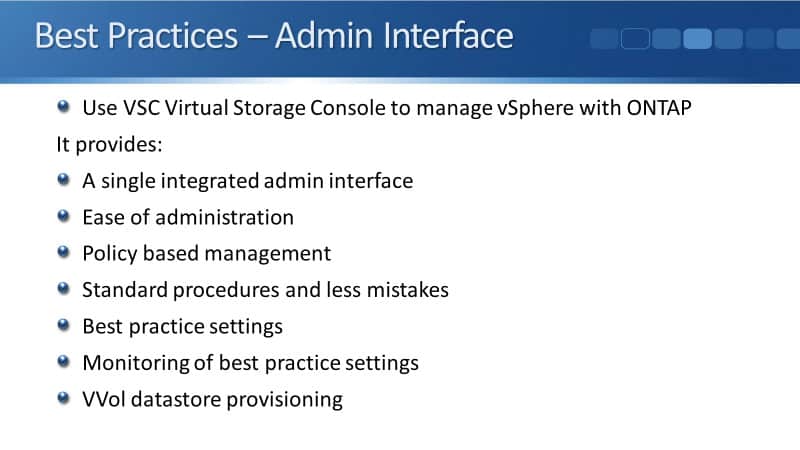

Best Practices – Admin Interface

First, use Virtual Storage Console (VSC) to manage vSphere with ONTAP, because it provides a single integrated admin interface. VSC is a NetApp plug-in for VMware vSphere that lets you manage your ONTAP storage for vSphere from the vSphere client.

It simplifies administration and supports policy-based management and standard procedures, so there are fewer mistakes and less troubleshooting. It also lets you push best practice settings to your ESXi hosts, and it monitors those settings to make sure they are still configured.

If somebody changes those settings by accident, VSC alerts you, so you’ll know about it and can set them back. Also, if you’re using VVols, you need to use VSC to provision VVol datastores.
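The drift alerting described above boils down to comparing each host’s current settings against a baseline. The Python sketch below only illustrates that idea and is not how VSC is implemented; the setting names and values in `BASELINE` are hypothetical placeholders, and `find_drift` is an illustrative helper.

```python
from typing import Dict, List

# Hypothetical baseline: these names and values are placeholders,
# NOT VSC's actual recommended ESXi settings.
BASELINE: Dict[str, int] = {
    "example.setting.a": 256,
    "example.setting.b": 32,
}


def find_drift(host_settings: Dict[str, int],
               baseline: Dict[str, int] = BASELINE) -> List[str]:
    """Return the baseline settings that are missing or changed on a host."""
    drifted = [name for name, expected in baseline.items()
               if host_settings.get(name) != expected]
    return sorted(drifted)


# One setting was changed by accident, so it is reported.
print(find_drift({"example.setting.a": 256, "example.setting.b": 64}))  # ['example.setting.b']
```

A non-empty result is the point at which a tool like VSC would raise an alert so you can set the value back.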

Best Practices – VSC Configuration

Use the NetApp Interoperability Matrix to confirm compatibility between software versions. The version of vSphere you’re running, the version of ESXi running on your host, and the version of ONTAP running on your storage should all be compatible.

Moreover, if you use NFS for your datastores, install the NFS VAAI plug-in on your ESXi hosts. Install the guest OS scripts on your virtual machine guests, or use VMware Tools. Also, enable the VASA Provider, which is enabled by default in the latest version of VSC. Lastly, if you’re using VVols, register OnCommand API Services with the VASA Provider so you can see the VVol dashboard and reports.

Traditional Datastores or VVols

Should you use traditional datastores or VVols? VVols have been slow to catch on, but they let you manage storage at the granularity of individual virtual disks. They also provide enhanced features, such as VMware-managed NetApp Snapshot copies and support for Adaptive QoS. You don’t get those with traditional datastores, so you should consider implementing VVols in your environment.

Storage Protocol Choice

For your vSphere datastores, you can use NFS, or VMFS over any SAN protocol. NetApp testing has shown little performance difference between the protocols.

When choosing a protocol, consider your current storage infrastructure, your staff’s skills, and the cost of any upgrades. What are you already using for existing storage? Does it run over IP or Fibre Channel, for example?

If you’re already using NAS protocols for home folders and similar data, you’ll probably use NAS for VMware too. If you went with Fibre Channel instead, you’d have to upgrade the hardware in your network and train your staff on it. It’s easier to use your existing Ethernet network and NFS if that’s what you’re already using.

Storage Protocol Choice – NFS Benefits

The official line from NetApp is that you can use any protocol you want to, and it doesn’t affect performance. But if you read the technical report, you’ll see that it gently nudges you towards using NFS.

One benefit of NFS is that ONTAP is aware of the VMDK files in the datastore. You don’t get that with traditional SAN datastores, although you do get it with SAN if you’re using VVols.

Provisioning can also be easier with an NFS NIC than with an HBA, because a NIC is easier to configure and less likely to require firmware management. In addition, thin provisioning, deduplication, and cloning return their savings immediately, which makes capacity management easier.

Furthermore, NFS volumes can easily be grown or shrunk, whereas SAN datastores can easily be grown but not shrunk. So again, the choice of protocol is yours, but I do gently nudge you towards NFS.

Best Practices – Datastore Sizing

Larger datastores can improve storage efficiency. Storage efficiency works at the volume level, so the more data you have in a volume, the more of it can be deduplicated. However, aggregate-level deduplication on AFF reduces the impact of this.

With VMware, you typically get excellent deduplication savings because many of your virtual machines will be similar. For example, lots of them run the same operating system and the same applications. If they’re all in the same volume and datastore, you’ll get good deduplication.

If they’re in different volumes, you’ll still get excellent deduplication as long as you’re using aggregate-level deduplication and those volumes are in the same aggregate. Larger datastores are also easier to manage, simply because there are fewer of them.
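To make the volume-level versus aggregate-level point concrete, here is a toy Python model that treats each volume as a list of block fingerprints and counts how many unique blocks must be stored. It is a simplification for illustration, assuming identical strings stand for identical data blocks; real ONTAP deduplication works on 4 KB blocks with its own metadata and scheduling.

```python
from typing import Dict, List


def unique_blocks_per_volume(volumes: Dict[str, List[str]]) -> int:
    """Volume-level dedup: duplicate blocks are shared only within each volume."""
    return sum(len(set(blocks)) for blocks in volumes.values())


def unique_blocks_aggregate(volumes: Dict[str, List[str]]) -> int:
    """Aggregate-level dedup: duplicates are shared across all volumes in the aggregate."""
    return len({block for blocks in volumes.values() for block in blocks})


# Hypothetical example: VMs in both volumes run the same operating system.
volumes = {
    "vol1": ["os-block", "os-block", "app1-block"],
    "vol2": ["os-block", "app2-block"],
}
print(unique_blocks_per_volume(volumes))  # 4
print(unique_blocks_aggregate(volumes))   # 3
```

The shared OS block is stored once per volume with volume-level deduplication, but only once overall when deduplication spans the aggregate.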

To get maximum performance from the hardware resources, consider using at least 4 datastores (or, if you’re using VVols, at least 4 volumes) to store your VMs on a single ONTAP controller. This approach also lets you set up datastores with different recovery policies.

For example, you could back up or replicate some datastores more frequently than others. So although fewer, larger datastores are generally better, you do want at least 4 of them.

The recommended datastore size is between 4 and 8 terabytes. That size is a good balance point for performance, ease of management, and data protection: it’s big enough to hold lots of virtual machines but small enough to recover quickly in case of a disaster.
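The two sizing rules above (at least 4 datastores per controller, each ideally no larger than 8 terabytes) can be combined into a quick planning calculation. This Python sketch is a hypothetical helper, not a NetApp tool; it assumes capacity is split evenly across datastores on one controller.

```python
import math
from typing import Tuple

MIN_DATASTORES = 4          # per ONTAP controller, per the guidance above
MAX_RECOMMENDED_TB = 8.0    # upper end of the 4 to 8 TB recommendation


def plan_datastores(total_tb: float) -> Tuple[int, float]:
    """Return (datastore count, size of each in TB) for one controller."""
    if total_tb <= 0:
        raise ValueError("total capacity must be positive")
    # Enough datastores to stay at or under 8 TB each, but never fewer than 4.
    count = max(MIN_DATASTORES, math.ceil(total_tb / MAX_RECOMMENDED_TB))
    return count, total_tb / count


print(plan_datastores(20))  # (4, 5.0)
print(plan_datastores(60))  # (8, 7.5)
```

In small environments the 4-datastore minimum wins, so individual datastores can end up below 4 terabytes; that trade-off favours spreading load across the controller’s resources.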

Also, enable autosize so that the volume automatically grows and shrinks as used space changes. If you’re using VSC, autosize is enabled by default.
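Autosize reacts to how full the volume is. The Python sketch below is a simplified model of that decision, assuming a grow threshold of 85% and a shrink threshold of 50% used space; treat those numbers as assumptions and check your volume’s actual autosize settings in ONTAP. The real feature also respects configured maximum and minimum sizes, which this sketch ignores.

```python
def autosize_action(used_gb: float, size_gb: float,
                    grow_pct: float = 85.0, shrink_pct: float = 50.0) -> str:
    """Return 'grow', 'shrink', or 'none' for a simplified grow/shrink model.

    grow_pct and shrink_pct are assumed thresholds, not authoritative ONTAP values.
    """
    used_pct = 100.0 * used_gb / size_gb
    if used_pct >= grow_pct:
        return "grow"
    if used_pct <= shrink_pct:
        return "shrink"
    return "none"


print(autosize_action(900, 1000))  # grow
print(autosize_action(700, 1000))  # none
```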

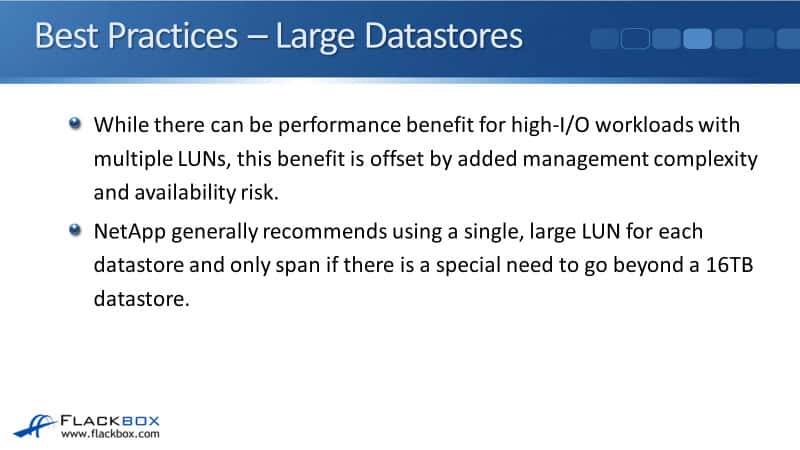

Best Practices – Large Datastores

The recommended datastore size is 4 to 8 terabytes. But if a workload in your particular environment requires a larger datastore than that, NFS datastores can be up to 100 terabytes in size, and VMFS datastores can be up to 64 terabytes.

If SAN is required, note that the ONTAP maximum LUN size is 16 terabytes. A maximum-size VMFS datastore of 64 terabytes is therefore created by spanning 4 separate 16-terabyte LUNs.

While multiple LUNs can give performance benefits for high-I/O workloads, the added management complexity and availability risk offset this benefit. As a result, NetApp generally recommends using a single large LUN for each datastore and only spanning when there’s a special need to go beyond a 16-terabyte datastore.

To summarise, the recommended datastore size is 4 to 8 terabytes. If you need larger datastores, NFS datastores can be up to 100 terabytes and VMFS datastores up to 64 terabytes.

The maximum size of a LUN is 16 terabytes, so going above a 16-terabyte datastore is not recommended unless your environment requires a large datastore and you also have to use a SAN protocol. In that case you can span the datastore across multiple LUNs, but that’s not generally the recommended approach.
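The spanning arithmetic above is simply the datastore size divided by the 16-terabyte LUN maximum, rounded up. This Python sketch uses the limits exactly as stated in this article (from TR-4597); newer ONTAP and vSphere releases may differ, so the constants are assumptions to verify against current documentation. `luns_needed` and `nfs_size_ok` are illustrative helpers.

```python
import math

# Limits as stated in this article (TR-4597); verify against current docs.
MAX_NFS_DATASTORE_TB = 100
MAX_VMFS_DATASTORE_TB = 64
MAX_LUN_TB = 16


def luns_needed(vmfs_size_tb: float) -> int:
    """How many LUN extents a VMFS datastore of this size would span."""
    if not 0 < vmfs_size_tb <= MAX_VMFS_DATASTORE_TB:
        raise ValueError(f"VMFS datastore must be >0 and <= {MAX_VMFS_DATASTORE_TB} TB")
    return math.ceil(vmfs_size_tb / MAX_LUN_TB)


def nfs_size_ok(size_tb: float) -> bool:
    """True if an NFS datastore of this size is within the stated maximum."""
    return 0 < size_tb <= MAX_NFS_DATASTORE_TB


print(luns_needed(8))   # 1: a single LUN, the recommended layout
print(luns_needed(64))  # 4: spans 4 x 16 TB LUNs
```

Anything returning more than 1 from `luns_needed` is the spanned case that NetApp only recommends when there is a special need.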

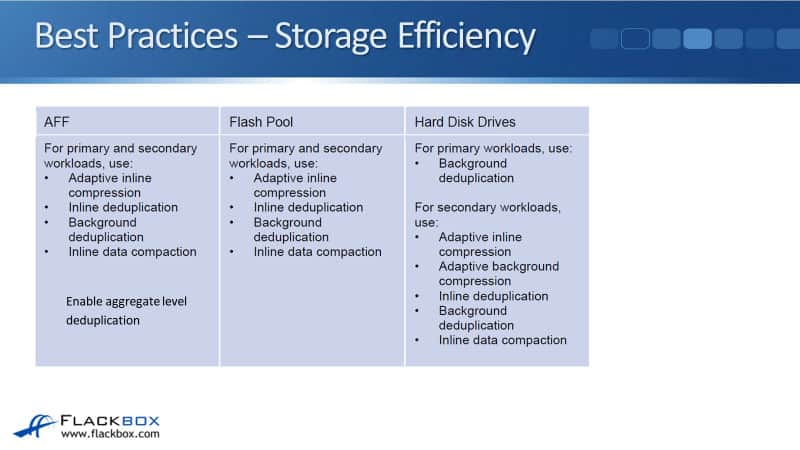

Best Practices – Storage Efficiency

Next up is storage efficiency, and as you’ll see here, pretty much everything is at the default. On AFF, use and enable adaptive inline compression, inline deduplication, background deduplication, and inline data compaction. Also enable aggregate-level deduplication.

If you’re using Flash Pool on FAS, use and enable adaptive inline compression, inline deduplication, background deduplication, and inline data compaction.

If you’re using a hard disk drive (HDD) aggregate on FAS, use just background deduplication for primary workloads that require higher performance. For secondary workloads, use adaptive inline compression, adaptive background compression, inline deduplication, background deduplication, and inline data compaction.
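The recommendations above amount to a lookup by platform and workload type. This Python sketch just encodes them as data for quick reference; the platform labels (`"AFF"`, `"FAS Flash Pool"`, `"FAS HDD"`) are hypothetical strings chosen for the example, not ONTAP identifiers, and the function does not configure anything.

```python
from typing import FrozenSet

# Features common to the AFF and Flash Pool recommendations above.
INLINE_SET: FrozenSet[str] = frozenset({
    "adaptive inline compression",
    "inline deduplication",
    "background deduplication",
    "inline data compaction",
})


def efficiency_features(platform: str, primary: bool = True) -> FrozenSet[str]:
    """Return the recommended efficiency features for a platform and workload."""
    if platform == "AFF":
        return INLINE_SET | {"aggregate-level deduplication"}
    if platform == "FAS Flash Pool":
        return INLINE_SET
    if platform == "FAS HDD":
        if primary:  # higher-performance primary workloads
            return frozenset({"background deduplication"})
        return INLINE_SET | {"adaptive background compression"}
    raise ValueError(f"unknown platform: {platform}")


print(sorted(efficiency_features("FAS HDD")))  # ['background deduplication']
```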

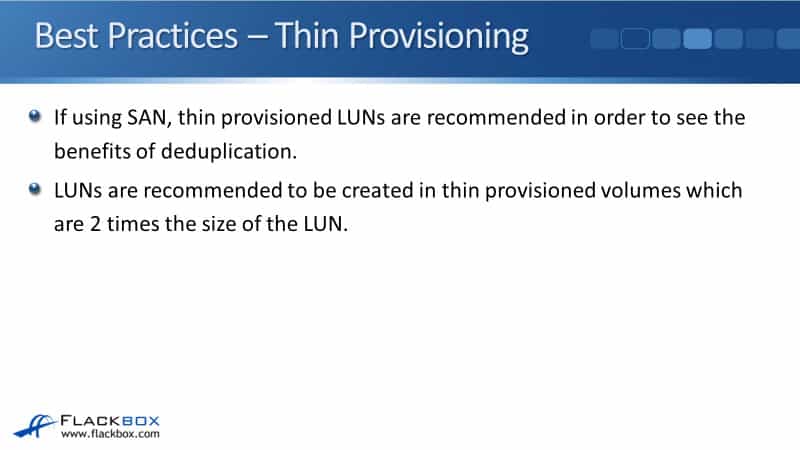

Best Practices – Thin Provisioning

Thin provisioning is the default and is recommended for ONTAP NFS volumes. On the vSphere side, thin-provisioned VMDKs are also generally recommended, depending on the application.

For SAN, thin-provisioned LUNs are recommended so that you see the benefits of deduplication. It’s also recommended to create LUNs in thin-provisioned volumes that are 2 times the size of the LUN.
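The volume sizing rule for SAN is a simple multiplication. This Python helper is hypothetical and only applies the 2x ratio stated above; because both the LUN and its volume are thin-provisioned, this is the provisioned size, not space consumed up front.

```python
def volume_size_for_lun(lun_size_gb: float, ratio: float = 2.0) -> float:
    """Size for the thin-provisioned volume holding one LUN (2x the LUN by default)."""
    if lun_size_gb <= 0:
        raise ValueError("LUN size must be positive")
    return lun_size_gb * ratio


print(volume_size_for_lun(2048))  # 4096.0: a 2 TB LUN goes in a 4 TB volume
```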

Best Practices – Networking

For networking, use a dedicated network for storage traffic, with no other traffic on that network. The ESXi hosts and the SVM LIFs on the ONTAP side should be on the same IP subnet.

That way, storage traffic doesn’t pass through a router; it goes directly between the ESXi hosts and the SVM LIFs. Also, follow general networking best practices, such as redundant paths and one LIF per node, per SVM.
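Before mounting datastores, you can sanity-check an addressing plan against these rules. This Python sketch uses the standard `ipaddress` module; the subnet, addresses, and node names are hypothetical examples, and in practice you would pull them from your own inventory.

```python
import ipaddress
from typing import Iterable, Set


def all_on_storage_subnet(subnet: str, addresses: Iterable[str]) -> bool:
    """True if every ESXi storage VMkernel address and SVM LIF address is in `subnet`."""
    net = ipaddress.ip_network(subnet)
    return all(ipaddress.ip_address(addr) in net for addr in addresses)


def nodes_without_lif(nodes: Iterable[str], lif_home_nodes: Iterable[str]) -> Set[str]:
    """Nodes that have no data LIF for this SVM (one LIF per node, per SVM)."""
    return set(nodes) - set(lif_home_nodes)


esxi_vmk = ["192.168.50.11", "192.168.50.12"]   # hypothetical ESXi storage ports
svm_lifs = ["192.168.50.21", "192.168.50.22"]   # hypothetical SVM data LIFs
print(all_on_storage_subnet("192.168.50.0/24", esxi_vmk + svm_lifs))  # True
print(nodes_without_lif(["node1", "node2"], ["node1", "node2"]))      # set()
```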

Best Practices – NFS

If you’re using NFS as a protocol, use FlexVol volumes for your NFS datastores, not qtrees or FlexGroup volumes. All ESXi hosts mounting an NFS datastore must use the same protocol version, either NFSv3 or NFSv4.1.

If hosts using different protocol versions connect to the same datastore, the datastore can be corrupted. Make sure that doesn’t happen by using Host Profiles in VMware.
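Host Profiles enforce consistency going forward; to audit an existing environment, you can group mounts by datastore and flag any datastore mounted with more than one NFS version. This Python sketch assumes a hypothetical inventory of `(host, datastore, nfs_version)` tuples gathered however you normally collect them.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, List, Set, Tuple


def mixed_version_datastores(mounts: List[Tuple[str, str, str]]) -> Dict[str, Set[str]]:
    """Return datastores that are mounted with more than one NFS version."""
    versions: DefaultDict[str, Set[str]] = defaultdict(set)
    for _host, datastore, version in mounts:
        versions[datastore].add(version)
    return {ds: v for ds, v in versions.items() if len(v) > 1}


mounts = [  # hypothetical inventory
    ("esx1", "ds1", "3"), ("esx2", "ds1", "4.1"),
    ("esx1", "ds2", "3"), ("esx2", "ds2", "3"),
]
print(mixed_version_datastores(mounts))  # ds1 is at risk: mounted as both 3 and 4.1
```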

Best Practices – VSC Backup

For backup, you should obviously back up your vSphere environment, and you can use SnapCenter to do that. You should also provide redundancy for the VSC virtual machine through the standard vSphere High Availability or Fault Tolerance features.

VSC runs as a virtual machine, so you can use VMware’s built-in High Availability or Fault Tolerance to provide redundancy for it. There’s nothing special about it; it works the standard way it always does in VMware.

Information in VSC and the VASA Provider can change frequently, so take snapshots at least every hour, and retain them long enough to discover and resolve any problems.
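A schedule can be checked against that guidance, and the number of copies it implies worked out, with a few lines of Python. This is a hypothetical sketch: the article only says to retain snapshots "long enough", so `min_retention` is a value you choose for your environment, not a NetApp figure.

```python
import math
from datetime import timedelta

MAX_INTERVAL = timedelta(hours=1)  # "take snapshots at least every hour"


def schedule_ok(interval: timedelta, retention: timedelta,
                min_retention: timedelta) -> bool:
    """Check snapshots run at least hourly and are kept at least min_retention."""
    return interval <= MAX_INTERVAL and retention >= min_retention


def copies_to_keep(interval: timedelta, retention: timedelta) -> int:
    """Number of snapshot copies retained for a given interval and retention window."""
    return math.ceil(retention / interval)


print(copies_to_keep(timedelta(hours=1), timedelta(days=2)))  # 48
```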

Best Practices – General

Finally, for some general best practices: use a guest-owned file system or Raw Device Mapping (RDM) if that is recommended for the guest application, and for encryption, use NetApp encryption rather than vSphere encryption.

Also, if Storage DRS (Distributed Resource Scheduler) is enabled, set it to manual. Storage DRS automatically rebalances your virtual machines across the available datastores.

If you allow it to run automatically, those moves can lose storage efficiency benefits and lock up space in Snapshot copies. So either disable it or, if you do have it turned on, set it to manual so you can check any moves before they actually occur.

Additional Resources

VMware vSphere with ONTAP Best Practices (NetApp Solutions): https://docs.netapp.com/us-en/netapp-solutions/pdfs/sidebar/VMware_vSphere_with_ONTAP_Best_Practices.pdf

NetApp and VMware vSphere Storage Best Practices: https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/partners/netapp/vmware-netapp-and-vmware-vsphere-storage-best-practices.pdf

Best Practices for Running VMware vSphere on NetApp ONTAP: https://datacenterrookie.wordpress.com/2017/06/28/best-practices-for-running-vmware-vsphere-on-netapp-ontap/

Libby Teofilo

Text by Libby Teofilo, Technical Writer at www.flackbox.com

Libby’s passion for technology drives her to constantly learn and share her insights. When she’s not immersed in the tech world, she’s either lost in a good book with a cup of coffee or out exploring on her next adventure. Always curious, always inspired.