In this NetApp training tutorial, I will cover MultiPath Input/Output (MPIO), Device-specific Modules (DSM), NetApp Host Utilities, Asymmetric Logical Unit Access (ALUA), and Selective LUN Mapping (SLM). Together these allow the initiator (the SAN client) to discover and correctly use multiple paths to the storage system.

MultiPath Input/Output (MPIO)

Let’s start with MPIO. Multipath I/O solutions use multiple physical paths between the storage system and the host. If a path fails, the MPIO system switches I/O to other paths so that hosts can still access their data. This provides load balancing and redundancy.

MPIO software is required whenever there are multiple paths between a storage system and a host, so that the host sees all of the paths as connecting to a single virtual disk. Without MPIO, the client operating system may treat each path as a separate disk. If you have configured a single LUN for a client but it reports multiple identical disks, you have a multipathing configuration issue on the host itself that you need to fix.
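
To illustrate the idea, here is a minimal Python sketch of what multipathing does conceptually: it groups the per-path devices that share the same LUN identity into one disk. The device names and the serial number are hypothetical, and this is not how any particular MPIO implementation is written.

```python
from collections import defaultdict

# Hypothetical records: (device name seen by the OS, LUN serial number).
# Four paths to one LUN show up as four devices when multipathing is missing.
discovered = [
    ("disk1", "LUN-SERIAL-A"),
    ("disk2", "LUN-SERIAL-A"),
    ("disk3", "LUN-SERIAL-A"),
    ("disk4", "LUN-SERIAL-A"),
]


def group_paths_by_lun(paths):
    """Collapse per-path devices into one entry per LUN serial number."""
    grouped = defaultdict(list)
    for device, serial in paths:
        grouped[serial].append(device)
    return dict(grouped)


virtual_disks = group_paths_by_lun(discovered)
print(len(discovered), "raw devices ->", len(virtual_disks), "multipath disk(s)")
```

If the host shows as many disks as it has paths, the grouping step is the part that is not happening.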

Windows MPIO and Device Specific Module (DSM)

On a Windows system there are two main components to any MPIO solution: the Device Specific Module (DSM) and the Windows MPIO components. The MPIO software presents a single disk to the operating system for all paths, and the DSM manages the path failover.

To connect to NetApp storage, enable Windows MPIO on your Windows host. For the DSM you can use the Windows native DSM (which is enabled along with MPIO), or you can install the ONTAP DSM for Windows MPIO. You can download this from the NetApp website. Current Windows versions have full NetApp support so you can use either.

The DSM includes storage system specific intelligence needed to identify paths and to manage path failure and recovery. The NetApp DSM sets host SAN parameters to the recommended settings for NetApp and also includes reporting tools.

NetApp Host Utilities

Other software which can be downloaded from the NetApp website includes NetApp Host Utilities. This also sets recommended SAN parameters, such as timers, on your host computer and on certain host bus adapters.

Hosts can connect to LUNs on NetApp storage systems without installing the Host Utilities. To ensure the best performance, and that the host will successfully handle storage system failover events, it is however recommended to install Host Utilities or the NetApp DSM.

You can install either one or both. You could also install neither and still be able to connect to your NetApp storage from the Windows client, but then the host would not have the NetApp recommended settings applied for you.

Unlike the NetApp DSM, NetApp Host Utilities does not require a license. To download the software, visit www.netapp.com. Click on the Sign In link, then log in to the Support site using your username and password. Once the page loads, hover over the Downloads link, then click on the Software option. When the Software page loads, scroll down and look for Host Utilities SAN. The Host Utilities software is available for various flavors of UNIX, Linux and Windows. You can scroll down the page to see the other options such as Multipath I/O, and the NetApp DSM which is only available for Windows.

Asymmetric Logical Unit Access (ALUA)

Now let’s discuss Asymmetric Logical Unit Access (ALUA) and selective LUN mapping. ALUA is an industry-standard protocol for identifying optimized paths between a storage system target and a host initiator. It enables the initiator to query the target about path attributes, and the target to communicate events back to the initiator.

Direct paths to the node owning the LUN are marked as optimized paths and you can configure the initiator to prefer them. Other paths are marked as non-optimized paths. If all of the optimized paths fail, the DSM will automatically switch to the non-optimized paths, maintaining the host's access to its storage. On a NetApp FAS system that traffic then crosses the cluster interconnect, so it's preferable to use the optimized paths that terminate on the node that actually owns the LUN. ALUA is enabled by default on Clustered ONTAP.
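
The preference and fallback behavior can be sketched in a few lines of Python. This is only an illustration of the selection rule described above, not the DSM's actual logic; the `Path` class and the path names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Path:
    name: str
    optimized: bool       # ALUA state reported by the target for this path
    healthy: bool = True  # set to False when the path fails


def usable_paths(paths):
    """Return healthy optimized paths, or healthy non-optimized ones if none remain."""
    healthy = [p for p in paths if p.healthy]
    optimized = [p for p in healthy if p.optimized]
    return optimized if optimized else healthy


paths = [
    Path("owner-fabricA", optimized=True),
    Path("owner-fabricB", optimized=True),
    Path("partner-fabricA", optimized=False),
    Path("partner-fabricB", optimized=False),
]

before = [p.name for p in usable_paths(paths)]

# Simulate the loss of every optimized path.
for p in paths:
    if p.optimized:
        p.healthy = False

after = [p.name for p in usable_paths(paths)]
print("before failure:", before)
print("after failure: ", after)
```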

Selective LUN Mapping (SLM)

We also have Selective LUN Mapping (SLM), which reduces the number of paths from the host to the LUN to keep things cleaner. With SLM, when a new LUN map is created, the LUN is accessible only through paths on the node owning the LUN and its HA partner. The optimized paths that terminate on the node owning the LUN will be preferred. The non-optimized paths will be on the HA partner.

For example, if we had Fabric A and Fabric B, four nodes, and each node connected to both fabrics, then with Selective LUN Mapping we would have two optimized paths terminating on the node that owns the LUN, two non-optimized paths terminating on the HA partner, and the four paths on the other two nodes would not be reported. That provides enough paths for our load balancing and redundancy, while keeping things clean and not reporting too many paths.
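
The path arithmetic in that example can be checked with a short Python sketch. The node and fabric names are placeholders, and the function only models which paths are reported, assuming one path per node per fabric.

```python
def reported_paths(nodes, fabrics, owner, ha_partner):
    """Split every node/fabric path into optimized, non-optimized, and not reported."""
    optimized, non_optimized, hidden = [], [], []
    for node in nodes:
        for fabric in fabrics:
            path = (node, fabric)
            if node == owner:
                optimized.append(path)      # terminates on the LUN owner
            elif node == ha_partner:
                non_optimized.append(path)  # terminates on the HA partner
            else:
                hidden.append(path)         # not reported with SLM
    return optimized, non_optimized, hidden


nodes = ["node1", "node2", "node3", "node4"]
fabrics = ["A", "B"]
opt, non_opt, hidden = reported_paths(nodes, fabrics, owner="node1", ha_partner="node2")
print(len(opt), len(non_opt), len(hidden))  # 2 2 4
```

Without SLM the host would learn all eight paths; with it, the host learns four.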

SLM is enabled by default for new LUN maps from Clustered ONTAP 8.3. For LUN maps created before version 8.3, you can apply it with this command:

lun mapping remove-reporting-nodes

MPIO, ALUA and SLM Example

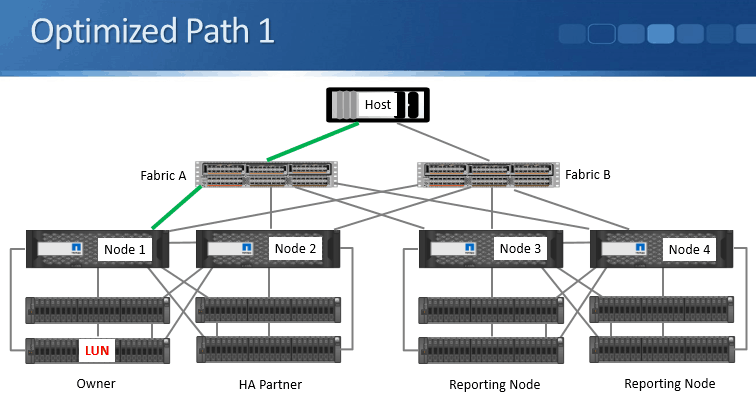

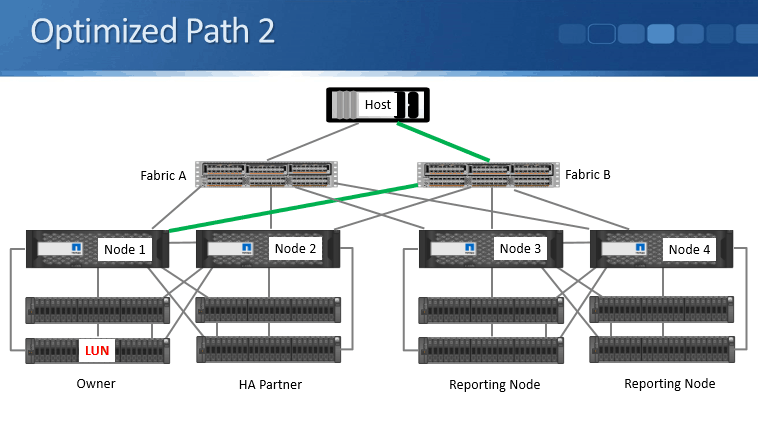

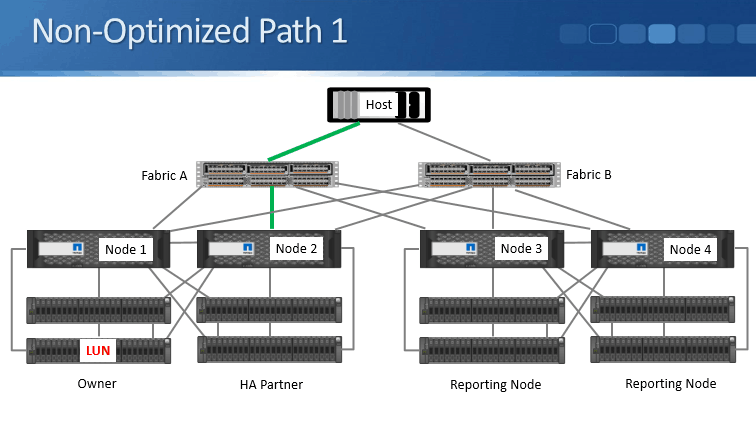

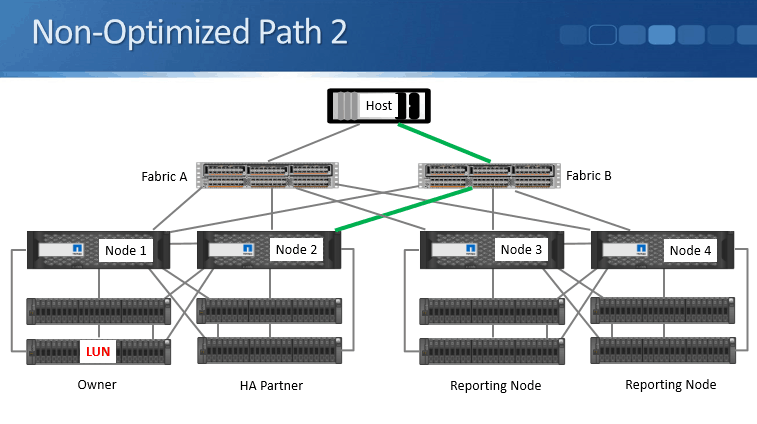

Let's take a look at an example of how MPIO, ALUA, and SLM work. Here we’ve got a four-node cluster, redundant fabrics A and B, and the LUN is owned by Node 1.

Optimized Path 1 shown in bold green in the diagram above terminates on Node 1 and is via the Fabric A switch.

Optimized Path 2 again terminates on Node 1 because it owns the LUN, and is going through Fabric B.

Non-optimized Path 1 is on the HA peer Node 2 and goes via Fabric A.

Non-optimized Path 2 also terminates on the HA peer Node 2 but goes through Fabric B.

With Selective LUN Mapping enabled, as it is by default, the host will learn just those four paths, and it will prefer the optimized paths that terminate on Node 1.

From the MPIO software on the host itself, you'll be able to configure how you want the load balancing and the redundancy to work. For example, you could use Active/Active, or you could use Active/Standby. Typically we're going to prefer to use Active/Active and load balance over both optimized paths.

Using the NetApp DSM, there are six load balancing policies that can be used for both Fibre Channel and iSCSI paths:

- Least Queue Depth

- Least Weighted Paths

- Round Robin

- Round Robin with Subset

- Failover Only

- Auto Assigned

NetApp DSM Load Balancing Options

The first one is Least Queue Depth, which is the default policy. I/O is sent Active/Active, preferring the optimized path with the smallest current outstanding queue. This policy works as Active/Standby if the LUN is 2TB or larger, so it is a good default for LUNs smaller than 2TB. For LUNs of 2TB or larger, it’s better to use one of the other options.
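
As a rough illustration of the Least Queue Depth idea, the Python sketch below sends each new I/O to the path with the fewest outstanding requests. The path names and starting queue depths are made up, no I/O completes during the run, and the real DSM's behavior is certainly more involved.

```python
def pick_least_queue_depth(outstanding):
    """Return the path with the fewest outstanding I/Os (first one wins a tie)."""
    return min(outstanding, key=outstanding.get)


# Hypothetical starting queue depths on the two optimized paths.
outstanding = {"node1-fabricA": 4, "node1-fabricB": 1}

sent = []
for _ in range(5):
    path = pick_least_queue_depth(outstanding)
    outstanding[path] += 1  # the new I/O is now outstanding on that path
    sent.append(path)

print(sent)
print(outstanding)
```

The lightly loaded path absorbs new I/O until both queues are level, after which the work alternates.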

The next available policy is Least Weighted Paths. Here, the administrator assigns a weight to each available path. I/O is sent Active/Standby, preferring the optimized path with the lowest weight value. Typically we're going to want to use Active/Active rather than Active/Standby to get the best use of our available paths.
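
A minimal Python sketch of that rule follows, assuming made-up weights on the two optimized paths. It only shows the selection logic (lowest weight wins, and the next lowest takes over on failure), not the DSM's implementation.

```python
def least_weighted_path(weights, available):
    """Return the available path with the lowest administrator-assigned weight."""
    candidates = [p for p in weights if p in available]
    if not candidates:
        raise RuntimeError("no available paths")
    return min(candidates, key=lambda p: weights[p])


# Hypothetical weights set by the administrator.
weights = {"node1-fabricA": 1, "node1-fabricB": 2}
available = set(weights)

active = least_weighted_path(weights, available)

# Simulate failure of the active path; the standby path takes over.
available.discard(active)
takeover = least_weighted_path(weights, available)

print("active:", active)
print("after failure:", takeover)
```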

The next available option is Round Robin. All optimized paths to the storage system are used Active/Active when they are available.

Next is Round Robin with Subset. Here, the administrator defines a subset of paths. I/O is Round Robin load balanced between them. This is recommended to be used for 2TB LUNs or larger. With Round Robin with Subset, you can also use non-optimized as well as optimized paths, but it's not recommended to do that because then you'll have some of the traffic going over the cluster interconnect.
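
Round Robin with Subset can be pictured as a strict rotation over the administrator's chosen paths. The Python sketch below assumes hypothetical path names and a subset containing only the optimized paths, in line with the recommendation above.

```python
from itertools import cycle, islice

# Hypothetical paths reported to the host for one LUN.
all_paths = ["node1-fabricA", "node1-fabricB", "node2-fabricA", "node2-fabricB"]

# Administrator-defined subset: here, the optimized paths only.
subset = ["node1-fabricA", "node1-fabricB"]
assert set(subset) <= set(all_paths)


def round_robin(paths, io_count):
    """Assign io_count consecutive I/Os to the given paths in strict rotation."""
    return list(islice(cycle(paths), io_count))


schedule = round_robin(subset, 5)
print(schedule)
```

Adding the Node 2 paths to `subset` would spread I/O over them as well, which is exactly the case that sends traffic over the cluster interconnect.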

Our next option is Failover Only. This is an Active/Standby option. The administrator defines the active path. Again, it can use non-optimized paths, but that's not recommended.

The last available option is Auto Assigned. For each LUN, only one path is chosen at a time. This is another Active/Standby option.

Windows Native DSM Load Balancing Options

The load balancing options for the Windows native DSM are similar: we have Failover, Round Robin, Round Robin with a subset of paths, Dynamic Least Queue Depth, Weighted Path, or Least Blocks.

All of these other than Least Blocks are also available with the NetApp DSM. With Least Blocks, I/O is sent down the path with the fewest data blocks that are currently being processed. This is another Active/Active option.
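
The difference between counting requests and counting blocks is easiest to see side by side. In this Python sketch the pending request sizes are invented for illustration, and the two functions are simplified stand-ins for the policies rather than the Windows DSM's code.

```python
# Hypothetical pending I/Os per path, each expressed as a size in blocks.
pending = {
    "node1-fabricA": [2048, 2048],     # two large requests
    "node1-fabricB": [8, 8, 8, 8, 8],  # five small requests
}


def by_queue_depth(pending):
    """Pick the path with the fewest outstanding requests."""
    return min(pending, key=lambda p: len(pending[p]))


def by_least_blocks(pending):
    """Pick the path with the fewest outstanding blocks."""
    return min(pending, key=lambda p: sum(pending[p]))


print("least queue depth picks:", by_queue_depth(pending))
print("least blocks picks:     ", by_least_blocks(pending))
```

With mixed I/O sizes the two policies can disagree, because a short queue of large requests may still hold more data in flight.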

Additional Resources

NetApp Clustered ONTAP, iSCSI, and MPIO with Server 2012

Installing and Configuring Host Utilities
