In this NetApp tutorial, you’ll learn about FlexGroups. FlexGroups are an ONTAP 9 feature that lets you create volumes much larger than a normal FlexVol allows. They also improve performance. Scroll down for the video and the text tutorial.

NetApp FlexGroups Video Tutorial


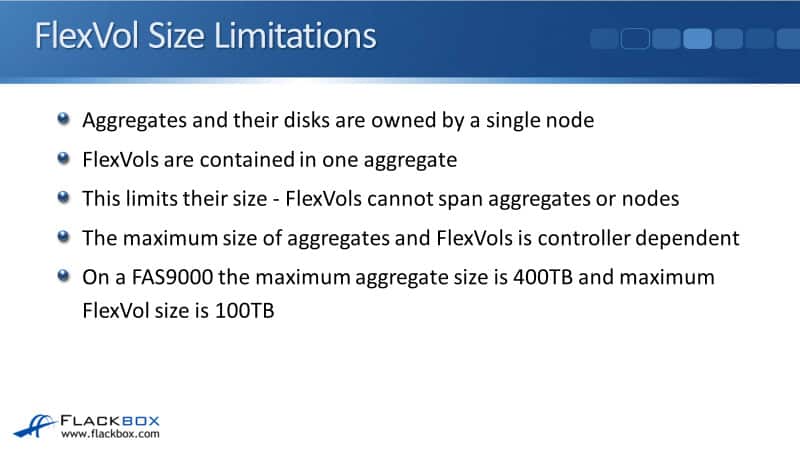

FlexVol Size Limitations

Our normal FlexVols are limited in size. Aggregates and their disks are owned by a single node, and FlexVols are contained in one aggregate. So, that limits their size. FlexVols cannot span aggregates or nodes. They're limited to the size of the aggregate they're in, on the node that they are on.

The maximum size of aggregates and FlexVols is controller dependent. On a FAS9000, for example, the maximum aggregate size is 400TB and the maximum FlexVol size is 100TB.

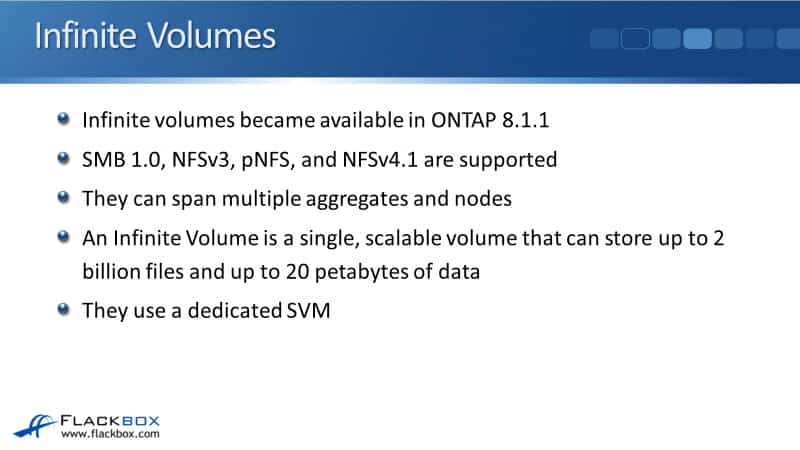

Infinite Volumes

Infinite Volumes came out back in ONTAP 8.1.1 to overcome that size limitation of flexible volumes. The way that they do that is that they can span multiple aggregates. A normal FlexVol goes in one aggregate while an Infinite Volume can span across multiple aggregates, across multiple nodes which allows an Infinite Volume to be much larger than a FlexVol could be.

With Infinite Volumes, the protocols that are supported are SMB 1.0, NFSv3, pNFS, and NFSv4.1. An Infinite Volume is a single scalable volume that can store up to 2 billion files and up to 20 petabytes of data. It is so much larger than a normal flexible volume because it does span those aggregates and nodes.

Infinite Volumes use a dedicated SVM. If you're going to use this feature, you have to create an SVM that is used specifically for the Infinite Volume. There's a one-to-one relationship between that Infinite Volume and its SVM.

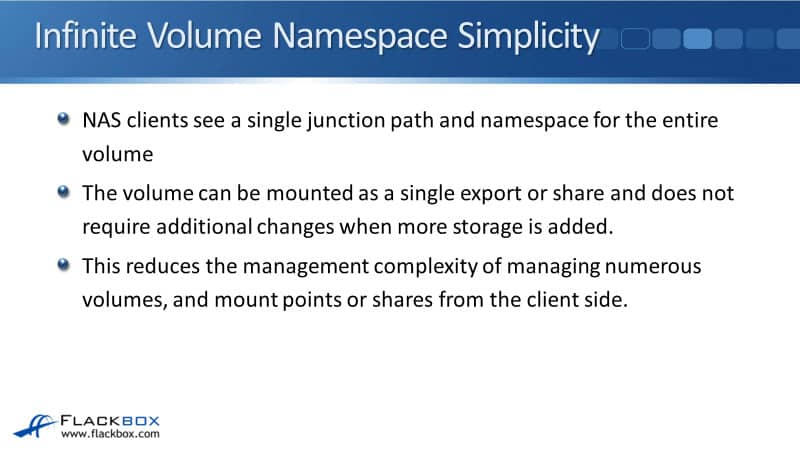

Infinite Volume Namespace Simplicity

When you're using Infinite Volumes, the NAS clients see a single junction path and Namespace for the entire volume. Infinite Volumes are for NAS only and SAN is not supported. If you're using Infinite Volumes, you can't use SAN at all on that particular cluster. It's going to be NAS only. You can have other SVMs on there with normal flexible volumes, but you can't have SAN.

With Infinite Volumes, the volume can be mounted as a single export for our NFS clients, or a single share for SMB clients, and does not require additional changes when more storage is added. That reduces the complexity of managing numerous volumes and mount points or shares from the client side.

Most of the things you're going to configure in ONTAP, like your Snapshots, also your SnapMirror, replication, et cetera, are managed at the volume level. A lot of volumes can mean a lot of management. If you use an Infinite Volume, that's managed as a single volume, which can make the management easier.

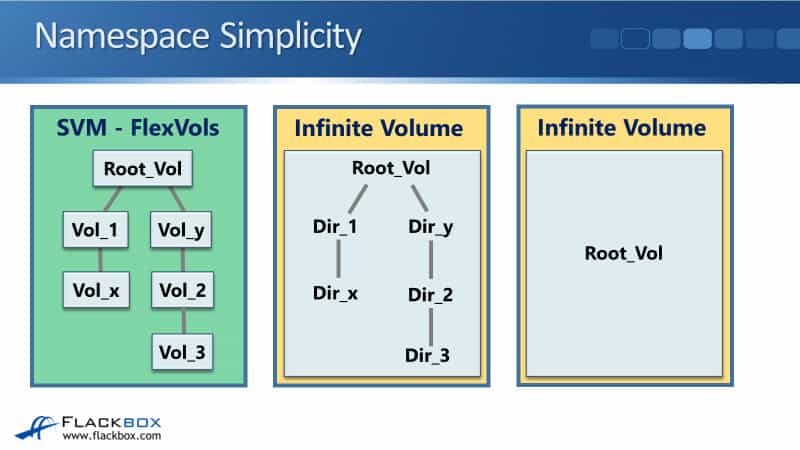

Namespace Simplicity

Let's have a look and see how this simplifies the Namespace. First up, let's look at where we're using our normal FlexVols. Here I've got a normal SVM using normal FlexVols. It's got its root volume and I've got several volumes hanging off of it to build the Namespace. These volumes can be in different aggregates, on different nodes throughout the cluster.

Then, let's say that we configure an Infinite Volume. We could build the same directory structure here, but rather than using separate volumes, we are using separate directories inside that one Infinite Volume. So when we were using FlexVols, we had 6 volumes to manage.

Now, we've just got a single volume to manage. It's got a lot of data in there, which is more than we could fit into a single aggregate but we can do that with a single volume now by using an Infinite Volume.

Another use for an Infinite Volume would be if you want a huge, flat Namespace on a volume that is bigger than a FlexVol would support. You can create an Infinite Volume that spans multiple aggregates. It's a single volume with a single directory structure: a huge, flat directory, if we want to store a huge amount of data in there.

Space Efficiency

We also get additional space efficiency because Infinite Volumes support thin provisioning, deduplication, and compression. You can make it huge, spanning across multiple aggregates.

You can also make it appear to be bigger than the actual underlying physical space by using thin provisioning. You can also squeeze more into there, by using deduplication and compression. It can be expanded non-disruptively if you do want to make it larger later on as well.

Infinite Volume Constituents

Let's look at the way that Infinite Volumes work. Infinite Volumes are made up of constituent volumes. They have got the data constituents and there's also a single Namespace constituent that maps directory information and file names to the file's physical data location. The Infinite Volume is spanning across multiple aggregates.

So how do we know which actual aggregate a file is on? The Namespace constituent takes care of that. Because there's a single Namespace constituent and it's critical to the Infinite Volume working, we need to have a backup of it. That is the Namespace mirror constituent, a backup copy of the Namespace constituent.

The way that it works with the constituents is that files are automatically distributed on ingest. As they're received by the storage system, they're spread across all the data constituents to balance the space allocation. A single file is allocated to a single data constituent.

That's a single volume in a single aggregate. A file is not striped across constituents. However, the constituent sits on top of an underlying aggregate, and that aggregate does have RAID configured, so the data is striped at the aggregate level. But at the file level, it goes into a single constituent.
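The whole-file placement described above can be sketched as a toy model. This is a minimal illustration in Python, not ONTAP's actual placement logic: the "pick the least-used constituent" policy, the constituent names, and the file sizes are all assumptions made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class DataConstituent:
    """One data constituent: a volume that lives in a single aggregate."""
    name: str
    aggregate: str
    used_gb: float = 0.0
    files: list = field(default_factory=list)


def place_file(constituents, filename, size_gb):
    """Store the whole file in one constituent (assumed policy: least used)."""
    target = min(constituents, key=lambda c: c.used_gb)
    target.files.append(filename)
    target.used_gb += size_gb
    return target


# Hypothetical constituents spread over three aggregates.
constituents = [
    DataConstituent("data_1", "Aggr2"),
    DataConstituent("data_2", "Aggr3"),
    DataConstituent("data_3", "Aggr6"),
]

# Hypothetical incoming files as (name, size in GB).
incoming = [("a.dat", 40), ("b.dat", 10), ("c.dat", 25), ("d.dat", 5), ("e.dat", 20)]
placement = {name: place_file(constituents, name, size).name for name, size in incoming}

for c in constituents:
    print(c.name, c.aggregate, c.used_gb, c.files)
```

Each file ends up in exactly one constituent, and the space used stays roughly even across them, which is the behavior the text describes.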

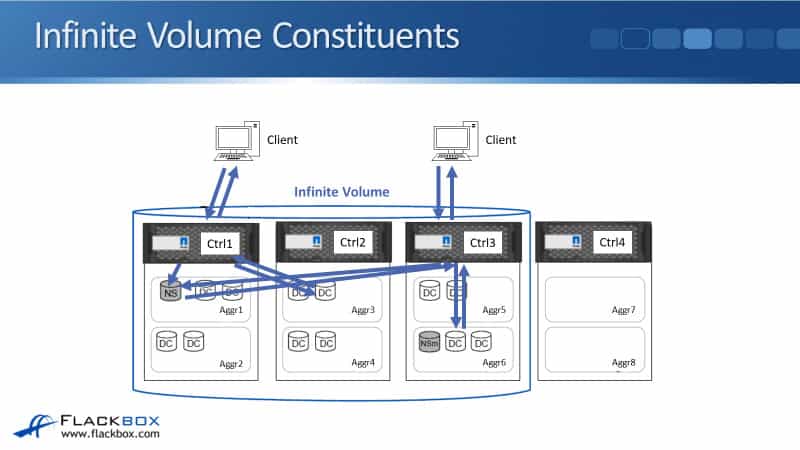

In this example, I have got an Infinite Volume that is in an SVM, which is dedicated for it. We've configured this Infinite Volume to use our nodes CTRL1, CTRL2, and CTRL3. We've also got CTRL4 in our cluster here. That can have different SVMs on there, which are using normal flexible volumes.

You can see that it's got a single Namespace constituent. In our example, that's on Aggr1 owned by CTRL1. We've got the Namespace Mirror, which is on Aggr6, owned by CTRL3. Across all three of those controllers, we have got our data constituents that are going to be hosting the files. Then a client, up in the top left, sends in a read request for a file that is in the Infinite Volume, and that hits CTRL1.

When connections are coming in over the network, whether you're using Infinite Volumes or normal FlexVols, it’s normal that they are going to be load-balanced across all of the different controllers. So, when the client sent that connection, it could have hit CTRL1, 2, 3, or 4.

In this example, it just so happens that the client has hit CTRL1. That is where the Namespace constituent is. CTRL1 will query its Namespace constituent to find out where that actual file is. It learns that the file is in a data constituent on Aggr3. It fetches it over the cluster interconnect from Aggr3. That goes back to CTRL1, and CTRL1 can then send it back to the client.

Now, while this is going on, you're not going to just have one client using that Infinite Volume. You're going to have multiple connections happening at the same time. So while that was going on, another client shown over on the right sends in a read request as well. That got load-balanced over the network to CTRL3 in our example.

CTRL3 queries the Namespace constituent on CTRL1 to find out where the file is located. In this example, it is on Aggr6. CTRL3 gets it from there and sends it back to the client. You're going to have multiple connections happening at the same time, and you can see that the Namespace constituent is going to be queried every time.
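The two reads in this example can be modeled in a few lines. The sketch below is a simplified Python illustration, not how ONTAP is implemented: the class, the file paths, and the hop labels are hypothetical. It only shows that every read, no matter which controller receives it, goes through the one Namespace constituent.

```python
class NamespaceConstituent:
    """Toy stand-in for the single Namespace constituent (hosted by CTRL1 here)."""

    def __init__(self, locations):
        self.locations = locations  # file path -> aggregate holding the data
        self.lookups = 0            # how many times it has been queried

    def locate(self, path):
        self.lookups += 1
        return self.locations[path]


def read_file(receiving_node, path, namespace):
    """Return the hops a read takes: receiving node, namespace query, data aggregate."""
    aggregate = namespace.locate(path)
    return [receiving_node, "namespace@CTRL1", aggregate]


# Hypothetical file paths; the aggregates match the example in the text.
namespace = NamespaceConstituent({
    "/projects/a.dat": "Aggr3",
    "/projects/b.dat": "Aggr6",
})

first = read_file("CTRL1", "/projects/a.dat", namespace)
second = read_file("CTRL3", "/projects/b.dat", namespace)

print(first)
print(second)
print("namespace lookups:", namespace.lookups)
```

Two reads produce two lookups against the same constituent, so the lookup count grows with the total number of client requests across the whole cluster.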

Infinite Volume Performance Limitation

Infinite Volumes have got limited performance because of that single Namespace constituent which can become a bottleneck.

Metadata operations operate in a single serial CPU thread, which is going to limit performance because you've got all those concurrent connections coming in at the same time. Metadata intensive workloads with high file counts are affected the worst by the limitation.

FlexGroups

Up to this point, I've been talking about Infinite Volumes, but the lecture is about FlexGroups. So you've maybe been thinking, "Okay. What gives?" Why have I been talking about Infinite Volumes? The reason is that Infinite Volumes were the first solution to the problem. They were available in ONTAP 8.

FlexGroups are now an improved solution to that same problem of having large volumes. FlexGroups were introduced in ONTAP 9.1. They are supported on FAS, AFF, and ONTAP Select platforms. However, they are not supported on the cloud platforms.

The protocols that are supported are NFSv3, SMB2.x, and SMB3.x. The supported protocols are a bit different than they were for Infinite Volumes. Right now, as I'm recording this, NFS version 4.1 is not supported with FlexGroups yet, but SMB2 and 3 are supported, which were not supported on Infinite Volumes.
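To make the protocol difference easy to see, the two lists from this tutorial can be compared as sets. This is just a small Python sketch using the protocol names exactly as stated in the text at the time of recording; check the current NetApp documentation for what your ONTAP version supports.

```python
# Protocol lists as stated in this tutorial (at the time of recording).
INFINITE_VOLUME_PROTOCOLS = {"SMB 1.0", "NFSv3", "pNFS", "NFSv4.1"}
FLEXGROUP_PROTOCOLS = {"NFSv3", "SMB2.x", "SMB3.x"}

common = INFINITE_VOLUME_PROTOCOLS & FLEXGROUP_PROTOCOLS
only_infinite_volume = INFINITE_VOLUME_PROTOCOLS - FLEXGROUP_PROTOCOLS
only_flexgroup = FLEXGROUP_PROTOCOLS - INFINITE_VOLUME_PROTOCOLS

print("Both:", sorted(common))
print("Infinite Volumes only:", sorted(only_infinite_volume))
print("FlexGroups only:", sorted(only_flexgroup))
```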

FlexGroups vs Infinite Volumes

I also talked about Infinite Volumes first because FlexGroups are very similar to Infinite Volumes. They span multiple aggregates and nodes so our volumes can be a larger size. NAS clients see a single junction path and Namespace for the entire FlexGroup volume, the same as it worked with Infinite Volumes.

A FlexGroup can be larger than the available physical storage by using thin provisioning. It supports space efficiency with thin provisioning, deduplication, and compression. It can be expanded non-disruptively, the same as Infinite Volumes.

FlexVols are the building blocks of a NetApp FlexGroup volume. They are the equivalent of the constituents in our Infinite Volumes. Each FlexGroup contains several member FlexVol volumes, just like our Infinite Volumes had several constituents spread across the different aggregates.

Files are distributed across all member volumes to balance the space allocation. That's going to be automatically done on ingest. A single file is allocated to a single member volume and it's not striped across them. However, those volumes are on top of underlying aggregates, which do have RAID configured on them.

Okay. So those were the similarities. Let's look now at the differences between FlexGroups and Infinite Volumes and why FlexGroups are better. With FlexGroups, they're tested up to 400 billion files and 20 petabytes of data.

With Infinite Volumes, that was up to 2 billion files. That’s a big difference there. This is just what they've been tested up to, they can actually go larger than that. The limitation depends on the hardware that your cluster is running on.

There is no Namespace constituent, which was the bottleneck in Infinite Volumes. So that's the big difference and that's where the performance improvement comes from. Member volumes dynamically balance space and load allocation, including metadata access, evenly among the members. That did not happen with Infinite Volumes.

Another difference is you can have multiple FlexGroups in the same SVM. There's not that one to one relationship that there was between an Infinite Volume and an SVM.

FlexGroup Member Volumes

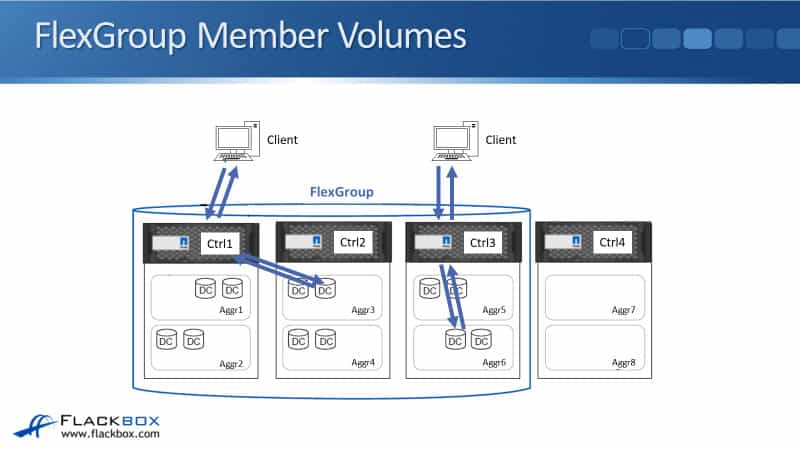

This diagram looks really similar to what I used for Infinite Volumes before. The difference is we do not have the Namespace constituent there. So what happens now is a client over on the left sends in a read request. That happens to hit CTRL1.

With our network load-balancing, it could have hit any of the controllers in the cluster. CTRL1 fetches that from Aggr3 on CTRL2 and sends it back to the client.

Then at the same time, the client over on the right sends in a read request that happens to hit CTRL3. CTRL3 fetches that from Aggr6 and sends the read back to the client. Writes are going to work similarly as well.

You can see the difference: we've taken out the Namespace constituent, so we don't have that single bottleneck that all the traffic has to go through.

FlexGroup Performance

There is no Namespace constituent. FlexGroups dynamically balance space and load allocation, including metadata access across multiple hardware assets and CPU cores to perform at a higher performance threshold. Operations are performed concurrently across multiple member FlexVol volumes, aggregates, and nodes.

Therefore, you're getting the best use of your distributed storage system. You're using all of those different distributed hardware resources. Everything is running in parallel and distributed. You get better performance that way. Better performance, not just compared to Infinite Volumes, but compared to your normal flexible volumes as well.

Now, something to mention here is that with flexible volumes, you're still going to be load balancing those incoming network connections. However, you're not going to get the same level of distribution across all the different hardware components in the cluster that you do with FlexGroups.

Because of the load balancing, FlexGroups are recommended for performance, not just size. With Infinite Volumes, you would only use those for the size, but with FlexGroups, you get performance improvement as well. FlexGroups provide a performance gain compared to Infinite Volumes and FlexVols.

They're recommended for the ideal use cases where the volume is 20TB or larger. Metadata-intensive workloads with many files being written concurrently get the biggest boost. This is the opposite of Infinite Volumes, where those workloads were affected the worst.

Ideal Use Cases

What were those ideal use cases? They are unstructured NAS data. Again, just like Infinite Volumes, our FlexGroups are NAS protocols only. No support for SAN. So a good example of unstructured NAS data that can take up a lot of space would be your home directories. Also for big data, for example, Hadoop if you're using the NetApp NFS connector.

It is also ideal for software build and test environments, electronic design automation, log file repositories, media asset or regulated archives, file streaming workflows, and seismic or oil and gas data. Seismic and oil and gas workloads can involve a huge amount of data, so FlexGroups are great for that.

Non-Ideal Use Cases

Where is FlexGroup not such a good idea? Well, where any file takes up over 50% of the member volume size. FlexGroups get that big performance improvement because everything is so distributed. Well, if there's a single file, which takes up over 50% of the member volume size, it's not so distributed now and that's just not going to work well with FlexGroups.
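The 50% rule above is easy to check with a little arithmetic. Here is a minimal Python sketch; the function name and the example sizes are made up for illustration, and the 0.5 threshold is the figure given in this tutorial.

```python
def file_is_too_large(file_size_tb, member_volume_size_tb, threshold=0.5):
    """Return True when one file would take up more than `threshold`
    (50% by default) of a single member volume."""
    return file_size_tb > threshold * member_volume_size_tb


# Hypothetical example: member volumes of 10TB each.
print(file_is_too_large(6, 10))   # a 6TB file is over half of a 10TB member
print(file_is_too_large(2, 10))   # a 2TB file fits comfortably
```

Because each file lives whole in one member volume, it is the member volume size that matters here, not the total FlexGroup size.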

Also, virtualized workloads, such as VMware, are not recommended for use with FlexGroups. Where particular files need to be mapped to a specific FlexVol or specific location, FlexGroups are not a good match either.

We mentioned earlier that as the files are coming in, they're going to be automatically load-balanced across all of the member volumes and those member volumes are spread across the different aggregates. It's obvious that you want those aggregates to have the same performance characteristics. You don't want one of them to be on SSDs and another one on HDDs.

If you do have a situation where you've got particular files or a particular workload, and you want that to be mapped to a particular volume or a particular location in your cluster layout, then a FlexVol would be a better choice than a FlexGroup. Again, with FlexGroups the data is going to be automatically distributed throughout all the members. You don't have control of where it is going to go.

Another place where you wouldn't use FlexGroup is if you required a feature that is not supported on FlexGroup. You can check the documentation on the NetApp website to see what features are and are not supported. This is something that's going to be updated frequently. So, rather than me telling you what's available right now, just check online to see what is available.

Deploying FlexGroups

When you do deploy the FlexGroup, the way the layout works is that the default number of member FlexVol volumes in a FlexGroup volume is 8 per node. Member volumes are created with equal size.

When using automatic creation, at least 2 aggregates are required per node when using HDD. If you've only got 1 HDD aggregate per node, you can still use FlexGroup by doing manual creation. If you're using SSD aggregate, then 1 aggregate per node is no problem. With the automatic methods of deployment, that will work there too.
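The aggregate requirement for automatic creation can be written as a simple check. This is a minimal Python sketch of the rule as stated above (2 aggregates per node for HDD, 1 for SSD); the function name and node names are hypothetical, and it does not query a real cluster.

```python
# Minimum aggregates per node for automatic creation, as stated in the text.
MIN_AGGREGATES_PER_NODE = {"HDD": 2, "SSD": 1}


def can_auto_provision(aggregates_per_node, disk_type):
    """Return True if every node has enough aggregates of the given disk type
    for the automatic FlexGroup creation methods."""
    minimum = MIN_AGGREGATES_PER_NODE[disk_type]
    return all(count >= minimum for count in aggregates_per_node.values())


# Hypothetical two node cluster.
print(can_auto_provision({"node1": 2, "node2": 2}, "HDD"))
print(can_auto_provision({"node1": 1, "node2": 2}, "HDD"))
print(can_auto_provision({"node1": 1, "node2": 1}, "SSD"))
```

When the check fails for HDD, manual creation is still an option, as described above.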

Let's say that you have got a two node cluster, and you're going to create a FlexGroup of size 160TB. You have got 2 nodes and there are going to be 8 volumes per node. So, 2 times 8 is 16, which is the number of member volumes that are going to be created. We specified that the size of the FlexGroup is 160TB, so that's going to be 10TB per member volume.

Let's do another example. I'm just going to double the number of nodes. Let's say that rather than a two node cluster, we've now got a four node cluster. And again, we're going to create a FlexGroup of size 160TB. The number of volumes per node is going to be the same. It's still 8. We don't halve it because we've got double the number of nodes.

We've got four nodes now, which have got 8 each. So, 4 times 8 is going to be 32, rather than 16 member volumes. We've got 32 member volumes. The size of the FlexGroup was 160TB. Now each volume is going to be 5TB.

When you create the FlexGroup, you specify the size that you want the FlexGroup to be. The system is going to automatically calculate what size the member volumes will be. The way it does that is by putting 8 member volumes per node. Each member volume is going to be the same size. It just divides to figure out what size to make each of those volumes.
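The sizing arithmetic from the two examples can be captured in a short function. This is a minimal Python sketch of the calculation described above, assuming the default of 8 member volumes per node; the function name is made up for illustration.

```python
MEMBERS_PER_NODE = 8  # default for automatic creation, as stated in the text


def flexgroup_layout(nodes, flexgroup_size_tb, members_per_node=MEMBERS_PER_NODE):
    """Return (number of member volumes, size of each member in TB)."""
    member_count = nodes * members_per_node
    member_size_tb = flexgroup_size_tb / member_count
    return member_count, member_size_tb


two_node = flexgroup_layout(nodes=2, flexgroup_size_tb=160)
four_node = flexgroup_layout(nodes=4, flexgroup_size_tb=160)

print(two_node)   # 16 members of 10TB each
print(four_node)  # 32 members of 5TB each
```

Doubling the number of nodes doubles the member count and halves the member size, because the 8 per node stays fixed.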

The last thing to tell you is the commands for deploying FlexGroups. The original command to deploy FlexGroups was:

flexgroup deploy

Then in ONTAP 9.2, a new command became available, which is:

volume create -auto-provision-as flexgroup

From ONTAP 9.2, if you run the ‘flexgroup deploy’ command, it will let you do it, but it will give you a warning that it's a deprecated command. You're meant to use the new command now. That new command has got more control when you run it because it's got more fields available that you can configure, including nodes. You can specify the nodes that you want to be included for this FlexGroup.

The last way you can do it is with the command:

volume create

without using the ‘-auto-provision-as flexgroup’ field. If you do that, it allows you to manually specify the aggregates to be used. With the ‘volume create -auto-provision-as flexgroup’ command, you can specify the nodes.

You get even more granularity with ‘volume create’ and you can actually specify down to the aggregate level when you do that. FlexGroups can also be deployed in the GUI.

NetApp FlexGroups Tutorial Configuration Example

This configuration example is an excerpt from my ‘NetApp ONTAP 9 Complete’ course. Full configuration examples using both the CLI and System Manager GUI are available in the course.


1. Create three SVMs, named FG1, FG2, and FG3. Use the UNIX security style and accept all default settings.

UNIX is the default security style so does not need to be explicitly configured.

cluster1::> vserver create -vserver FG1

[Job 51] Job succeeded:

Vserver creation completed.

cluster1::> vserver create -vserver FG2

[Job 52] Job succeeded:

Vserver creation completed.

cluster1::> vserver create -vserver FG3

[Job 53] Job succeeded:

Vserver creation completed.

2. If using the GUI, skip ahead to step 14.

3. In the next step you will use the ‘flexgroup deploy’ command to create a thin provisioned 16TB FlexGroup. How many FlexVol members will be created and what will be their size?

Sixteen FlexVol members will be created, eight on each node. Their size will be 1TB each.

4. Use the ‘flexgroup deploy’ automatic method to create a thin provisioned 16TB FlexGroup named FlexGroup1 in the FG1 SVM.

cluster1::> flexgroup deploy -vserver FG1 -size 16TB -space-guarantee none -volume FlexGroup1

(volume flexgroup deploy)

Notice: This command is deprecated. Use the "volume create -auto-provision-as flexgroup" command instead.

Warning: The export-policy "default" has no rules in it. The volume will therefore be inaccessible over NFS and CIFS protocol.

Do you want to continue? {y|n}: y

Notice: FlexGroup deploy will perform the following tasks:

The FlexGroup "FlexGroup1" will be created with the following number of constituents of size 1TB: 16. The constituents will be created on the following aggregates:

aggr2_C1N1, aggr2_C1N2, aggr3_C1N1, aggr4_C1N2

Do you want to continue? {y|n}: y

[Job 54] Job succeeded: The FlexGroup "FlexGroup1" was successfully created on Vserver "FG1" and the following aggregates: aggr2_C1N1,aggr2_C1N2,aggr3_C1N1,aggr4_C1N2

5. Verify the FlexGroup is created.

cluster1::> volume show

Vserver Volume Aggregate State Type Size Available Used%

--------- ------------ ------------ ---------- ---- ---------- ---------- -----

DeptA DeptA_root aggr1_C1N1 online RW 20MB 18.71MB 1%

DeptA DeptA_vol1 aggr1_C1N1 online RW 100MB 94.68MB 0%

DeptB DeptB_root aggr1_C1N2 online RW 20MB 18.68MB 1%

FG1 FlexGroup1 - online RW 16TB 10.30GB 0%

FG1 svm_root aggr2_C1N2 online RW 20MB 18.75MB 1%

FG2 svm_root aggr3_C1N2 online RW 20MB 18.76MB 1%

FG3 svm_root aggr4_C1N1 online RW 20MB 18.76MB 1%

cluster1-01 vol0 aggr0_cluster1_01 online RW 1.66GB 1.10GB 29%

cluster1-02 vol0 aggr0_cluster1_02 online RW 807.3MB 469.0MB 38%

9 entries were displayed.

6. Check where the FlexVol members are located. How many FlexVol members were created on each aggregate?

cluster1::> volume show -vserver FG1 -is-constituent true

Vserver Volume Aggregate State Type Size Available Used%

--------- ------------ ------------ ---------- ---- ---------- ---------- -----

FG1 FlexGroup1__0001 aggr2_C1N1 online RW 1TB 2.47GB 0%

FG1 FlexGroup1__0002 aggr2_C1N1 online RW 1TB 2.47GB 0%

FG1 FlexGroup1__0003 aggr2_C1N1 online RW 1TB 2.47GB 0%

FG1 FlexGroup1__0004 aggr2_C1N1 online RW 1TB 2.47GB 0%

FG1 FlexGroup1__0005 aggr2_C1N2 online RW 1TB 2.45GB 0%

FG1 FlexGroup1__0006 aggr2_C1N2 online RW 1TB 2.45GB 0%

FG1 FlexGroup1__0007 aggr2_C1N2 online RW 1TB 2.45GB 0%

FG1 FlexGroup1__0008 aggr2_C1N2 online RW 1TB 2.45GB 0%

FG1 FlexGroup1__0009 aggr3_C1N1 online RW 1TB 2.46GB 0%

FG1 FlexGroup1__0010 aggr3_C1N1 online RW 1TB 2.46GB 0%

FG1 FlexGroup1__0011 aggr3_C1N1 online RW 1TB 2.46GB 0%

FG1 FlexGroup1__0012 aggr3_C1N1 online RW 1TB 2.46GB 0%

FG1 FlexGroup1__0013 aggr4_C1N2 online RW 1TB 2.47GB 0%

FG1 FlexGroup1__0014 aggr4_C1N2 online RW 1TB 2.47GB 0%

FG1 FlexGroup1__0015 aggr4_C1N2 online RW 1TB 2.47GB 0%

FG1 FlexGroup1__0016 aggr4_C1N2 online RW 1TB 2.47GB 0%

16 entries were displayed.

There are four member FlexVols on each of the aggregates used.

7. In the next step you will use the ‘volume create -auto-provision-as flexgroup’ command to create a thin provisioned 32TB FlexGroup. How many FlexVol members will be created and what will be their size?

Sixteen FlexVol members will be created, eight on each node. Their size will be 2TB each.

8. Use the ‘-auto-provision-as flexgroup’ automatic method to create a thin provisioned 32TB FlexGroup named FlexGroup2 in the FG2 SVM.

cluster1::> volume create -auto-provision-as flexgroup -vserver FG2 -size 32TB -space-guarantee none -volume FlexGroup2

Notice: The FlexGroup "FlexGroup2" will be created with the following number of constituents of size 2TB: 16. The constituents will be created on the following HDD aggregates:

aggr1_C1N1, aggr1_C1N2, aggr3_C1N2, aggr4_C1N1

Do you want to continue? {y|n}: y

[Job 56] Job succeeded: Successful

9. Verify the FlexGroup is created.

cluster1::> volume show

Vserver Volume Aggregate State Type Size Available Used%

--------- ------------ ------------ ---------- ---- ---------- ---------- -----

DeptA DeptA_root aggr1_C1N1 online RW 20MB 18.71MB 1%

DeptA DeptA_vol1 aggr1_C1N1 online RW 100MB 94.67MB 0%

DeptB DeptB_root aggr1_C1N2 online RW 20MB 18.68MB 1%

FG1 FlexGroup1 - online RW 16TB 10.30GB 0%

FG1 svm_root aggr2_C1N2 online RW 20MB 18.75MB 1%

FG2 FlexGroup2 - online RW 32TB 10.15GB 0%

FG2 svm_root aggr3_C1N2 online RW 20MB 18.76MB 1%

FG3 svm_root aggr4_C1N1 online RW 20MB 18.76MB 1%

cluster1-01 vol0 aggr0_cluster1_01 online RW 1.66GB 1.08GB 31%

cluster1-02 vol0 aggr0_cluster1_02 online RW 807.3MB 460.5MB 39%

10 entries were displayed.

10. Check where the FlexVol members are located. How many FlexVol members were created on each aggregate? Were the same aggregates used as for FlexGroup1?

cluster1::> volume show -vserver FG2 -is-constituent true

Vserver Volume Aggregate State Type Size Available Used%

--------- ------------ ------------ ---------- ---- ---------- ---------- -----

FG2 FlexGroup2__0001 aggr1_C1N2 online RW 2TB 2.45GB 0%

FG2 FlexGroup2__0002 aggr1_C1N2 online RW 2TB 2.45GB 0%

FG2 FlexGroup2__0003 aggr3_C1N2 online RW 2TB 2.45GB 0%

FG2 FlexGroup2__0004 aggr4_C1N1 online RW 2TB 2.45GB 0%

FG2 FlexGroup2__0005 aggr1_C1N1 online RW 2TB 2.35GB 0%

FG2 FlexGroup2__0006 aggr1_C1N1 online RW 2TB 2.35GB 0%

FG2 FlexGroup2__0007 aggr3_C1N2 online RW 2TB 2.45GB 0%

FG2 FlexGroup2__0008 aggr1_C1N1 online RW 2TB 2.35GB 0%

FG2 FlexGroup2__0009 aggr1_C1N2 online RW 2TB 2.45GB 0%

FG2 FlexGroup2__0010 aggr3_C1N2 online RW 2TB 2.45GB 0%

FG2 FlexGroup2__0011 aggr4_C1N1 online RW 2TB 2.45GB 0%

FG2 FlexGroup2__0012 aggr1_C1N1 online RW 2TB 2.35GB 0%

FG2 FlexGroup2__0013 aggr4_C1N1 online RW 2TB 2.45GB 0%

FG2 FlexGroup2__0014 aggr4_C1N1 online RW 2TB 2.45GB 0%

FG2 FlexGroup2__0015 aggr3_C1N2 online RW 2TB 2.45GB 0%

FG2 FlexGroup2__0016 aggr1_C1N2 online RW 2TB 2.45GB 0%

16 entries were displayed.

There are four member FlexVols on each of the aggregates used. Different aggregates were used than for FlexGroup1.

11. Create a thin provisioned 16TB FlexGroup named FlexGroup3 in the FG3 SVM using aggr1_C1N1 and aggr1_C1N2 only.

You need to use the manual ‘volume create’ method without ‘-auto-provision-as flexgroup’ to specify the individual aggregates to use.

cluster1::> volume create -vserver FG3 -volume FlexGroup3 -aggr-list aggr1_C1N1,aggr1_C1N2 -size 16TB -space-guarantee none

Notice: The FlexGroup "FlexGroup3" will be created with the following number of constituents of size 2TB: 8.

Do you want to continue? {y|n}: y

[Job 57] Job succeeded: Successful

12. Verify the FlexGroup is created.

cluster1::> volume show

Vserver Volume Aggregate State Type Size Available Used%

--------- ------------ ------------ ---------- ---- ---------- ---------- -----

DeptA DeptA_root aggr1_C1N1 online RW 20MB 18.71MB 1%

DeptA DeptA_vol1 aggr1_C1N1 online RW 100MB 94.67MB 0%

DeptB DeptB_root aggr1_C1N2 online RW 20MB 18.68MB 1%

FG1 FlexGroup1 - online RW 16TB 10.07GB 0%

FG1 svm_root aggr2_C1N2 online RW 20MB 18.74MB 1%

FG2 FlexGroup2 - online RW 32TB 10.15GB 0%

FG2 svm_root aggr3_C1N2 online RW 20MB 18.75MB 1%

FG3 FlexGroup3 - online RW 16TB 4.69GB 0%

FG3 svm_root aggr4_C1N1 online RW 20MB 18.76MB 1%

cluster1-01 vol0 aggr0_cluster1_01 online RW 1.66GB 1.06GB 32%

cluster1-02 vol0 aggr0_cluster1_02 online RW 807.3MB 462.3MB 39%

11 entries were displayed.

13. Check where the FlexVol members are located. How many FlexVol members were created and what is their size?

cluster1::> volume show -vserver FG3 -is-constituent true

Vserver Volume Aggregate State Type Size Available Used%

--------- ------------ ------------ ---------- ---- ---------- ---------- -----

FG3 FlexGroup3__0001 aggr1_C1N1 online RW 2TB 2.18GB 0%

FG3 FlexGroup3__0002 aggr1_C1N2 online RW 2TB 2.28GB 0%

FG3 FlexGroup3__0003 aggr1_C1N1 online RW 2TB 2.18GB 0%

FG3 FlexGroup3__0004 aggr1_C1N2 online RW 2TB 2.28GB 0%

FG3 FlexGroup3__0005 aggr1_C1N1 online RW 2TB 2.18GB 0%

FG3 FlexGroup3__0006 aggr1_C1N2 online RW 2TB 2.28GB 0%

FG3 FlexGroup3__0007 aggr1_C1N1 online RW 2TB 2.18GB 0%

FG3 FlexGroup3__0008 aggr1_C1N2 online RW 2TB 2.28GB 0%

8 entries were displayed.

Eight member FlexVols were created, four on each aggregate. Their size is 2TB each. Manual FlexGroup creation overrides the automatic method defaults.

14. Use System Manager to create a thin provisioned 16TB FlexGroup named FlexGroup1 in the FG1 SVM and then verify which aggregates are used by the FlexGroup. Skip this step if you used the CLI to complete this lab exercise.
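The answers to the CLI checks in steps 6, 10, and 13 can be tallied with a short script. This is a Python sketch that uses the aggregate of each member volume copied from the ‘volume show -is-constituent true’ output in this lab; the helper function names are made up, and nothing here talks to a real cluster.

```python
from collections import Counter

# Aggregate hosting each member volume, in member order (0001, 0002, ...),
# copied from the lab output.
flexgroup1 = (["aggr2_C1N1"] * 4 + ["aggr2_C1N2"] * 4
              + ["aggr3_C1N1"] * 4 + ["aggr4_C1N2"] * 4)
flexgroup2 = [
    "aggr1_C1N2", "aggr1_C1N2", "aggr3_C1N2", "aggr4_C1N1",
    "aggr1_C1N1", "aggr1_C1N1", "aggr3_C1N2", "aggr1_C1N1",
    "aggr1_C1N2", "aggr3_C1N2", "aggr4_C1N1", "aggr1_C1N1",
    "aggr4_C1N1", "aggr4_C1N1", "aggr3_C1N2", "aggr1_C1N2",
]
flexgroup3 = ["aggr1_C1N1", "aggr1_C1N2"] * 4


def members_per_aggregate(member_aggregates):
    """Count how many member volumes sit on each aggregate."""
    return dict(Counter(member_aggregates))


def member_size_tb(flexgroup_size_tb, member_aggregates):
    """Equal-size members: FlexGroup size divided by the member count."""
    return flexgroup_size_tb / len(member_aggregates)


for name, size_tb, members in [("FlexGroup1", 16, flexgroup1),
                               ("FlexGroup2", 32, flexgroup2),
                               ("FlexGroup3", 16, flexgroup3)]:
    print(name, members_per_aggregate(members), member_size_tb(size_tb, members), "TB each")

# No aggregate is shared between FlexGroup1 and FlexGroup2 in this lab.
print(set(flexgroup1) & set(flexgroup2))
```

All three FlexGroups end up with four members per aggregate. The manual FlexGroup3 has only 8 members because it was limited to two aggregates, which is why its members are 2TB rather than 1TB.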

Additional Resources

What a FlexGroup volume is: https://docs.netapp.com/us-en/ontap/flexgroup/definition-concept.html

NetApp ONTAP FlexGroup Volumes Technical Overview: https://www.netapp.com/pdf.html?item=/media/7337-tr4557pdf.pdf

Monitor the Space Usage of a FlexGroup Volume: https://docs.netapp.com/us-en/ontap/flexgroup/monitor-space-usage-task.html

Libby Teofilo

Text by Libby Teofilo, Technical Writer at www.flackbox.com
