In this NetApp training tutorial, you’ll learn about the NetApp Data Fabric, which is NetApp’s vision or strategy to integrate all of their storage platforms across their portfolio with each other and also across Cloud services. Scroll down for video and text tutorials.

The NetApp Data Fabric – Overview Video Tutorial

Casey Cockfield

Your NetApp training courses saved my job. All the Engineers left except me, and without your training I would have failed. Without your course, being thrown into NetApp so swiftly as an entire European cluster failed… I would have been in a big big mess! Thank you so much brother… You saved my career!

The point of the NetApp Data Fabric is to ensure that the data can be seamlessly stored and moved between different platforms in the NetApp portfolio and across Cloud services as well, to meet capacity, performance, and data protection needs. The idea is to achieve greater integration across diverse storage and get away from storage silos and lock-in.

NetApp has recognized a problem with a lot of older legacy storage systems because they're standalone systems and they don't integrate with anything else. When these systems get older and you would want to move the data, it would be difficult.

They've also recognized the huge emergence of Cloud services, and it's essential that nowadays, people and companies can move their data where it's needed and when it's needed.

The goals of the Data Fabric are:

- Mobility

- Simplicity

- Automation

- Visibility

- Security

The simplified mobility makes it easier to provision backup on disaster recovery across different platforms and to place data on the most suitable storage platform and media as it moves through its life cycle.

As data gets older, typically, it can be moved to lower performance storage. It's traditionally been normally easy to do that within the same storage platform, but not between different storage platforms. NetApp is working on fixing that now.

Data Mobility Use Cases

For the data mobility use cases, you can move data across those platforms and across Cloud providers as well. So that makes it a lot easier for:

- Backup and Archive

- Disaster Recovery

- Storage Tiering and Lifecycle Management

- Migrating to the Cloud

- Running Analytic Services in the Cloud

- Datacenter consolidation

You want to have mobility because these all require you to move your data around. For your backup and archive and disaster recovery, you want your data to be in an offsite location. With the NetApp Data Fabric, it doesn't have to be the same type of platform that you're moving it to. It can be an entirely different model of storage.

With storage tiering and lifecycle management and migrating to the Cloud or migrating back on premises also requires moving your data. The idea of NetApp Data Fabric is to be as flexible as possible. Let's look at some of the tools and features that are available from the app that helps enable this below.

SnapMirror

Snap Mirror engine is an integrated feature on ONTAP and also on the element OS systems, SolidFire and NetApp HCI. The SnapMirror engine has two parts:

- SnapMirror - Used for disaster recovery.

- SnapVault - A backup feature.

SnapMirror engine has been available for a long time on ONTAP systems. It's also had been ported over to those element OS systems as well.

With SnapMirror, data can be replicated between all the different ONTAP platforms namely, FAS, AFF, ONTAP Select, Virtual Machines, and Cloud Volumes ONTAP. There are no restrictions on moving data between any of those different platforms.

Data can be replicated from SolidFire and NetApp HCI to any ONTAP platform. Right now you can't replicate vice versa, from ONTAP back to SolidFire and NetApp HCI. It is expected that that will be available in a future release though. Data can also be replicated to Cloud Backup, which was formerly known as AltaVault.

Fabric Pool

Fabric Pool is an ONTAP feature so it works on all the different ONTAP platforms and it tiers cold data to object storage.

- Cold Data - Has not been read or written to recently. It is tiered off to lower cost storage.

- Hot Data - Has been touched recently. It stays on SSD drives on your ONTAP system.

The lower cost storage is going to be object storage on StorageGRID Webscale, Amazon S3, or on Azure Blob. When you do tier your data, you can choose to move your snapshots only, or the SnapMirror destination traffic only, or you can see all of the data you want to be eligible to be tiered. You can configure the system as a thin provisioned volume.

The volume looks big to the client, but you only put your hot data on there. After your data gets cold, it will be moved off to that lower cost storage. Therefore, it means that you get better utilization on your SSD drives becoming more efficient.

On the volumes where all data is eligible to be tiered, untouched blogs will be moved to object storage after 31 days by default. However, you can set it to a shorter or longer period as well.

Whenever you've got any blocks that have been tiered off to the Object Storage and a client wants to read or write to them later, the blocks will be pulled back and marked as hot again.

The MetaData remains local. Fabric Tool is a tiering solution, not a backup solution. Even the cold data that's been moved off the MetaData still stays on the SSDs.

So don't think, "Well I can lose my SSDs and I can still get that data." Fabric Tool allows you to get more efficiency, better cost-effectiveness out of your SSD drive, therefore you would still need a backup in place.

Cloud Backup (formerly AltaVault)

Cloud Backup which was formerly known as AltaVault backs up data to Object Storage on public or private Cloud. The Cloud Backup device, which is either a hardware or a virtual machine, sits between a backup server and the Cloud. SnapMirror to Cloud Backup is also supported.

The Cloud Backup device has its own storage space. All backups are sent to the Cloud and the recent backups are stored on the device. This means that the majority of restores can be completed quickly on site without incurring Cloud bandwidth costs.

Your Cloud Backup device stays on the same site as the storage that you're backing up. The backups go the Cloud Backup device and it performs deduplication and compression. Then, it sends them off to the Object Storage. Deduplication and compression allow backups to take up less space, leading to cost savings.

The most recent backups are cached on the device. Let’s say that you're moving those backups off to public Cloud. When you do a restore, you don't want to be pulling the data back from the public Cloud again because that will be slow and expensive. When you do restores, most often it's because somebody's done an accidental deletion or an accidental change.

Normally when you do a restore, it is going to be recent data that is stored on the Cloud Backup device, which is on premises. Therefore, you don't have to pull the data back from the public Cloud. It can be done quickly and at no cost.

As I mentioned earlier, the Cloud Backup is installed as a device. It is either a physical appliance or a virtual machine that runs on KVM, Hyper-V, or VMware. It can also run as a Cloud instance in AWS or Azure.

Cloud Backup performs:

- Inline deduplication and compression, providing cost savings.

- Data encryption before sending the backups off to the Cloud, providing security.

Cloud Backup or AltaVault evolve from the acquisition of Riverbed's SteelStore in 2014. Riverbed is best known as a compression company, specializing in network compression particularly.

Cloud Backup Use Cases

The use cases of Cloud Backup are the following:

- Backup to Private or Public Cloud. You can back up to your own object store or you can back up to pretty much any of the Cloud providers.

- Disaster Recovery.

- Cloud Backup to Private Cloud and Private Cloud to Public Cloud Tertiary Backup. It's recommended to have three copies of your data. You can have the primary data on your storage system, and the secondary data is cached on the Cloud Backup device. It also can be in your own private object store on site and you can backup offsite to public Cloud as well.

- Cloud Disaster Recovery (DR) Site. It can either be your own DR site and your own building offsite or you can do DR in the Cloud. You can spin up an AWS or Azure instance of Cloud Backup and get your actual backups in the Cloud.

- Supports migration from one Cloud provider to another.

Cloud Backup Appliance

Let's have a look at the Cloud Backup in a bit more detail. You can get the Cloud Backup datasheet in the NetApp website and it is stated there that it supports data coming in from SnapMirror or ONTAP system and pretty much all of the well known backup solutions in the market.

The backup then is sent to your AltaVault appliance, either a physical appliance or running in software. Both of them have the cache storage and then from there, it gets sent out to the object storage. It could either be a public cloud, like Amazon or Azure, or to your own private cloud such as NetApp Storage Grid Webscale.

Cloud Sync

Cloud Sync allows you to synchronize data between CIFS, NFS, Object Storage, and Cloud Storage. Network broker software runs on premises or in the cloud and manages with sync relationships.

Cloud Sync is not like the Cloud backup which is specifically for backups and caches a local copy of your recent backups. Cloud sync is more of a basic service used for moving your data around.

After the initial copy or the initial sync, the service will then sync any changed data every 24 hours. So, you can use this for copying data from one location to another and it can keep them in sync afterward as well.

In the NetApp website, you can see the different options with Cloud Sync. From AWS EFS, you can go to AWS S3 or NFS server. From AWS S3, you can go to an AWS EFS, NFS, CIFS server, or another AWS S3 bucket in another location.

Azure, IBM, and Google are supported as well. Pretty much any NFS and CIF server can migrate to or from the public cloud using the Cloud Sync service.

Cloud Sync Use Cases

The use cases for Cloud Sync are:

- Data Migration to the Cloud

- Run Analytic Services in the Cloud

- Tech Refresh. It can help with moving the data around.

- Datacenter consolidation. You can use it to pull data from the cloud.

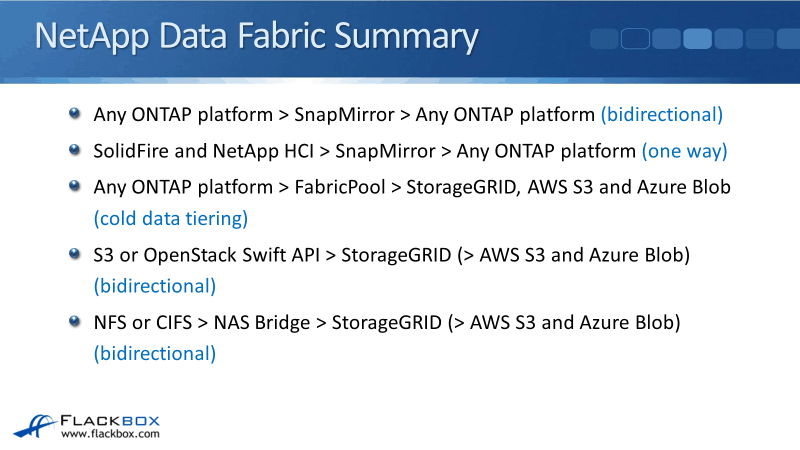

NetApp Data Fabric Summary

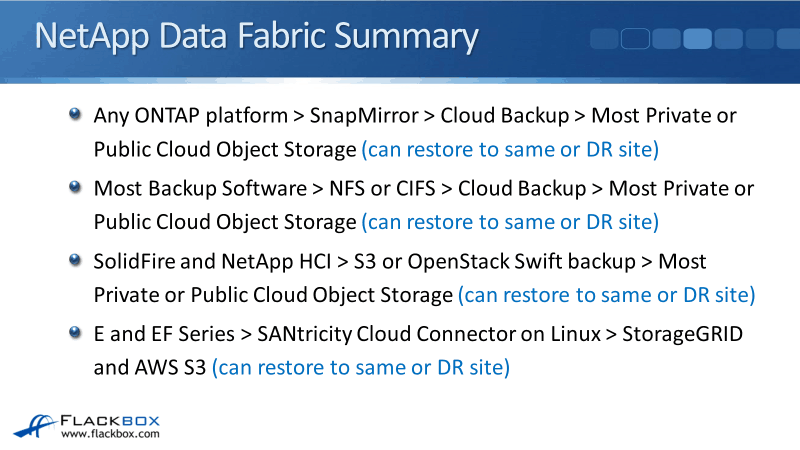

Now, I'm going to give you a summary of all the different mobility options. This is something that NetApp is working hard on right now. So this list for sure is going to be updated over the coming months. This is what's available right now:

- From any ONTAP platform, you can SnapMirror to any other ONTAP platform. This is bidirectional which means the data can go either way around.

- From SolidFire and NetApp HCI, you can SnapMirror to ONTAP. Right now, this is one way. From ONTAP you can not SnapMirror to SolidFire and NetApp HCI.

- From any ONTAP platform, you can FabricPool, StorageGRID, AWS S3, and Azure Blob. That is cold data tiering.

- S3 or OpenStack Swift API can be used to send objects to StorageGrid which is on private Cloud. From there you can also optionally send the data off to the objects on AWS S3 and Azure Blob.

- From an NFS or CIFS client, you can use the NAS Bridge to send traffic to StorageGrid, then optionally onto AWS S3 and Azure.

- Any ONTAP platform can SnapMirror to Cloud Backup which can send the data to most private or public cloud object storage after it has done the deduplication and the compression. If you lose your primary site, you can restore to your primary or different DR site.

- Most backup software can do the same thing as well. So for saying that it's a Cloud Backup. It can either be from SnapMirror or it can be from most backup software.

- SolidFire and NetApp HCI can do a backup using S3 or OpenStack Swift APIs and that can be sent to most private or public cloud object storage. Again, you can restore that back to the same or a different DR site.

- The E and the EF series platforms can use the SANtricity Cloud Connector on Linux to also do backups to StorageGRID and AWS S3.

There are more features that can be used for data mobility:

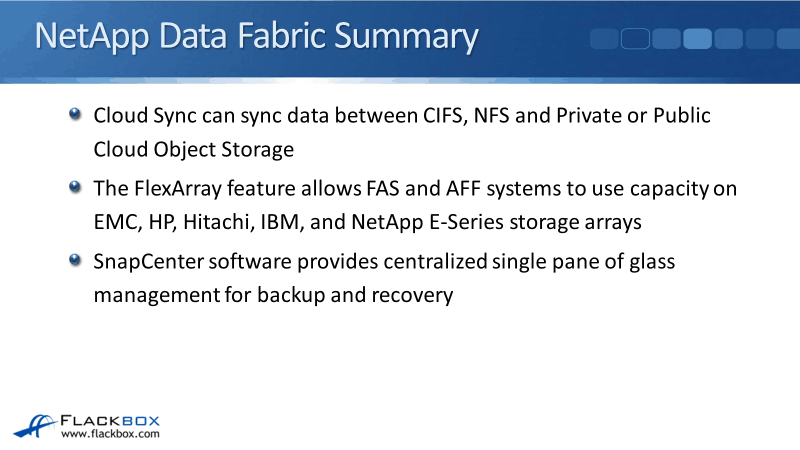

- Cloud Sync can sync data between CIFS, NFS, and private or public Cloud Object Storage.

- FlexArray feature on ONTAP allows FAS and AFF systems to use capacity on EMC, HP, Hitachi, IBM, and NetApp E-Series storage arrays.

- SnapCenter software provides a centralized single pane of glass management for backup and recovery.

Additional Resources

What Is a Data Fabric?: https://www.netapp.com/data-fabric/what-is-data-fabric/

Learn How You Can Start Building Your Data Fabric Today: https://www.netapp.com/blog/learn-how-you-can-start-building-your-data-fabric-today/

The Season for the Data Fabric: https://www.netapp.com/blog/the-season-for-the-data-fabric/

Click Here to get my 'NetApp ONTAP 9 Storage Complete' training course.

Libby Teofilo

Text by Libby Teofilo, Technical Writer at www.flackbox.com

Libby’s passion for technology drives her to constantly learn and share her insights. When she’s not immersed in the tech world, she’s either lost in a good book with a cup of coffee or out exploring on her next adventure. Always curious, always inspired.