In this NetApp tutorial, you’ll learn about the different administrative components on the cluster. I’ll tell you about Volume Zero and Aggregate Zero, and also about the replicated database and our admin SVMs.

When the system first comes to you from the factory, it already has ONTAP installed. The system image is stored on CompactFlash boot media, and that's what the system boots from. So, it's able to boot into the operating system, but it has a blank configuration, ready for you to start configuring the system.

Vol0 – The Node Root Volume

When you do configure the system, that information is stored on disk. A volume is the lowest level at which data can be accessed. Because the system information you configure is stored on disk, we need a volume and an aggregate to store it on. The volume for that system information is Vol0 (Volume Zero), and it is stored on Aggregate Zero.

The system information is replicated between all the different nodes in the cluster. It is possible that a node could fail, so obviously, we don't want to have it just sitting on one node. We want to have it on all nodes so they've all got access to that same information. On each node, that information is stored on Vol0, which is in Aggregate Zero.

That system information, including the replicated database and log files, is stored on Vol0. The system information, as I said, is replicated between all the different nodes in the cluster, and Vol0 is dedicated to system information. You cannot have any normal user data saved in Vol0.

The Replicated Database (RDB)

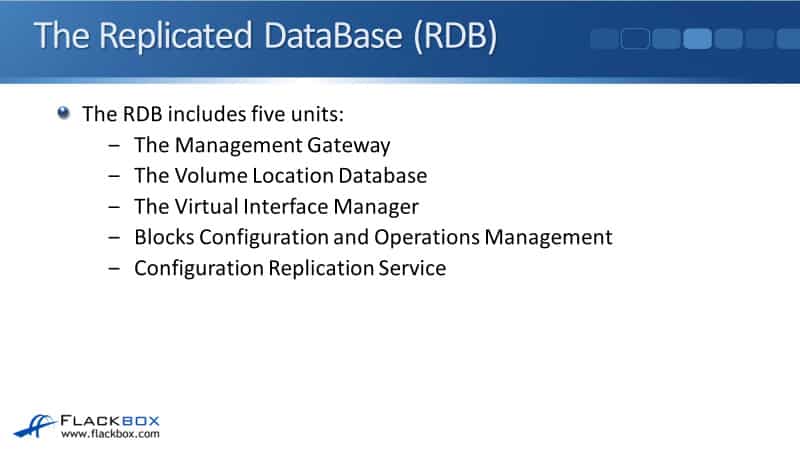

The replicated database (RDB) has five units: the management gateway, the volume location database, the virtual interface manager, blocks configuration and operations management, and the configuration replication service.

For each of the RDB units, one node in the cluster is elected as the replication master, and that node is in control of the replication for that unit. The same node will typically be the master for all units. Typically, it will be the first node in the cluster, but that can change if there are any failover events.

The Management Gateway (Mgmt)

Now, let's talk about those different units. The first one was the management gateway which provides the management command line interface. The entire cluster, including all the nodes that make up the cluster, is managed as a whole. There is one command line interface that you use to manage all nodes, therefore, you don't need to configure nodes individually.

This is something that can confuse beginners to NetApp ONTAP. When we built the cluster, we configured a separate management IP address for each of the separate nodes. What some beginners think is, "Oh, I have to connect to, for example, in our cluster, 172.23.1.12 to manage node one, I connect to 172.23.1.13 to manage node two and so on." But it doesn't work like that. If we had to manage each of our nodes separately, that would be super inconvenient. So, you actually manage the entire cluster as a whole.

For example, we've got our aggregates on node one and we've got our aggregates on node two. You don't connect to node one to manage its aggregates and connect to node two to manage its aggregates, you connect to the cluster management address.

If you're using the GUI system manager, when you go to the aggregates page, you will see the aggregates for both node one and node two. If you wanted to create a new aggregate on either node, you do it from that one interface.

It's the same with the command line. When you connect with the command line, you can see the aggregates across all the nodes in the cluster and you can create an aggregate on any node in the cluster as well. Obviously, it's not just the aggregates. Everything that you manage in the system is managed from that single pane of glass.

You can use the GUI or the command line to manage the cluster by connecting to the cluster management IP address. That's where you'll normally connect. When you make any changes, those changes are replicated between all the nodes in the cluster, so they all have that information stored on their local Volume Zero.

The Volume Location Database (VLDB)

The next one is the volume location database, the VLDB. It lists which aggregate contains each volume and which node contains each aggregate. This matters because a client might connect to a different node than the one which hosts the volume.

For example, say that we have got a four node cluster and we've got a volume on node one which a client is going to connect to. Well, we don't just configure an IP address on node one for the client to connect to, we'll configure an IP address on all four nodes in the cluster. That's the best practice.

We're also going to load balance the incoming connections across all four nodes so we can get better performance from the system. Rather than having all the clients hit one node, we're going to have those incoming connections spread across all four nodes in the cluster.

If a client hits node four and it wants to access a volume which is on node one, well, node four needs to know where that volume is. So, the VLDB is what is used to keep track of that information.

Also, as the administrator, you can move a volume to a different aggregate. Let's say that the aggregate is running out of disk space, or maybe you want to move the volume to lower performance disks because it's older data now. You can do that with a volume move.

When that happens, the VLDB is updated to reflect which aggregate the volume is in now. The VLDB is stored on Vol0 on the hard disks, and it's also cached in memory on each node to optimize lookup performance.
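To make that a bit more concrete, here is a toy Python sketch of the two lookups described above: volume to aggregate, and aggregate to node. This is only an illustration of the idea, not how ONTAP implements the VLDB. The class name `ToyVLDB` and all of the volume, aggregate, and node names are made up for the example.

```python
class ToyVLDB:
    """Toy model of the two mappings the VLDB keeps (not real ONTAP code)."""

    def __init__(self):
        self.volume_to_aggregate = {}  # volume name -> aggregate name
        self.aggregate_to_node = {}    # aggregate name -> node name

    def add_aggregate(self, aggregate, node):
        self.aggregate_to_node[aggregate] = node

    def add_volume(self, volume, aggregate):
        if aggregate not in self.aggregate_to_node:
            raise KeyError(f"unknown aggregate: {aggregate}")
        self.volume_to_aggregate[volume] = aggregate

    def locate(self, volume):
        """Return (aggregate, node) for a volume, as any node could look it up."""
        aggregate = self.volume_to_aggregate[volume]
        return aggregate, self.aggregate_to_node[aggregate]

    def move_volume(self, volume, new_aggregate):
        """Record a volume move by pointing the volume at its new aggregate."""
        if volume not in self.volume_to_aggregate:
            raise KeyError(f"unknown volume: {volume}")
        if new_aggregate not in self.aggregate_to_node:
            raise KeyError(f"unknown aggregate: {new_aggregate}")
        self.volume_to_aggregate[volume] = new_aggregate


# Hypothetical names, chosen only for this example.
vldb = ToyVLDB()
vldb.add_aggregate("aggr1_n1", "node1")
vldb.add_aggregate("aggr1_n4", "node4")
vldb.add_volume("vol_sales", "aggr1_n1")

# A client request lands on node4; node4 looks up where the volume lives.
before_move = vldb.locate("vol_sales")

# The administrator moves the volume; the mapping is updated to match.
vldb.move_volume("vol_sales", "aggr1_n4")
after_move = vldb.locate("vol_sales")

print(before_move)
print(after_move)
```

The point of the sketch is that a volume move only changes one entry in the volume-to-aggregate mapping, and every node that does the lookup afterwards gets the new location.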

The Virtual Interface Manager (VIFMGR)

The next unit is the virtual interface manager. IP addresses on the system live on a logical interface (LIF). Those logical interfaces are not tied to a physical port on the system so they can move. That's why we use a logical interface rather than putting the IP address directly onto the physical interface.

The virtual interface manager lists which physical interface the logical interfaces are currently on. A reason that a logical interface would move from one physical port to a different one would be if a node failed, for example.
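As a rough illustration, the table that the virtual interface manager keeps can be pictured as a list of LIFs, each with the port it currently sits on. The Python below is a toy model under that assumption, not ONTAP code. The `Lif` class, the `fail_over` helper, the LIF names, the port names, and the IP addresses are all invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Lif:
    name: str
    ip: str
    node: str  # node of the port currently hosting the LIF
    port: str  # physical port currently hosting the LIF


def fail_over(lifs, failed_node, target_node, target_port):
    """Move every LIF hosted on failed_node to the target port.

    Returns the names of the LIFs that moved. The IP address stays with
    the LIF; only the hosting node and port change.
    """
    moved = []
    for lif in lifs:
        if lif.node == failed_node:
            lif.node = target_node
            lif.port = target_port
            moved.append(lif.name)
    return moved


# Hypothetical LIF table for a two node cluster.
lif_table = [
    Lif("data1", "192.0.2.10", "node1", "e0c"),
    Lif("data2", "192.0.2.11", "node2", "e0c"),
]

# node1 fails, so its LIF is re-homed onto a port on node2.
moved = fail_over(lif_table, "node1", "node2", "e0d")
print(moved)
print(lif_table)
```

Because the IP address belongs to the logical interface and not to the port, clients keep using the same address after the move.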

Blocks Configuration and Operations Management (BCOM)

The next unit is blocks configuration and operations management, that's BCOM. Our SAN protocols provide block-level access to clients. The BCOM unit stores information for our SAN protocols. It includes information on the LUNs and the igroups, which is how LUN masking is configured in NetApp.

Configuration Replication Service

Finally, we've got the configuration replication service. The configuration replication service is used by MetroCluster to replicate configuration and operational data to the remote secondary cluster. MetroCluster is a disaster recovery feature.

You can have half of your system in one building and you can have the other half of your system in a separate building in a different area. That way, if you lose either building, your clients still get access to their data.

Data SVMs

The next thing that I want to talk about is our admin SVMs. Before we get onto the admin SVMs, let's have a reminder about what our data SVMs are. Our data storage virtual machines serve data to clients. Whenever you hear anybody talking about SVMs in general, they're always talking about data SVMs.

No data SVMs exist by default when the system first comes online. You need to have at least one data SVM for clients to access their data. You can create multiple data SVMs, for example, if you want to have secure multitenancy between your different departments or customers or if you want to split the management of different SAN and NAS protocols so it looks like they're on different storage systems.

Each data SVM does appear as a separate storage system for clients. So, if you've got a department A SVM and a department B SVM, they've got their own volumes, their own data, own IP addresses, and from the client's perspective, they look like completely separate storage systems.

The Administrative SVM

The admin SVM is also known as the cluster management server and it provides management access to the system. The admin SVM is created during system setup. When we run the cluster setup wizard, we create our cluster, configure our node management addresses, and configure our cluster management address. While we were doing that, the system was creating the admin SVM for the cluster management address to live on.

The admin SVM does not host user volumes, and it doesn't have any user data on there. It's purely for management access. The admin SVM owns the cluster management LIF. So, when we did the system setup, the cluster management IP address that we set is owned by the admin SVM.

The cluster management logical interface can fail over to any physical port throughout the cluster that is used for management. It could be on node one right now, and if node one fails, it can fail over to node two, so we can still access the cluster to manage it.

Node SVMs

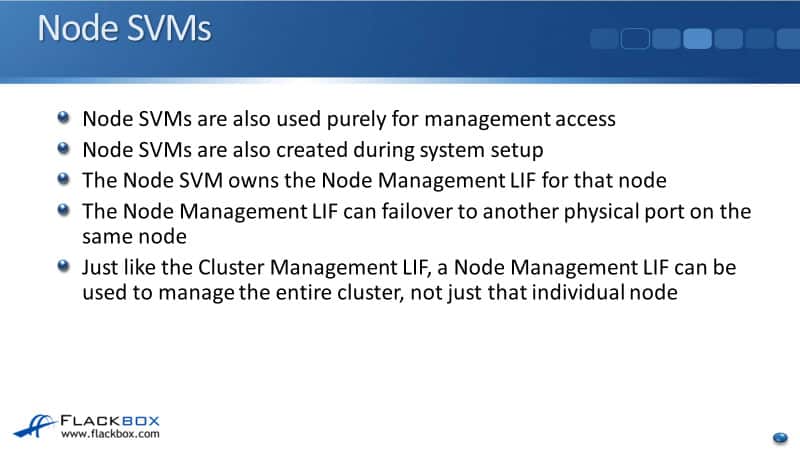

We've also got our node SVMs. So, the global cluster management IP address lives on the global admin SVM, and our node management addresses live on the node SVMs. Just like the admin SVM, the node SVMs are used purely for management access. You've got a separate node SVM for each node. They are also created during system setup, and this is where your node management LIF lives.

The node management LIF can fail over to another management port on the same node. So, the cluster management address can fail over to any management port on any node in the entire cluster, but the node management LIF always stays on that one node.

For beginners, this thing about the data SVM and the admin SVM can be a little bit confusing. Basically, when you're working with the cluster, you are going to be working with your data SVMs, and you'll be setting those up and configuring client access to their data.

The admin SVM is created automatically when you do the cluster setup. It's just there so that you can connect to the cluster and manage it, because we need somewhere for that cluster management IP address to live. After the initial setup, you can pretty much forget about the admin SVM.
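The difference in failover scope between the two kinds of management LIF can be sketched in a few lines of Python. This is a simplified model of the rule described above, not ONTAP code. The function `failover_targets`, the type labels `"cluster-mgmt"` and `"node-mgmt"`, and the port names are assumptions made up for the example.

```python
# Hypothetical management ports per node, for illustration only.
MGMT_PORTS = {
    "node1": ["e0M", "e0c"],
    "node2": ["e0M", "e0c"],
}


def failover_targets(lif_type, home_node, ports_by_node):
    """List the (node, port) pairs a management LIF may fail over to.

    cluster-mgmt: any management port on any node in the cluster.
    node-mgmt:    management ports on the LIF's own node only.
    """
    if lif_type == "cluster-mgmt":
        nodes = sorted(ports_by_node)
    elif lif_type == "node-mgmt":
        nodes = [home_node]
    else:
        raise ValueError(f"unknown LIF type: {lif_type}")
    return [(node, port) for node in nodes for port in ports_by_node[node]]


cluster_targets = failover_targets("cluster-mgmt", "node1", MGMT_PORTS)
node_targets = failover_targets("node-mgmt", "node1", MGMT_PORTS)

print(cluster_targets)
print(node_targets)
```

In this model the cluster management LIF has candidate ports on both nodes, while the node management LIF for node one only ever has candidates on node one.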

Additional Resources

What SVMs are: https://library.netapp.com/ecmdocs/ECMP1354558/html/GUID-E643017F-041B-4ECC-BEA1-E4D80E26A47E.html

Types of SVMs: https://library.netapp.com/ecmdocs/ECMP1354558/html/GUID-D860985F-11BF-4589-9457-E0FD03130B1F.html

Administration Overview with System Manager: https://docs.netapp.com/us-en/ontap/concept_administration_overview.html

Text by Libby Teofilo, Technical Writer at www.flackbox.com