In this Cisco CCNA tutorial, you'll learn how EtherChannel load balancing works.

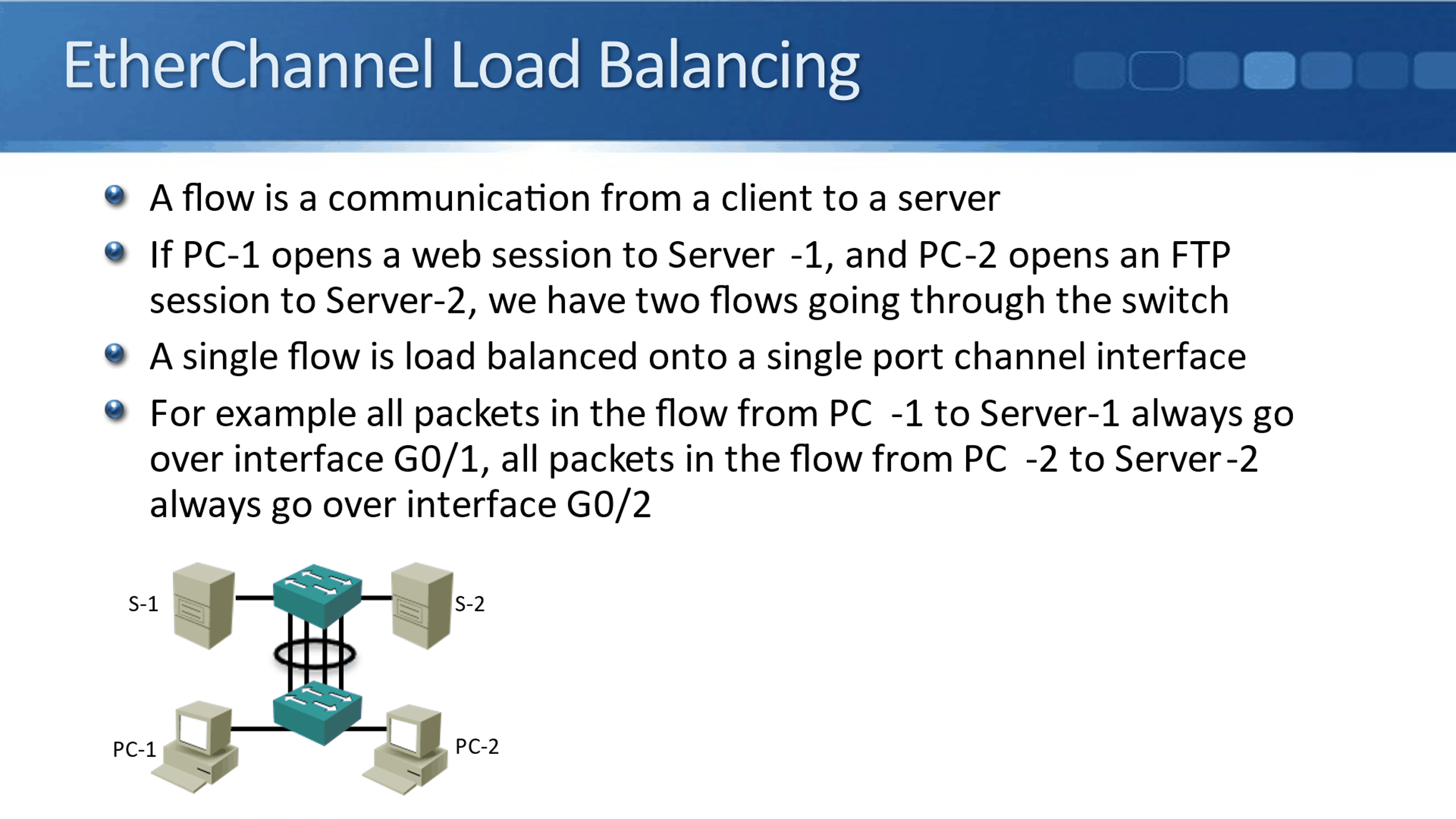

We're going to use the topology shown in the diagram. We've got two switches with four links between them that have been grouped into an EtherChannel. Each of those four links is a Gigabit Ethernet interface, numbered from Gigabit Ethernet 0/1 on the left to Gigabit Ethernet 0/4 on the right.

In the bottom switch, we've got some PCs plugged in, namely PC-1 and PC-2. In the top switch, we've got some servers, Server-1 and Server-2.

We're going to cover how EtherChannel load balances the different flows going across the links between the switches. A flow is a communication from a client to a server using a particular application.

If PC-1, for example, opens a web session to Server-1 and PC-2 opens an FTP session to Server-2, we'd have two flows going through our switches. With EtherChannel, a single flow is load balanced onto a single physical interface within the port channel.

For example, all packets in the flow between PC-1 and Server-1 always go over interface Gig 0/1. All packets in the flow between PC-2 and Server-2 always go over interface Gig 0/2.

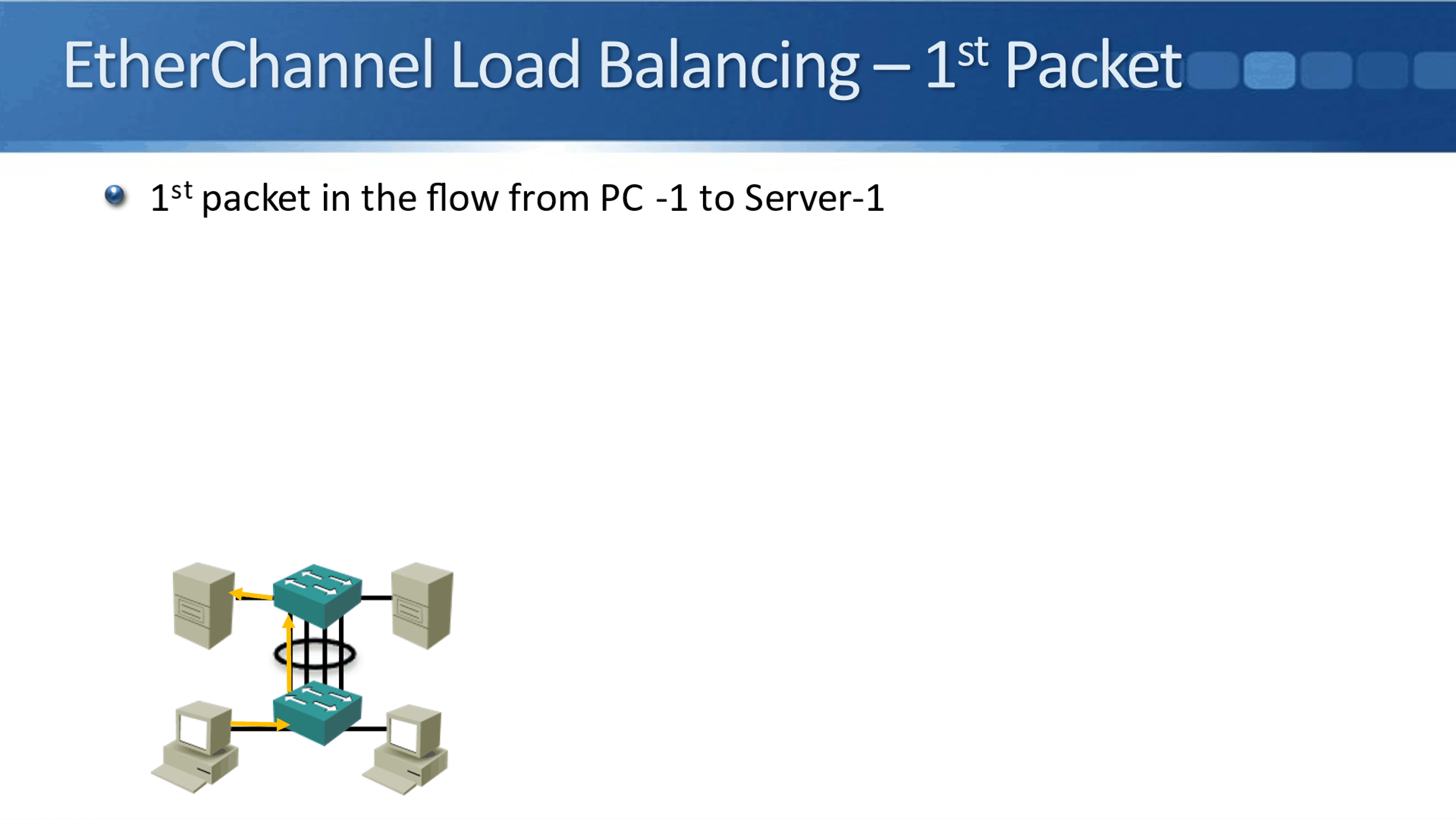

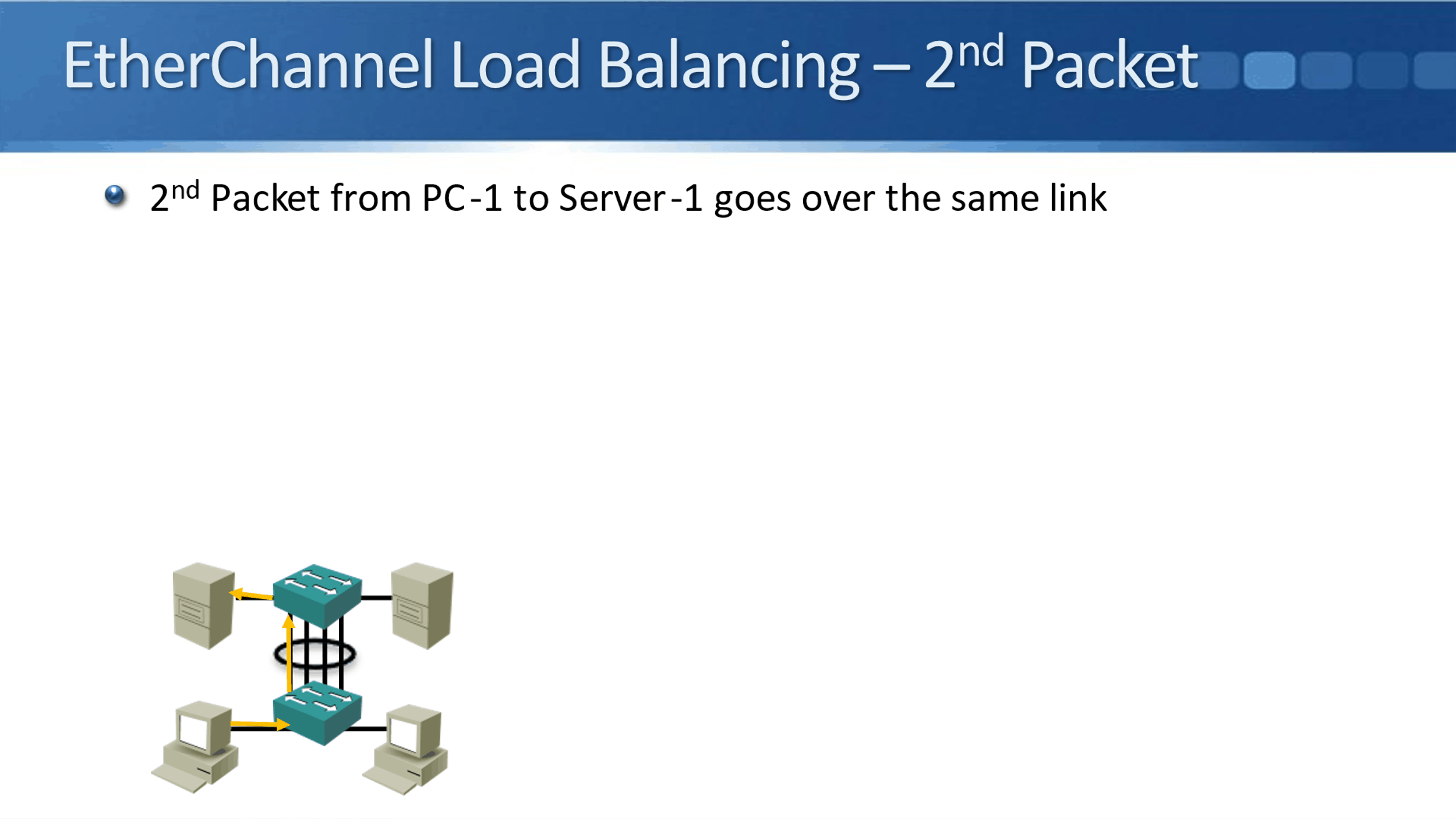

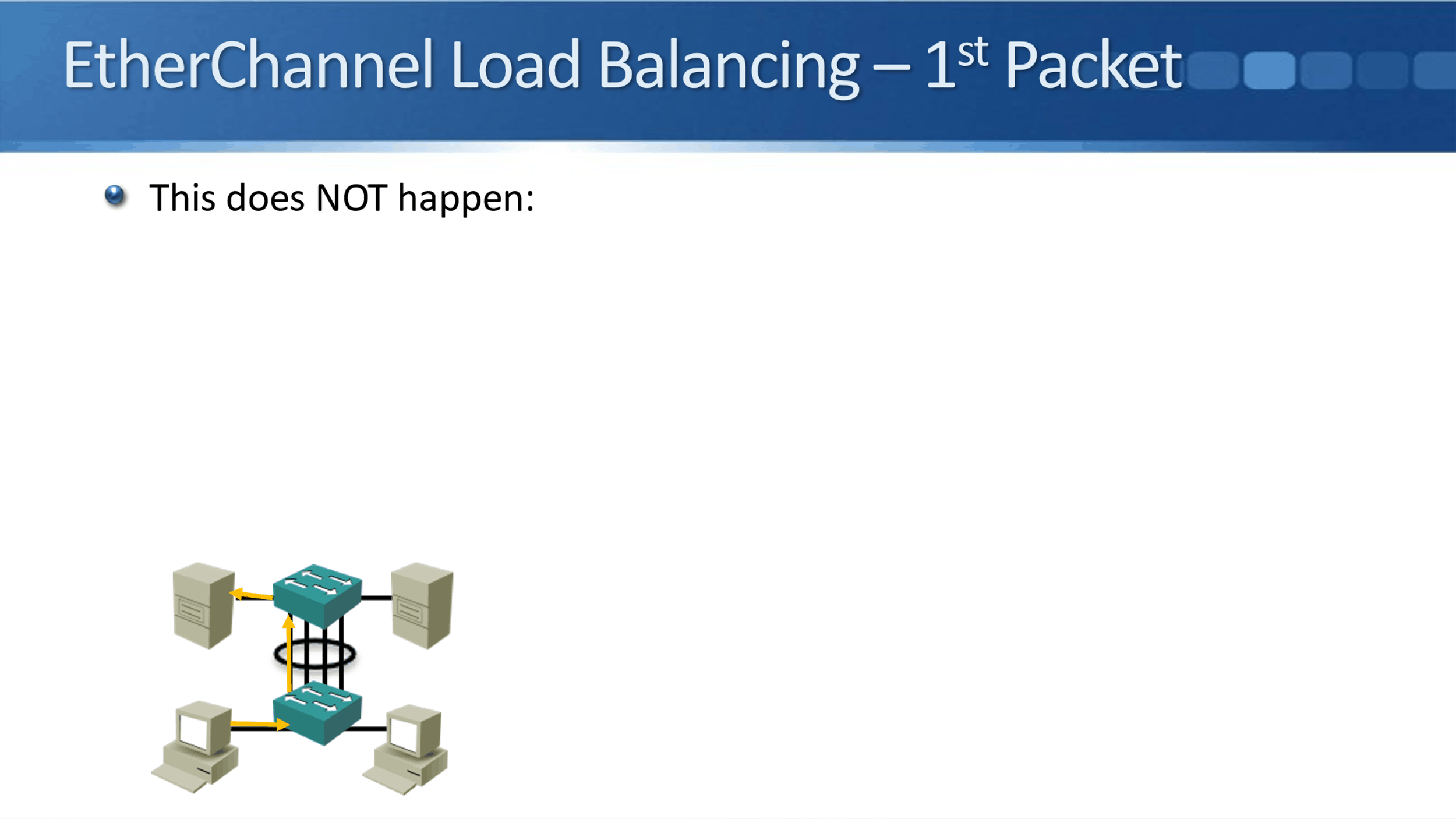

The first packet in the flow between PC-1 and Server-1 hits the first switch.

The switch decides which interface it's going to load balance the packet over. It chooses Gig 0/1, for example, and the packet then goes on to the server.

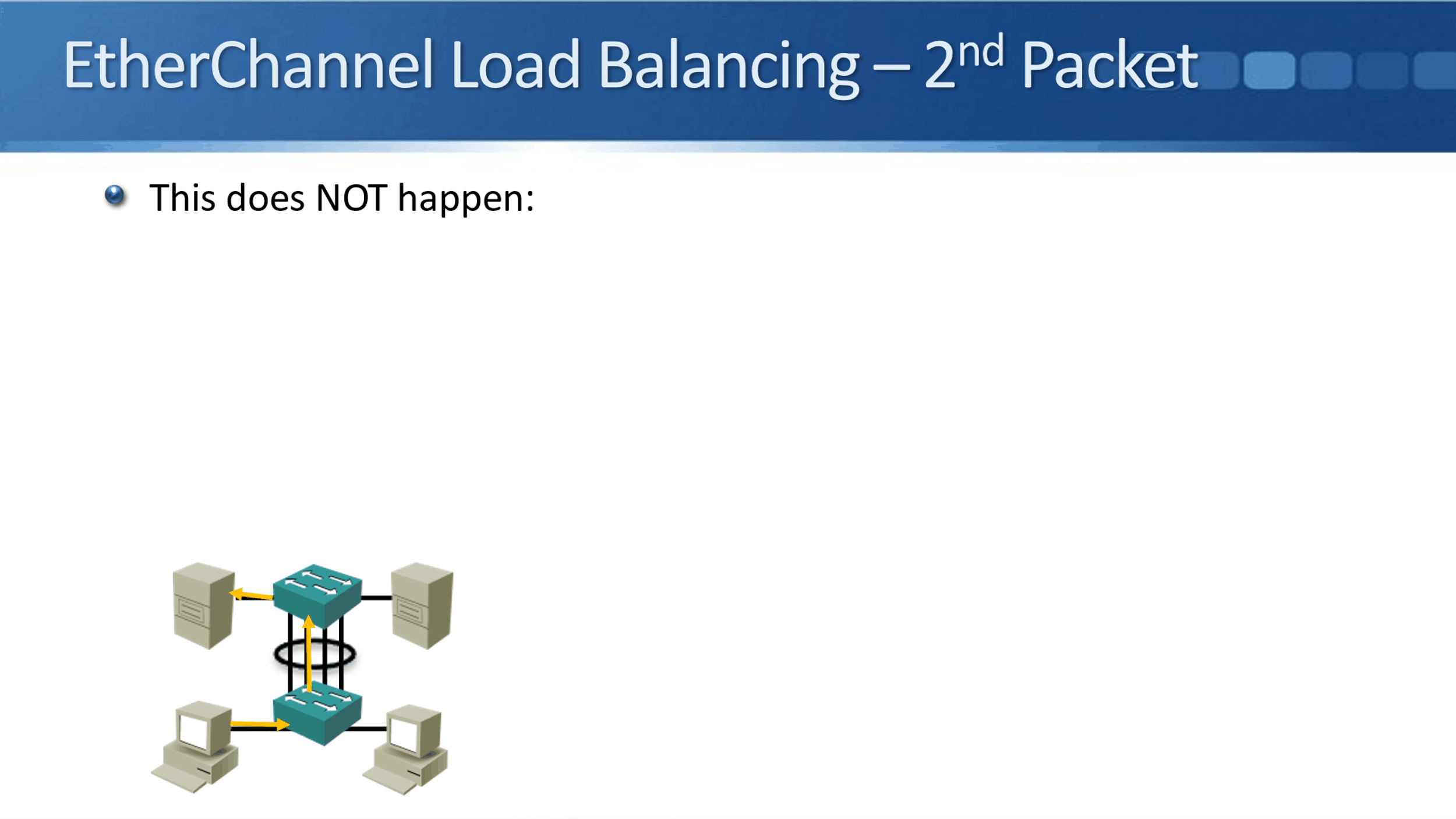

The next packet in the flow will also go over the same interface. When it comes into the switch, the switch load balances it onto the same interface again, and then it goes up to the server.

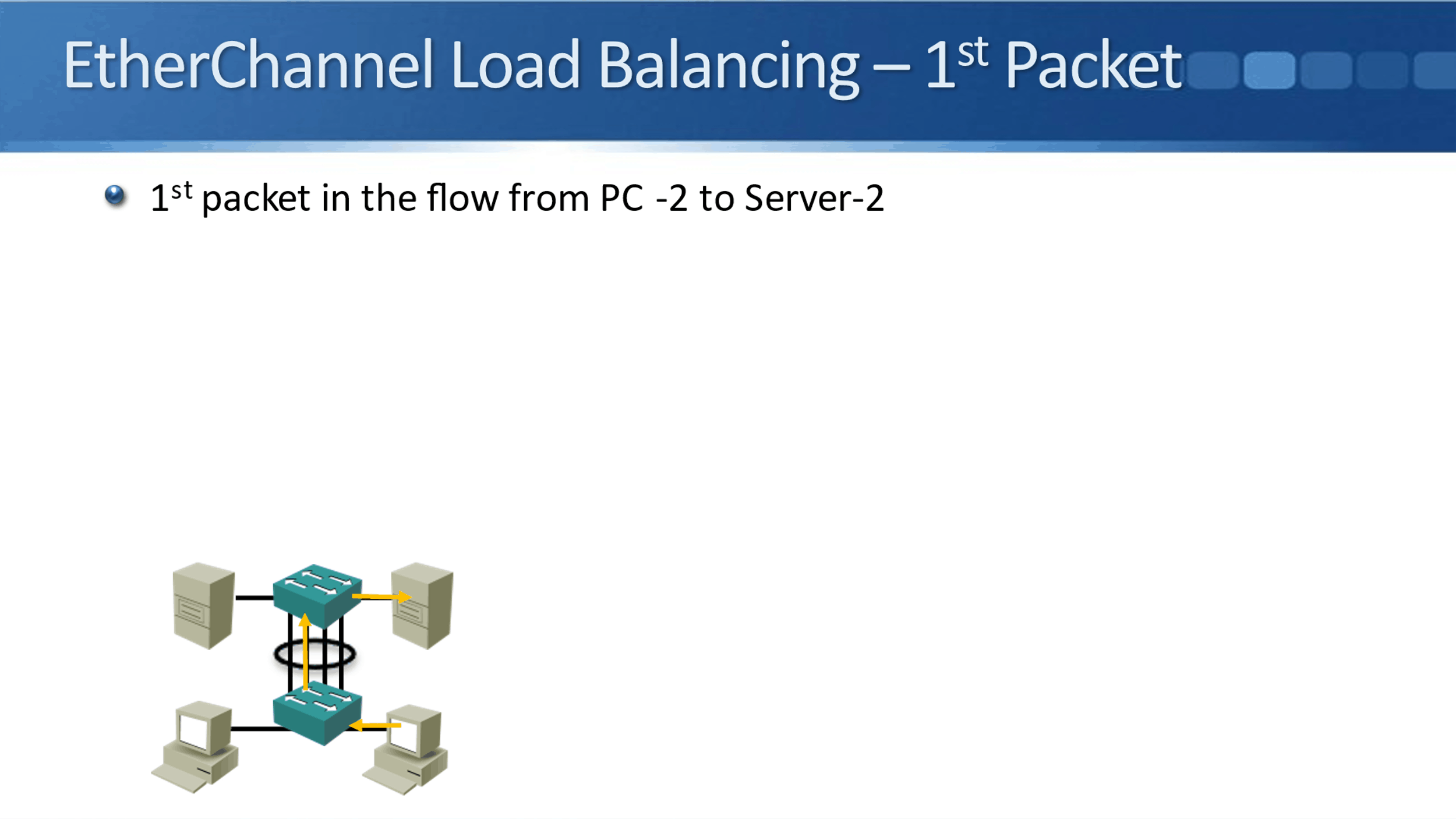

When the second flow, from PC-2 to Server-2, comes into the switch, the switch uses its algorithm to decide which interface to load balance it onto, Gig 0/2 for example. Then, it goes to the server.

When the second, third, and fourth packets in the PC-1 flow come in, they'll all be load balanced onto the same interface as the first. Packets from the same flow are always load balanced onto the same interface.
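The per-flow behaviour described above can be sketched in a few lines of Python. This is only an illustration: the `pick_link` function and the CRC32 hash are assumptions made for the example and are not Cisco's actual algorithm. Real switches hash on configurable fields such as MAC or IP addresses. The point is simply that a deterministic function of the flow's identifiers always returns the same member link.

```python
import zlib

# Member links of the port channel from the example topology.
LINKS = ["Gi0/1", "Gi0/2", "Gi0/3", "Gi0/4"]

def pick_link(src, dst, links=LINKS):
    """Map a flow to one member link (illustrative hash, not Cisco's)."""
    key = f"{src}->{dst}".encode()
    # Same inputs always give the same hash, so the same link is chosen.
    return links[zlib.crc32(key) % len(links)]

# Four packets from the same flow all land on one link.
links_a = {pick_link("PC-1", "Server-1") for _ in range(4)}
print(links_a)

# A different flow is hashed independently and may use another link.
print(pick_link("PC-2", "Server-2"))
```

Because the choice depends only on the flow's identifiers, two flows can also end up on the same link; the distribution is not guaranteed to be even.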

Packets are not load balanced round robin across all the interfaces in the port channel. For example, we don't load balance the first packet from PC-1 to Server-1 onto interface Gig 0/1, and then the second packet in that same flow onto Gig 0/2.

The reason for that is that round robin load balancing could cause packets to arrive out of order at the destination, and that would break some applications. Therefore, we always load balance packets from the same flow on the same interface, so they're always going to arrive in order.
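To make the reordering risk concrete, here is a small simulation. The per-link delays are hypothetical numbers chosen for the example (in practice links differ because of queueing, not fixed values), and `arrival_order` is an illustrative helper, not part of any switch software.

```python
from itertools import cycle

# Hypothetical one-way delay of each member link, in arbitrary time units.
LINK_DELAY = {"Gi0/1": 5, "Gi0/2": 1, "Gi0/3": 4, "Gi0/4": 1}

def arrival_order(assignments):
    """Return packet sequence numbers in the order they arrive.

    assignments is a list of (seq, link); packet seq is sent at time seq.
    """
    arrivals = [(seq + LINK_DELAY[link], seq) for seq, link in assignments]
    return [seq for _, seq in sorted(arrivals)]

packets = [1, 2, 3, 4]

# Round robin: each packet of the flow goes out on the next link in turn.
round_robin = list(zip(packets, cycle(LINK_DELAY)))
# Per-flow: every packet of the flow uses the same link.
per_flow = [(seq, "Gi0/1") for seq in packets]

print(arrival_order(round_robin))  # [2, 4, 1, 3] -> out of order
print(arrival_order(per_flow))     # [1, 2, 3, 4] -> in order
```

With round robin, packets 2 and 4 overtake packet 1 because they travel over faster links. Keeping the flow on one link preserves the sending order.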

To illustrate what does NOT happen: the first packet in the flow goes over interface Gig 0/1, and then the second packet in the same flow goes over Gig 0/2.

Because a single flow is always load balanced onto the same interface, any single flow receives at most the bandwidth of a single link in the port channel. In our example, where we are using 1 Gbps links between our switches, that's a maximum of 1 Gbps per flow and an aggregate of 4 Gbps across all flows.
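The arithmetic can be written out as a short sketch. The function names are made up for this example, and the aggregate figure is an upper bound that is only reached if the flows happen to be spread evenly across all four links.

```python
def channel_limits(link_gbps, member_links):
    """Return (max bandwidth of one flow, aggregate bandwidth) in Gbps."""
    return link_gbps, link_gbps * member_links

def flow_throughput(demand_gbps, link_gbps):
    """A single flow is capped at the speed of the one link it uses."""
    return min(demand_gbps, link_gbps)

per_flow_max, aggregate = channel_limits(link_gbps=1, member_links=4)
print(per_flow_max, aggregate)                          # 1 4
print(flow_throughput(demand_gbps=3, link_gbps=1))      # 1
```

Even a flow that could use 3 Gbps is limited to 1 Gbps here, because it never spills over onto a second link.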

You can think of a port channel as a multi-lane motorway. Each car stays in its own lane, but because there are multiple lanes, the overall traffic gets there quicker. In our example, if we only had one uplink rather than four, we wouldn't have as much overall bandwidth available between the switches.

EtherChannel provides redundancy as well as load balancing. If a link fails, the flows will be load balanced to the remaining links.
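Failover can be sketched the same way. As before, `pick_link` and the CRC32 hash are assumptions for illustration rather than the switch's real algorithm, and the extra flows in the list are hypothetical. The sketch shows that once a link is removed from the set of active links, every flow is mapped onto one of the remaining links.

```python
import zlib

def pick_link(src, dst, active_links):
    """Map a flow to one of the currently active links (illustrative hash)."""
    key = f"{src}->{dst}".encode()
    return active_links[zlib.crc32(key) % len(active_links)]

flows = [
    ("PC-1", "Server-1"),
    ("PC-2", "Server-2"),
    ("PC-1", "Server-2"),  # hypothetical additional flows
    ("PC-2", "Server-1"),
]
all_links = ["Gi0/1", "Gi0/2", "Gi0/3", "Gi0/4"]

before = {flow: pick_link(*flow, all_links) for flow in flows}

# Fail whichever link currently carries the PC-1 to Server-1 flow.
failed = before[("PC-1", "Server-1")]
remaining = [link for link in all_links if link != failed]

after = {flow: pick_link(*flow, remaining) for flow in flows}

for flow in flows:
    print(flow, before[flow], "->", after[flow])
```

In this simple modulo-based sketch, flows that were not on the failed link may also move when the link count changes. How a real switch redistributes flows depends on the platform.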

Additional Resources

Understanding EtherChannel Load Balancing and Redundancy on Catalyst Switches: https://www.cisco.com/c/en/us/support/docs/lan-switching/etherchannel/12023-4.html

Implementing EtherChannel in a Switched Network: https://www.ciscopress.com/articles/article.asp?p=2348266&seqNum=3

EtherChannel Load Balancing Explanation & Configuration: https://study-ccnp.com/etherchannel-load-balancing-explanation-configuration/

Text by Libby Teofilo, Technical Writer at www.flackbox.com
