In this Cisco CCNA tutorial, you’ll learn about Neural Networks and Generative AI capabilities and models. Scroll down for the video and also the text tutorial.

Cisco Generative AI Models Video Tutorial


Neural Networks

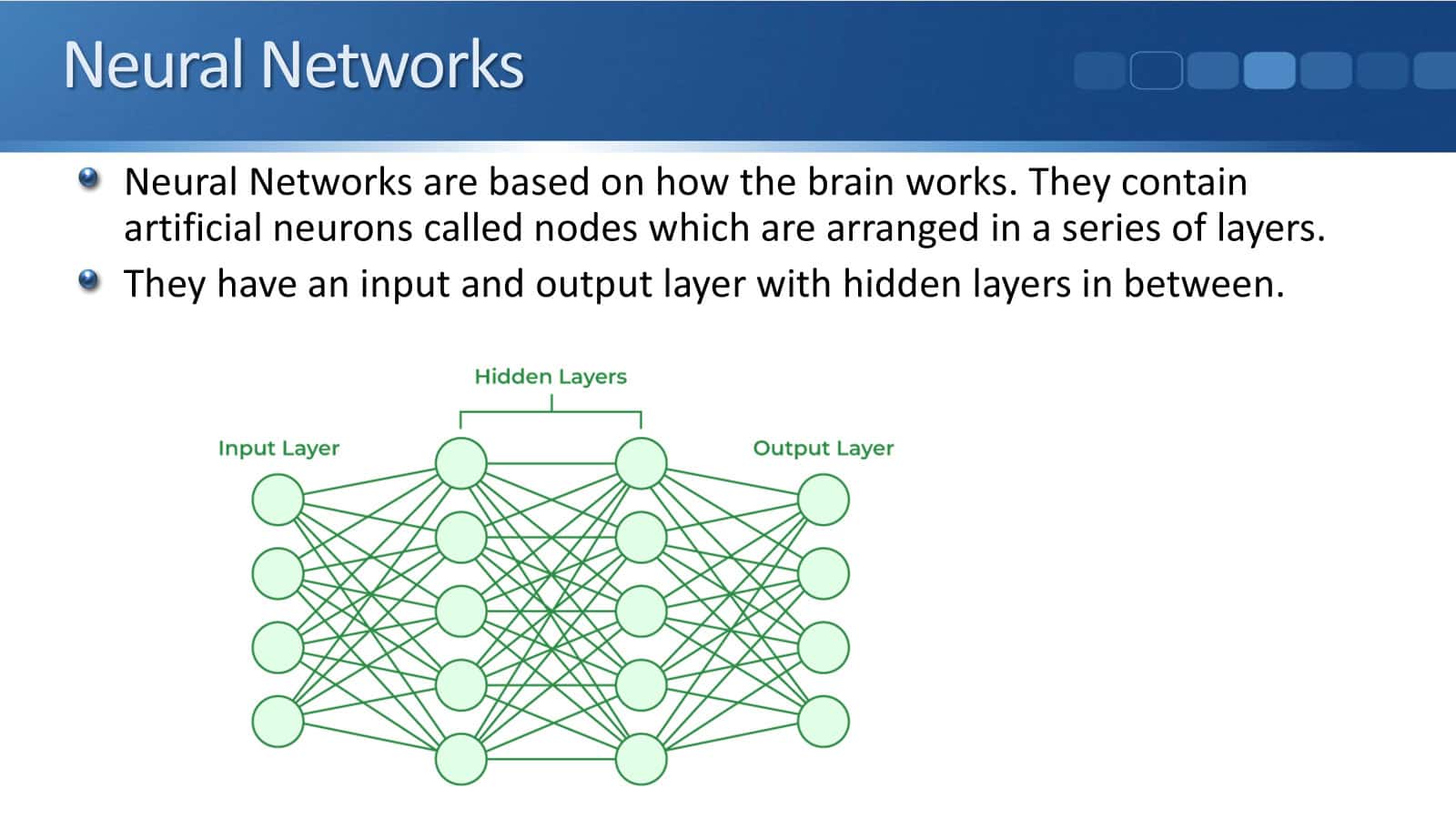

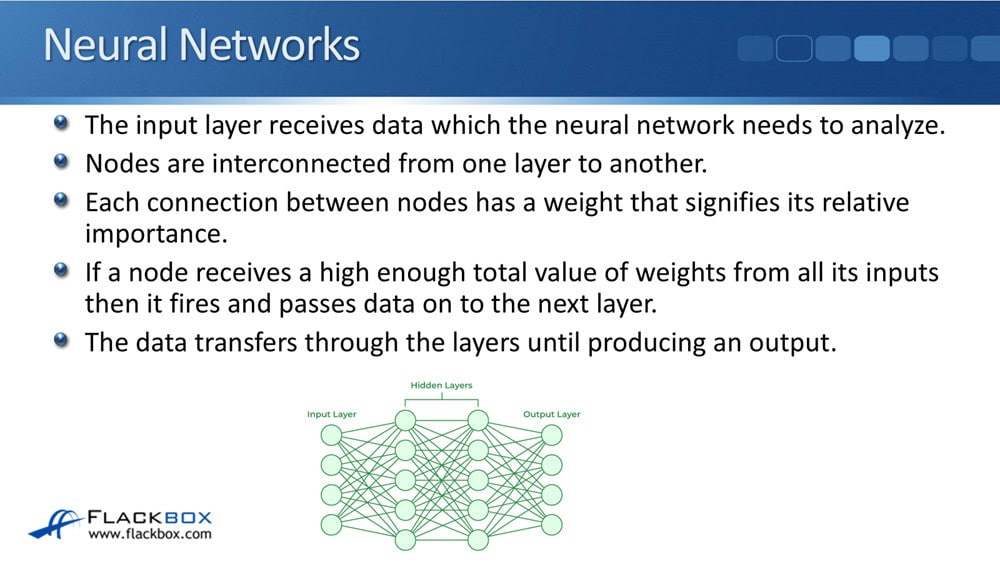

Neural networks are loosely modeled on how the brain works. They contain artificial neurons, called nodes, arranged in a series of layers. You can see a representation of a neural network in the diagrams above.

We’ve got a node here, which is similar to a neuron in the human brain. It has inputs coming into it, similar to a neuron's dendrites. It also has outputs going out, which are similar to axons. So, it's not the same as a human brain, but it was inspired by it.

Neural networks have an input layer and an output layer, with hidden layers in between. The input layer is where you give the network some data, and the output layer is where it generates the final result. All of the intelligence that determines how it reaches that final result is in the hidden layers.

The input layer receives the data that the neural network needs to analyze. Nodes are interconnected from one layer to the next: in a fully connected network, every node in one layer connects to every node in the following layer.

Each connection between nodes has a weight that signifies its relative importance. It's not the nodes themselves that have a weight. It's the connections that are coming into them.

Each node adds up its inputs, with each input multiplied by the weight of the connection it arrives on. If that weighted total is high enough, the node fires and passes data on to the next layer. The data then moves through the layers until the network produces the final output.
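The firing rule for a single node can be sketched in a few lines of Python. This is a minimal illustration, assuming a simple step (threshold) activation and hand-picked weights and threshold; real networks usually use smooth activation functions such as sigmoid or ReLU instead of a hard threshold. The function name `node_output` is hypothetical.

```python
def node_output(inputs, weights, threshold):
    """Return 1 (the node fires) if the weighted sum of its inputs reaches the threshold, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one connection weight")
    # Each input is scaled by the weight of the connection it arrives on.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum >= threshold else 0


# Hypothetical weights: the second connection carries the most importance.
weights = [0.2, 0.9, 0.5]
print(node_output([1.0, 1.0, 0.0], weights, threshold=1.0))  # 0.2 + 0.9 = 1.1, fires: 1
print(node_output([1.0, 0.0, 1.0], weights, threshold=1.0))  # 0.2 + 0.5 = 0.7, stays quiet: 0
```

Note that the weights live on the connections (one per input), not on the node itself, matching the description above.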

Deep Learning

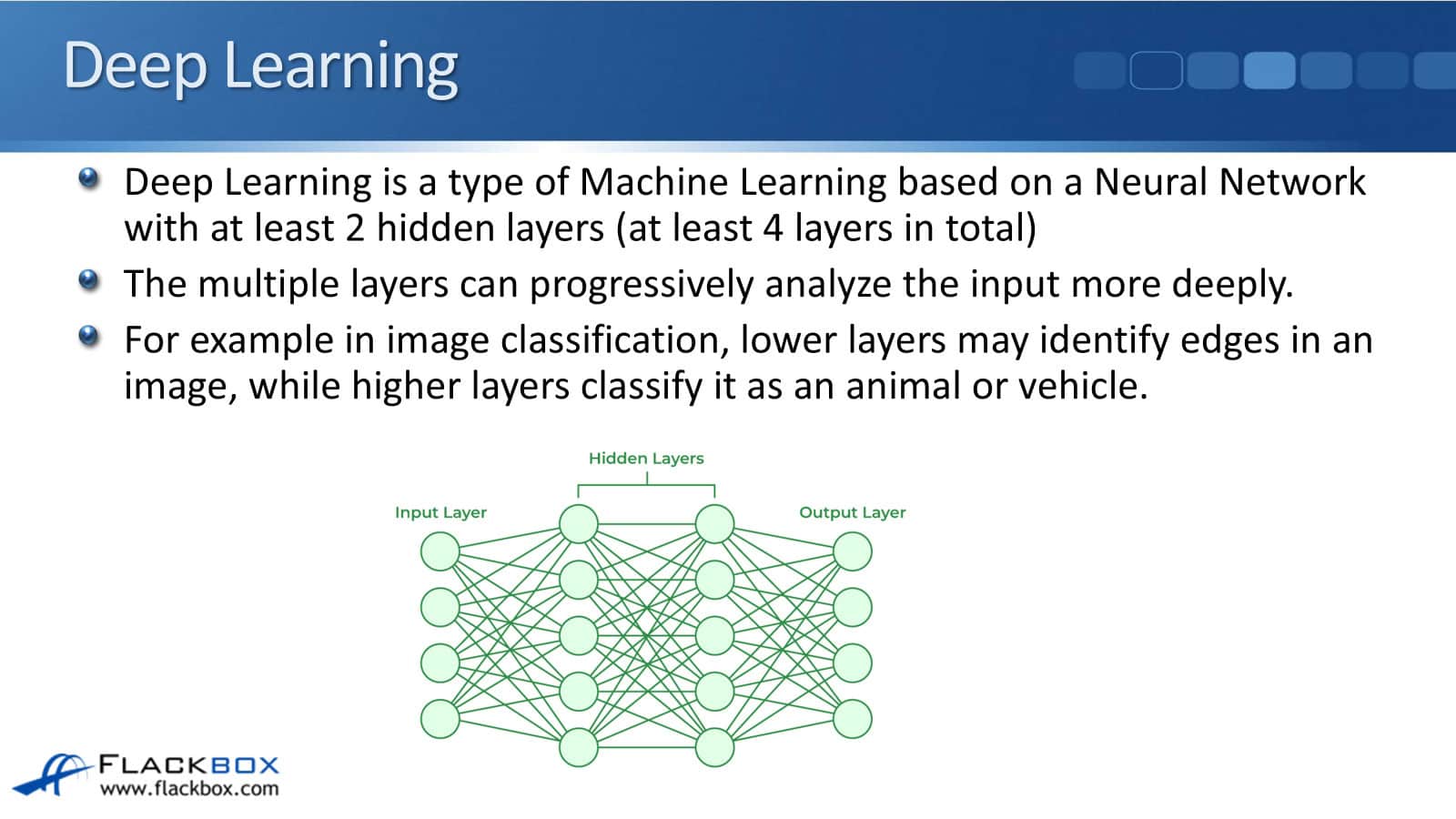

Deep Learning is a type of Machine Learning based on a Neural Network with at least 2 hidden layers, so that's at least 4 layers in total when we include the input and output layers.

The multiple layers can progressively analyze the input more deeply. For example, in image classification, if we feed this Neural Network images of different animals, it needs to classify which animal each image shows.

Lower layers may identify edges in an image, while higher layers classify it as an animal or vehicle, so it gets progressively more advanced as it goes through the different layers.
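To make the layer structure concrete, here is a minimal sketch of a forward pass through a small deep network: an input layer with 3 values, two hidden layers, and an output layer with 2 nodes, so 4 layers in total. All weights are made-up numbers (an untrained network), and the per-node bias term and sigmoid activation are assumptions commonly used in practice rather than details from this tutorial.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Fully connected layer: every input node connects to every node in this layer.
    weights[j][i] is the weight on the connection from input i to node j."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass data through each layer in turn; the last layer's values are the output."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical, untrained network: 3 inputs -> 4 hidden -> 3 hidden -> 2 outputs.
network = [
    ([[0.1, -0.2, 0.4], [0.5, 0.3, -0.1], [-0.3, 0.8, 0.2], [0.6, -0.5, 0.1]], [0.0, 0.1, -0.1, 0.0]),
    ([[0.2, -0.4, 0.3, 0.1], [-0.6, 0.1, 0.5, 0.2], [0.3, 0.3, -0.2, 0.4]], [0.05, 0.0, -0.05]),
    ([[0.7, -0.3, 0.2], [-0.4, 0.6, 0.1]], [0.0, 0.0]),
]
scores = forward([1.0, 0.5, -1.0], network)
print(scores)  # two values between 0 and 1, one per output node
```

Each hidden layer only sees the previous layer's output, which is what lets later layers build on the simpler features found by earlier ones.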

Neural Network Training

The path that data takes across the Neural Network, and therefore the final output, is influenced by the weights on the connections between nodes. If the wrong output is produced (for example, an input image of a cat is classified as a dog), then that must be corrected.

Therefore, we need to train the Neural Network to do its job correctly. This is usually done through backpropagation.

Backpropagation signals back through the network, from the output toward the input, that the weights have to be changed. Let's say that we input an image of a cat, but when it comes out the other side, it is classified as a dog.

Somewhere across the hidden layers, the data is not taking the correct path. We can influence that path by changing the weights of the connections. The correct answer can come from a human or from an automated method: when the output is incorrect, we say, "No, that was incorrect. You said that this was a dog, but it is actually a cat."

That error is signaled back through the network, and the system automatically uses a mathematical formula, based on how much each weight contributed to the error, to adjust the weights on the connections. We then repeat the process, cycling through many iterations of backpropagation until the system consistently produces the correct result.
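The training loop described above can be sketched with a tiny network (2 inputs, 2 hidden nodes, 1 output) trained by backpropagation with gradient descent. This is a minimal sketch under stated assumptions: the labeled data set is a hypothetical toy (its labels follow logical OR rather than cats and dogs), and the starting weights, learning rate, sigmoid activation, and squared-error loss are illustrative choices, not the only way to do it.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical labeled training data: two input features -> correct answer.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]


def train(data, epochs=5000, lr=0.5):
    """Train a 2-input, 2-hidden-node, 1-output network with backpropagation."""
    w_h = [[0.15, -0.25], [-0.30, 0.20]]  # w_h[j][i]: input i -> hidden node j
    b_h = [0.0, 0.0]
    w_o = [0.10, -0.10]                   # hidden node j -> output node
    b_o = 0.0

    def forward(x):
        hidden = [sigmoid(w_h[j][0] * x[0] + w_h[j][1] * x[1] + b_h[j]) for j in range(2)]
        output = sigmoid(w_o[0] * hidden[0] + w_o[1] * hidden[1] + b_o)
        return hidden, output

    for _ in range(epochs):  # many iterations over the training data
        for x, target in data:
            hidden, output = forward(x)
            # Error signal at the output (squared error with a sigmoid output).
            delta_o = (output - target) * output * (1.0 - output)
            # Backpropagate: each hidden node's share of the error flows back
            # through the weight connecting it to the output.
            delta_h = [delta_o * w_o[j] * hidden[j] * (1.0 - hidden[j]) for j in range(2)]
            # Nudge every weight a small step in the direction that reduces the error.
            for j in range(2):
                w_o[j] -= lr * delta_o * hidden[j]
                b_h[j] -= lr * delta_h[j]
                for i in range(2):
                    w_h[j][i] -= lr * delta_h[j] * x[i]
            b_o -= lr * delta_o

    return lambda x: forward(x)[1]


predict = train(DATA)
for x, target in DATA:
    print(x, "target:", target, "prediction:", round(predict(x), 2))
```

The hidden-layer error signals are computed from the output weights before those weights are updated, so every weight change in one step is based on the same forward pass.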

Generative AI Models

Generative AI models are the models that Generative AI (Gen AI) uses to generate new content. Three common generative models built on neural networks are:

- Transformer Models

- Generative Adversarial Networks (GANs)

- Variational Auto Encoders (VAEs)

Unimodal and Multimodal Models

Transformer Models typically work with text, but they can work with other media. GANs and VAEs typically work with visual data like images and video.

The models are not mutually exclusive. An AI system can use multiple models; for example, it could use a transformer in conjunction with a GAN. You don't have to settle on just one for any particular application. You can mix and match the different techniques and models to get the desired result.

Unimodal Models take input of the same type as their output, for example, creating text from a text prompt.

Multimodal Models can take input from different sources and generate output in various forms. For example, from a text input, you could create an image and also a text caption for that image.

Transformer Models

A Transformer tracks relationships in sequential data. Transformers always work with sequential data and learn what is appropriate to come next in a sequence; for example, they learn which word is appropriate to come next in a sentence. The relationships they track can run in either direction: a word can depend on words that come before it or after it.

Transformer models analyze huge data sets; they always use a massive amount of data. For training, we normally use a data set consisting of articles from the Internet and other sources, which gives the model a huge amount of text to analyze.

The model does this analysis by figuring out what comes next in sequences. It uses a mathematical technique called self-attention to weigh the importance of different parts of the sequence and determine their context. With language, context is very important: two similar sentences can have completely different meanings.
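Self-attention can be sketched as scaled dot-product attention: each token scores its relevance to every token in the sequence, the scores are turned into weights with softmax, and the token's new representation is a weighted mix of all tokens. This minimal sketch assumes made-up 2-dimensional embeddings and, for simplicity, uses the embeddings directly as queries, keys, and values; a real transformer learns separate query, key, and value projections and uses many attention heads.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with queries = keys = values = x.
    x is a list of token vectors; returns (outputs, attention_weights)."""
    d = len(x[0])
    outputs, weights = [], []
    for q in x:
        # How strongly this token relates to every token (including itself).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        attn = softmax(scores)
        weights.append(attn)
        # New representation: attention-weighted mix of all token vectors.
        outputs.append([sum(a * v[i] for a, v in zip(attn, x)) for i in range(d)])
    return outputs, weights


# Hypothetical embeddings for a 3-token sequence.
tokens = ["the", "router", "rebooted"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.9]]
outputs, weights = self_attention(embeddings)
for tok, row in zip(tokens, weights):
    print(tok, [round(w, 2) for w in row])
```

Each row of attention weights sums to 1, and "router" attends more to "rebooted" (a similar vector) than to "the", which is how context from related words flows into each token's representation.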

Transformer models are really good at understanding context in sentences and at generating new sentences as they build new sequences of text. Therefore, they are often used with text, but they can work with any type of sequential data, for example, DNA for genetics research, amino acids for drug development, and video.

Because transformers need a huge amount of training data to build a full understanding, you might think that training them must take a long time.

However, they can learn from data unsupervised (often described as self-supervised learning), so a human operator does not need to label the data beforehand. They can also do parallel processing, handling multiple sequences at the same time. Because of this, they are acceptably fast to train.
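The reason no human labeling is needed is that raw text supplies its own labels: for next-word prediction, the correct answer for each position is simply the word that actually follows. This minimal sketch shows how training examples fall out of unlabeled text; the whitespace word splitting and the `context_size` parameter are simplifying assumptions (real models split text into subword tokens and use much longer contexts).

```python
def next_word_training_pairs(text, context_size=3):
    """Turn raw, unlabeled text into (context, next word) training examples.
    The 'label' for each example is the word that actually follows,
    so nobody has to annotate anything by hand."""
    words = text.split()
    pairs = []
    for i in range(1, len(words)):
        context = words[max(0, i - context_size):i]
        pairs.append((context, words[i]))
    return pairs


sample = "the interface went down after the link flapped"
for context, target in next_word_training_pairs(sample):
    print(context, "->", target)
```

Every position in every document becomes a training example, which is how a huge unlabeled text collection turns into a huge training set.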

The transformer architecture is composed of encoder and decoder neural networks. The encoder is only concerned with the input and it's typically used for classification, for example, classifying images of different animals.

The decoder is used for output and generates data such as a text article or programming code. The encoder and decoder can also be used together for tasks such as translating text from one language to another. Large Language Models (LLMs) are built on transformers.

NLP Natural Language Processing and LLM

Natural Language Processing (NLP) uses a broad range of rule-based methods, as well as machine learning, to enable computers to understand and generate language as it is spoken and written. Language as people actually speak and write it is the "natural" part of natural language.

It focuses on recognizing patterns in language to understand its structure. Its tasks include understanding, generating, and classifying language; translation from one language to another; text-to-speech; and speech-to-text.

NLP applications use comparatively small data sets compared to modern machine learning, along with a method that is relevant to their specific goal. They can use different rule-based methods or machine learning methods to accomplish their tasks.
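A rule-based NLP method needs no training data at all: a developer writes the patterns by hand. This minimal sketch classifies short network-related requests with hand-written regular expressions; the intent names and keyword rules are hypothetical examples, and a real rule-based system would have far more rules (and would still miss phrasings nobody anticipated, which is one reason machine learning took over many NLP tasks).

```python
import re

# Hypothetical hand-written rules, checked in order; the first match wins.
RULES = [
    ("troubleshoot", re.compile(r"\b(down|error|fail(ed|ing)?|drops?)\b", re.IGNORECASE)),
    ("configure", re.compile(r"\b(configure|set up|enable|add)\b", re.IGNORECASE)),
    ("show", re.compile(r"\b(show|display|list)\b", re.IGNORECASE)),
]


def classify(sentence):
    """Return the intent of the first rule that matches, or 'unknown'."""
    for intent, pattern in RULES:
        if pattern.search(sentence):
            return intent
    return "unknown"


for text in ["Interface Gi0/1 is down", "Please configure OSPF on R1", "Show me the routing table"]:
    print(text, "->", classify(text))
```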

NLP has been around for a really long time. You might have heard of Dragon text-to-speech and speech-to-text software. It has been around since the 1980s, well before modern machine learning was available, and at that time it used rule-based methods to do NLP.

LLM Large Language Model

Like NLP, Large Language Models (LLMs) also perform complex language processing tasks, so they do the same things that NLP does. However, while NLP models choose a method relevant to their specific goal, an LLM always uses a transformer-based neural network built with a huge data set.

A model has to work like that to be classified as an LLM. LLMs can handle almost any NLP task and are very good at generating human-like text in response to instructions. Because of the way transformers work, they're very good at building out sequences, which is exactly what creating new text requires.

Their tasks include chatbots, text summarization of longer articles, translation from one language to another language, and writing original content such as essays or programming code.

GPT Generative Pre-trained Transformers

Generative Pre-trained Transformers (GPTs) are transformer-based LLMs. GPT-1, GPT-2, GPT-3, and GPT-4 were developed by OpenAI, but all LLMs with the same characteristics can broadly be called GPTs.

ChatGPT, also from OpenAI, is built on OpenAI's own GPT models. A GPT uses a decoder (with no separate encoder) to generate text from a prompt.
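A decoder generates text autoregressively: it predicts the next token, appends it to the sequence, and repeats until it decides to stop. This minimal sketch shows that loop with greedy decoding (always picking the most likely next word). The `NEXT_WORD_PROBS` table is a hypothetical stand-in for a trained model; a real GPT computes these probabilities with a transformer that looks at the whole preceding sequence, not just the last word, and often samples from them rather than always taking the top choice.

```python
# Hypothetical stand-in for a trained decoder: probabilities of each next word,
# looked up from the previous word only (a real GPT uses the whole context).
NEXT_WORD_PROBS = {
    "the": {"router": 0.5, "switch": 0.4, "<end>": 0.1},
    "router": {"is": 0.8, "<end>": 0.2},
    "switch": {"is": 0.9, "<end>": 0.1},
    "is": {"up": 0.6, "down": 0.4},
    "up": {"<end>": 1.0},
    "down": {"<end>": 1.0},
}


def generate(prompt, max_new_words=10):
    """Greedy autoregressive decoding: repeatedly append the most likely next word."""
    words = prompt.split()
    for _ in range(max_new_words):
        probs = NEXT_WORD_PROBS.get(words[-1], {"<end>": 1.0})
        next_word = max(probs, key=probs.get)
        if next_word == "<end>":
            break
        words.append(next_word)
    return " ".join(words)


print(generate("the"))  # the router is up
```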

Transformers in Network Operations

The following are examples of how you can use a transformer in network operations. You could provide a prompt to generate device configurations, and you could also provide network data and device configurations to generate network diagrams.

Generating diagrams this way uses a multimodal model, because you provide network data and device configurations as text and generate a network diagram from that information. You could also ask it questions, such as what an error message means and how you should troubleshoot it.

If you are going to use transformers, or really any type of AI, in network operations to generate text or configurations or to answer questions, you need to be aware of hallucinations.

Hallucinations occur when the generative model gets the information wrong. If you're using a general-purpose GPT that was not purpose-built for network operations, you need to be careful and double-check the information that it gives you.
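One practical way to double-check generated configurations is to validate them automatically before they go anywhere near a device. This minimal sketch checks only `ip address <address> <mask>` lines in IOS-style text, using Python's standard `ipaddress` module, and flags invalid addresses, invalid masks, and addresses that are the subnet's network or broadcast address. The function name and sample configuration are hypothetical, and passing this check does not mean a configuration is correct; it only catches one class of obvious mistakes.

```python
import ipaddress
import re

# Matches lines such as " ip address 10.0.0.1 255.255.255.0" (nothing after the mask).
IP_LINE = re.compile(r"^\s*ip address (\S+) (\S+)\s*$")


def check_ip_lines(config_text):
    """Return a list of problems found in 'ip address <addr> <mask>' lines."""
    problems = []
    for lineno, line in enumerate(config_text.splitlines(), start=1):
        match = IP_LINE.match(line)
        if not match:
            continue
        addr, mask = match.groups()
        try:
            iface = ipaddress.IPv4Interface(f"{addr}/{mask}")
        except ValueError as exc:  # bad address or non-contiguous mask
            problems.append(f"line {lineno}: {exc}")
            continue
        net = iface.network
        # /31 and /32 have no separate network/broadcast addresses to avoid.
        if net.prefixlen < 31 and iface.ip in (net.network_address, net.broadcast_address):
            problems.append(f"line {lineno}: {iface.ip} is not a usable host address in {net}")
    return problems


# Hypothetical AI-generated snippet with three mistakes.
SAMPLE_CONFIG = "\n".join([
    "interface GigabitEthernet0/0",
    " ip address 10.0.0.1 255.255.255.0",
    "interface GigabitEthernet0/1",
    " ip address 10.0.1.256 255.255.255.0",
    "interface GigabitEthernet0/2",
    " ip address 10.0.2.0 255.255.255.0",
    "interface GigabitEthernet0/3",
    " ip address 10.0.3.1 255.0.255.0",
])
for problem in check_ip_lines(SAMPLE_CONFIG):
    print(problem)
```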

GAN Generative Adversarial Networks

With Generative Adversarial Networks (GANs), two deep learning models compete against each other. Those are the generator and the discriminator. The generator learns to create new data such as text, images, audio, or video that resembles the training data set.

Whatever type of output you want to create, the generator learns how to create it from the original training data set. For example, it can learn how to create images of dogs. That's the generator: it's what creates the content.

Then we've got the discriminator. The discriminator learns to distinguish between the generated data and the real data.

For example, you could give a GAN's generator the task of creating images of dogs. The discriminator checks whether each image looks like fake data or whether it cannot be told apart from the real data in the training data set.

The discriminator will typically identify early efforts as fake easily. In effect, it says, "This does not look much like a dog at all. That's definitely a fake." That feedback tells the generator to try again, and it keeps trying until it starts making better images.

As training progresses, the generator will produce data that can fool the discriminator and humans. The discriminator itself also improves with training and experience, and GANs are often used to create visual data.
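The adversarial back-and-forth can be shown with a deliberately tiny one-dimensional toy. This is a minimal sketch under heavy simplifying assumptions: the "real data" is three hypothetical numbers around 4.0, the generator is a single learnable value `g` that it outputs, and the discriminator is `D(x) = sigmoid(w * x)` with one weight `w`. Real GANs use neural networks for both parts and feed the generator random noise so it can produce varied samples; this only illustrates the alternating training steps.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical "real" training data.
REAL_SAMPLES = [3.5, 4.0, 4.5]


def train_toy_gan(steps=4000, lr=0.05):
    g = 0.0  # generator output starts far from the real data
    w = 0.0  # discriminator weight: D(x) = sigmoid(w * x) = P(x is real)
    for _ in range(steps):
        # Discriminator step: gradient ascent on log D(real) + log(1 - D(fake)),
        # i.e. get better at telling real samples from the generator's output.
        grad_w = sum((1.0 - sigmoid(w * x)) * x for x in REAL_SAMPLES) / len(REAL_SAMPLES)
        grad_w -= sigmoid(w * g) * g
        w += lr * grad_w
        # Generator step: gradient ascent on log D(g), i.e. try to look real.
        g += lr * (1.0 - sigmoid(w * g)) * w
    return g, w


g, w = train_toy_gan()
print(round(g, 2), round(w, 2))  # g ends close to 4.0, the average of the real samples
```

Early on, the discriminator easily separates the fake value 0.0 from the real data, and its gradient pushes the generator toward the real samples; as the fake gets closer, the discriminator's weight shrinks because it can no longer tell them apart.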

GAN in Network Operations

GAN models can generate network traffic simulations, which can be used to train AI applications to detect network anomalies and security threats. In this use case, the generator creates the network traffic simulations.

With training, the generator learns to create more realistic simulations that look more like normal traffic patterns, and the discriminator learns to detect whether traffic patterns are fake, that is, whether the traffic is normal or contains anomalies.

This is one way the model can be used to train your AI system to recognize when the network traffic patterns it sees are abnormal or different from usual.

GANs are also good at creating network diagrams, and we can do this in conjunction with transformer models. You could feed them text such as the output of show commands like "show cdp".

You could also load your configurations and other information, and the GAN can then work multimodally, creating an image of a network diagram from that text.

VAE Variational Auto Encoders

Variational Auto Encoders (VAEs) are quite similar to GANs. They are also typically used for images, and they also use two neural networks to generate data: an encoder and a decoder work in tandem to generate output that is similar to the original input.

The encoder compresses the input data, optimizing it to retain only the most important information. In other words, the encoder creates a compressed copy of the input.

The decoder then reconstructs the input from that compressed representation: it basically decompresses it, creating something similar to the original input. Because the compression at the start kept only the important information, the content the decoder generates is optimized for that information, and less desirable characteristics are reduced.

VAEs are good at cleaning noise from images, keeping only the most relevant information. Because of the way they work, they are also good at finding anomalies: a model trained on normal data reconstructs normal inputs well, so inputs it reconstructs poorly stand out as different.
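The compress, reconstruct, and compare idea behind anomaly detection can be sketched without any training. To be clear, this is not a VAE: a real VAE learns its encoder and decoder networks from data and encodes each input as a probability distribution (a mean and variance) that is sampled during training. Here the "encoder" is a hand-fixed projection of two hypothetical normalized traffic features (inbound and outbound rate) onto one number, and the threshold is an assumed value; the sketch only shows why a poor reconstruction flags an anomaly.

```python
import math

# Hypothetical "learned" compression direction: in normal traffic the two
# normalized features (inbound and outbound rate) rise and fall together.
DIRECTION = (1 / math.sqrt(2), 1 / math.sqrt(2))
THRESHOLD = 0.05  # hypothetical cut-off, normally tuned on known-normal traffic


def encode(x):
    """Compress a 2-feature sample to a single number (the bottleneck)."""
    return x[0] * DIRECTION[0] + x[1] * DIRECTION[1]


def decode(z):
    """Reconstruct a 2-feature sample from the compressed value."""
    return (z * DIRECTION[0], z * DIRECTION[1])


def reconstruction_error(x):
    x_hat = decode(encode(x))
    return (x[0] - x_hat[0]) ** 2 + (x[1] - x_hat[1]) ** 2


def is_anomaly(x):
    return reconstruction_error(x) > THRESHOLD


print(is_anomaly((0.80, 0.82)))  # False: fits the normal pattern
print(is_anomaly((0.90, 0.10)))  # True: heavily one-sided traffic
```

Information that does not fit the pattern the bottleneck keeps is lost in compression, which is the same property that lets a VAE strip noise from images.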

Like GAN, VAE can also be used to detect network anomalies and security threats. It can also be used to generate network traffic simulations. GANs are better than VAEs for network diagrams because they produce more detailed output.

Additional Resources

Generative AI (genAI): https://blogs.cisco.com/tag/generative-ai

What is Generative AI?: https://www.nvidia.com/en-us/glossary/generative-ai/

What is generative AI?: https://www.ibm.com/topics/generative-ai

Libby Teofilo

Text by Libby Teofilo, Technical Writer at www.flackbox.com

Libby’s passion for technology drives her to constantly learn and share her insights. When she’s not immersed in the tech world, she’s either lost in a good book with a cup of coffee or out exploring on her next adventure. Always curious, always inspired.