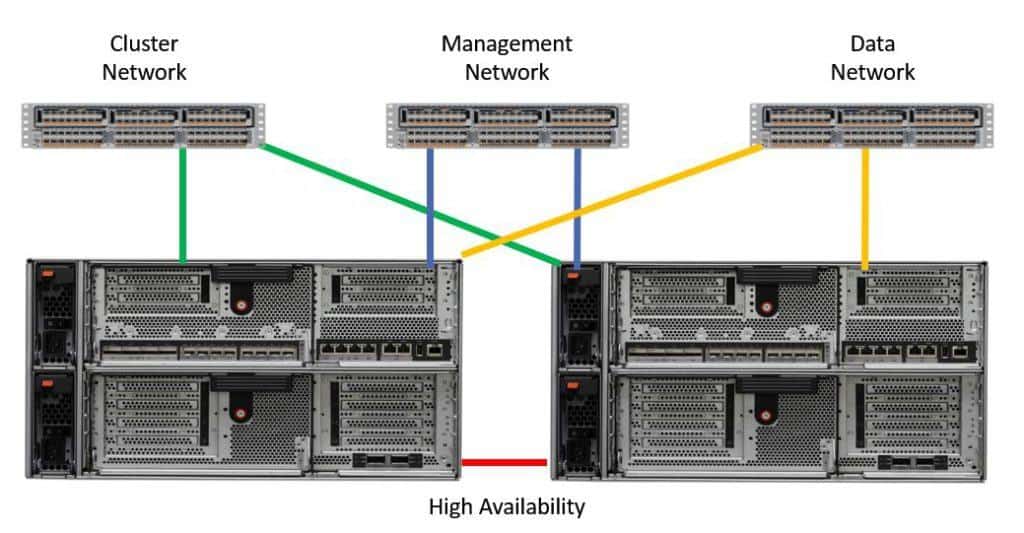

In this NetApp training tutorial, I will describe the different networks that NetApp ONTAP storage systems are connected to: the Data Network, Management Network, Cluster Interconnect, and High Availability connection.



NetApp Cluster, Management, Data and HA Networks


The High Availability Network

The first network we’ll look at is the High Availability connection between our controllers.

The FAS2500 Series platforms support either one or two controllers in the chassis. If we have just one controller in the chassis it's a single point of failure, so we’ll usually deploy two for redundancy, configured as a High Availability (HA) pair.

When we have High Availability for our controllers on the FAS2500 Series, the FAS8020, the FAS8040, and the single chassis FAS8060, there are two controllers in the same physical chassis. In that case, the HA connection between the controllers is internal to the chassis, so we don't need to do any cabling for it.

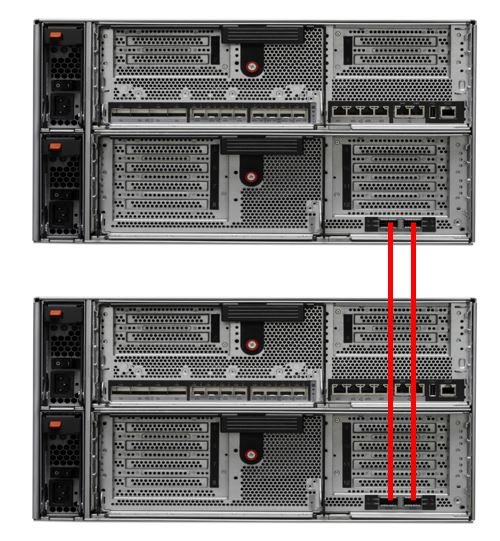

On the dual chassis FAS8060 and the FAS8080 EX, the two controllers in an HA pair are in physically separate chassis, so HA cables must be physically connected between them.

Let's have a look at those two different types. The picture below shows a FAS8040 or single chassis FAS8060, which has two controllers in the same chassis. In this case the HA connection is internal, so we don't need to physically connect anything.

Single Chassis High Availability

The next picture shows a dual chassis FAS8060 or the FAS8080 EX, which has two controllers in two different chassis. An IO expansion module is fitted in the bottom half of both chassis. In the bottom right of the expansion modules there are two InfiniBand ports which are used for the High Availability connection, and we connect those together between the two chassis in the HA pair.

Dual Chassis High Availability
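To keep the two cases straight, here is a minimal sketch of a lookup that records which platforms need external HA cabling. It only covers the platforms named in this tutorial, and the dictionary and function names are my own illustration, not anything from ONTAP.

```python
# Hypothetical lookup built only from the platforms named in this tutorial.
# True means both controllers share one chassis, so the HA link is internal.
HA_INTERNAL = {
    "FAS2500": True,
    "FAS8020": True,
    "FAS8040": True,
    "FAS8060 single chassis": True,
    "FAS8060 dual chassis": False,
    "FAS8080 EX": False,
}


def needs_ha_cabling(platform: str) -> bool:
    """Return True when HA cables must be run between two separate chassis."""
    if platform not in HA_INTERNAL:
        raise KeyError(f"platform not covered by this tutorial: {platform}")
    return not HA_INTERNAL[platform]
```

For example, `needs_ha_cabling("FAS8080 EX")` is true, while `needs_ha_cabling("FAS8040")` is false.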

See my next post for a full description of how High Availability works.

The Cluster Network

The next network we'll look at is the Cluster Network. This is used for traffic that is going between the nodes themselves, such as system information that is being replicated between the nodes. For example, when you make a configuration change it is written to one node and then replicated to the rest. Also, if incoming client data traffic hits a network port on a different controller than the one which owns the disks, that traffic will also go over the cluster network.

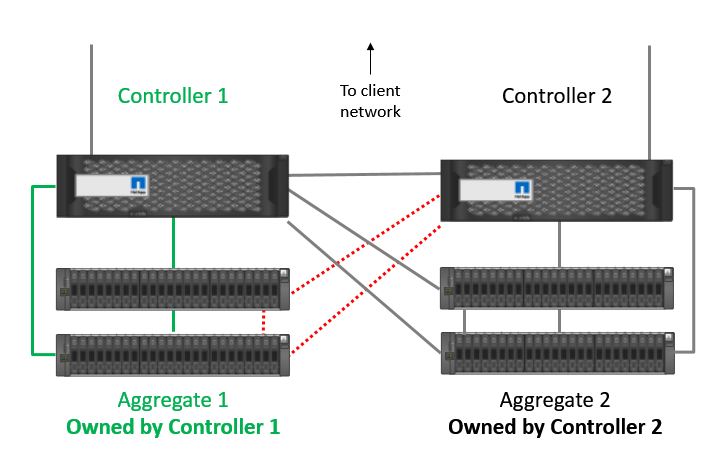

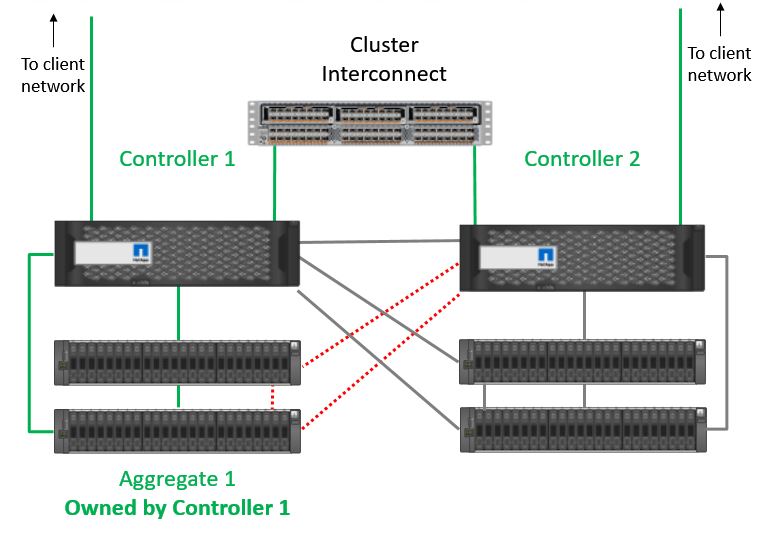

Your disks are always owned by one and only one controller. You can see in the example below we've got two nodes, Controller 1 and Controller 2.

Controller 1 owns Aggregate 1

In our example, Aggregate 1 is owned by Controller 1 and Aggregate 2 is owned by Controller 2. If you configure a Data Network interface on both controllers and give clients connectivity to both, then they can access either aggregate through either controller. Traffic will go over the cluster interconnect if a client hits an interface on a controller that does not own the aggregate.

Clients can access Aggregate 1 through either Controller

The aggregate itself is always owned by only one controller. Both controllers are connected to their own and to their High Availability peer’s disks through SAS cables. The SAS cables are active-standby. We don’t load balance incoming connections over the SAS disk shelf cables, only over the cluster interconnect.
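The rule for when client traffic crosses the cluster interconnect can be sketched in a few lines. This is only a conceptual model with made-up names (`data_path`, `ingress`, `owner`), not how ONTAP implements it.

```python
def data_path(ingress: str, owner: str) -> list[str]:
    """List the hops a client request takes to reach an aggregate.

    ingress: the controller whose data interface the client connected to.
    owner:   the controller that owns the aggregate.
    """
    hops = ["client", ingress]
    if ingress != owner:
        # The receiving controller does not own the aggregate, so the
        # request crosses the cluster interconnect to the owning controller.
        hops += ["cluster interconnect", owner]
    hops.append("aggregate")
    return hops
```

A client reaching Aggregate 1 through Controller 1 gets the direct path, while the same request arriving on Controller 2 picks up the extra cluster interconnect hop.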

Cluster Network Options

The options for our cluster are:

- Single node cluster

- Switchless 2 node cluster

- Switched cluster (with an even number of nodes, 2 to 8 nodes if we’re running SAN protocols, 2 to 24 if we’re running NAS only)

With a single node cluster you just have one controller without High Availability and it is a single point of failure.

If you have two nodes in your cluster you have the choice of a switched or switchless cluster.

If you've got more than two nodes then you have to connect them all together with a pair of dedicated cluster interconnect switches.

With two nodes, you can either connect them directly to each other or use the external cluster interconnect switches. The advantage of a switchless two node cluster is that you don’t have the external switches, so you save a little on the complexity, hardware cost and rack space. The advantage of having a switched two node cluster is that it's easier to add additional nodes later.

With the exception of the single node cluster, you cannot have an odd number of nodes in the cluster. You can have a single node cluster, a two-node switched or switchless cluster, or a switched cluster with four, six, eight or any larger even number of nodes up to the limit. You can’t have a three or a five-node cluster.
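The sizing rules above can be summarised as a small check. This is a minimal sketch using only the limits quoted in this tutorial (8 nodes with SAN protocols, 24 with NAS only); the function name and return values are my own.

```python
def cluster_options(nodes: int, san: bool) -> list[str]:
    """Return the cluster topologies allowed for a given node count.

    san: True if the cluster serves SAN protocols (limit of 8 nodes),
         False if it is NAS only (limit of 24 nodes).
    An empty list means the node count is not a valid cluster size.
    """
    max_nodes = 8 if san else 24
    if nodes == 1:
        return ["single node"]
    if nodes == 2:
        return ["switchless", "switched"]
    if nodes > 2 and nodes % 2 == 0 and nodes <= max_nodes:
        return ["switched"]
    # Odd counts above one, counts over the limit, and counts below one.
    return []
```

So `cluster_options(2, san=False)` offers both choices, `cluster_options(6, san=True)` only allows a switched cluster, and `cluster_options(3, san=False)` returns an empty list.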

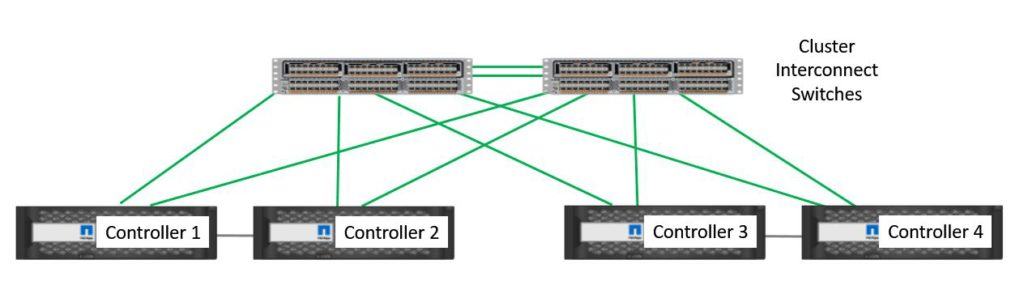

When you do have a switched cluster with more than two nodes, every pair of nodes has to be the same model of controller, and each pair is configured as a High Availability pair. All nodes will be connected to each other through the cluster interconnect. Each pair of nodes (for example nodes 1 and 2, nodes 3 and 4, and so on) will also be connected to each other with a High Availability (HA) connection.

You can have a mix of controllers in the cluster but the High Availability pairs have to match. For example, you could have a pair of FAS8020s as nodes 1 and 2, and a pair of FAS8060s as nodes 3 and 4. You couldn't have nodes 1, 2 and 3 being FAS8020s and node 4 being a FAS8060.
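Here is a rough sketch of that matching rule as a check over a list of controller models. The function name is hypothetical, and it assumes nodes are paired in order (1 and 2, 3 and 4, and so on) as in the examples above.

```python
def mismatched_pairs(models: list[str]) -> list[tuple[int, int]]:
    """Return the HA pairs (as 1-based node numbers) whose models differ.

    models[i] is the controller model of node i + 1. Nodes are assumed
    to be paired in order: (1, 2), (3, 4), and so on.
    """
    if len(models) % 2 != 0:
        raise ValueError("a multi-node cluster needs an even number of nodes")
    bad = []
    for i in range(0, len(models), 2):
        if models[i] != models[i + 1]:
            bad.append((i + 1, i + 2))
    return bad
```

With `["FAS8020", "FAS8020", "FAS8060", "FAS8060"]` the result is empty, so the cluster is valid. With `["FAS8020", "FAS8020", "FAS8020", "FAS8060"]` it reports nodes 3 and 4 as a mismatched pair.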

The cluster network uses 10Gb Ethernet ports on our controller. We have a four node cluster in the example below.

The Cluster Network

Controllers 1 and 2 are connected in an HA pair, and controllers 3 and 4 are also connected in an HA pair. All four controllers are in the same cluster. We have a pair of Cluster Interconnect Switches, and we connect each controller to both switches with 10Gb Ethernet connections. We also have inter-switch links between the two switches.

The cluster interconnect is critical to the running of the system because it carries system information between the nodes. Because it's so important to the system, you have to run it on private dedicated switches, you have to use the supported configuration, and you have to use a supported model of switch.

Cluster Network Switches

The first supported switch is the NetApp CN1610. It can be used for clusters with up to eight nodes. It has sixteen 10Gb Ethernet ports, and four of those ports are used for the inter-switch links.

The NetApp CN1610 Switch

If you have more than eight nodes in your cluster then the NetApp Switch doesn't have enough ports. In that case you need to use the Cisco Nexus 5596, which has 48 10Gb Ethernet ports. It's upgradeable to 96 ports, but 48 ports is plenty for the Cluster Interconnect Switch. Eight of the ports are used for the inter-switch links.

The Cisco Nexus 5596 Switch
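The switch choice and port budget can be worked through in code. This sketch uses only the port counts quoted above. The 24 node ceiling for the Nexus entry is taken from the NAS cluster limit mentioned earlier, and the class and function names are purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterSwitch:
    name: str
    total_ports: int  # 10Gb Ethernet ports on the switch
    isl_ports: int    # ports reserved for the inter-switch links
    max_nodes: int    # largest cluster this switch is used for

    @property
    def node_ports(self) -> int:
        """Ports left over for controller connections."""
        return self.total_ports - self.isl_ports


CN1610 = ClusterSwitch("NetApp CN1610", total_ports=16, isl_ports=4, max_nodes=8)
# Assumption: 24 nodes is the NAS-only cluster limit quoted in this tutorial.
NEXUS_5596 = ClusterSwitch("Cisco Nexus 5596", total_ports=48, isl_ports=8, max_nodes=24)


def pick_cluster_switch(nodes: int) -> ClusterSwitch:
    """Pick the cluster interconnect switch model for a switched cluster."""
    if nodes < 2 or nodes > NEXUS_5596.max_nodes:
        raise ValueError(f"unsupported switched cluster size: {nodes}")
    return CN1610 if nodes <= CN1610.max_nodes else NEXUS_5596
```

The CN1610 is left with 12 ports for controllers after the inter-switch links, and the Nexus 5596 with 40, which is why the larger switch is needed once the cluster grows past eight nodes.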

In early versions of Clustered ONTAP you always had to install the dedicated cluster interconnect switches, even if you only had two nodes in your cluster.

Two Node Cluster

Customers asked NetApp the question, "I've only got two nodes. Why can't I connect them directly to each other? Why do I have to buy separate switches which cost money, take up rack space, power, and cooling, and are another component that can go wrong? I’d prefer just to connect the controllers directly to each other."

NetApp listened to their customers and there is now support for 'switchless two node clusters'.

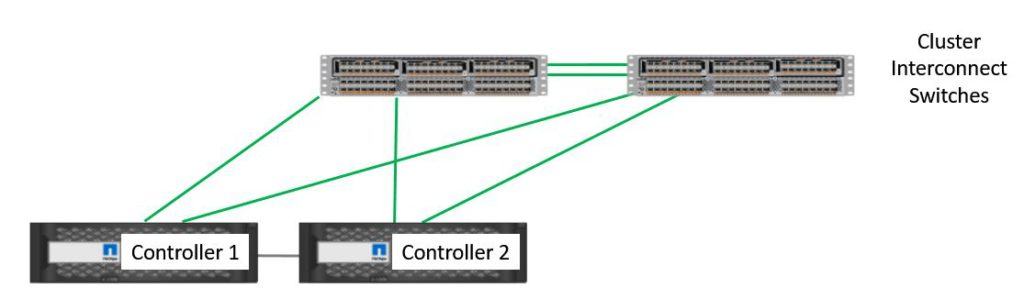

The diagram below shows a FAS8040 or a single chassis FAS8060 configured as a switchless two node cluster. Rather than using separate external switches, we connect two 10Gb Ethernet ports on both controllers directly to each other.

Switchless 2 Node Cluster

Notice that this is different from High Availability (HA). For HA, when we're using a single chassis, we don't need to cable anything because the connection is internal. For the Cluster Interconnect, even when the two controllers are in the same chassis, we have to physically cable them together. This uses two of our 10Gb Ethernet ports.

If we have a dual chassis FAS8060 or the FAS8080 EX, we're going to have a similar configuration. Again, we connect two of the 10Gb Ethernet ports on both controllers to each other.

Switchless 2 Node Cluster - Dual Chassis

The Management Network

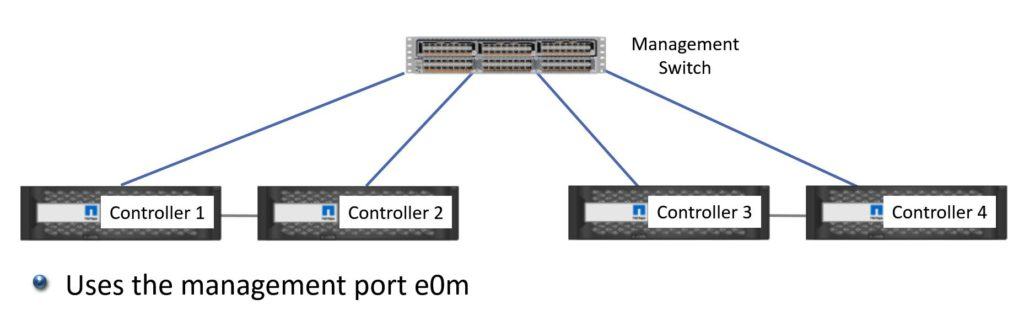

The next network that our system is connected to is the Management Network. Its use is pretty self-explanatory: it’s used for our incoming management connections, such as when we're using the command line or the System Manager GUI.

The management network uses Ethernet ports on our controller. The e0M port is a 1Gb Ethernet port which is dedicated to management traffic.

The Management Network - Single Switch

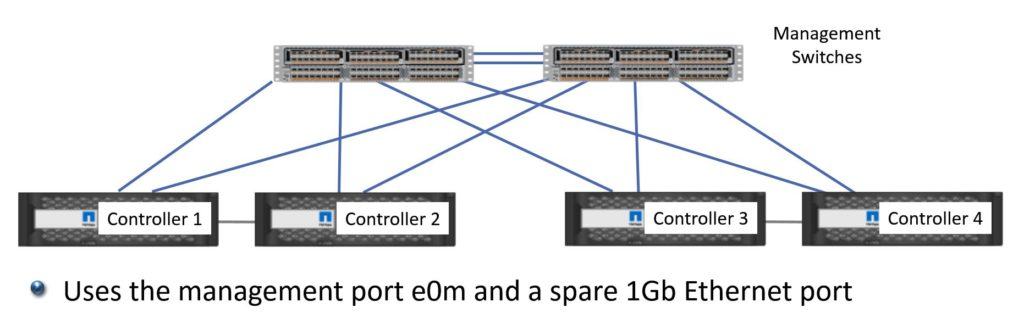

NetApp recommends using dual management connections for redundancy, where you configure two ports in your controller for management, and connect them to two separate switches.

The Management Network - Dual Switches (Recommended)

This is not mandatory. If you only had one Management Network path and you lost it, your clients would still be able to access their data. You just wouldn't be able to make any changes until you'd fixed the problem.

It's not really critical to have dual redundant management connections, but it is recommended by NetApp. If you do configure redundant management connections, it will take up another 1Gb Ethernet port in addition to e0M. You won't be able to use that port for client access if you use it for management.

Management Network Switches

If you have up to eight nodes in the cluster then you can use the NetApp CN1601 as a management switch. It has sixteen 1Gb Ethernet ports.

The NetApp CN1601 Switch

If you have more than eight nodes in your cluster you can use the Cisco Catalyst 2960. The Catalyst 2960 is one of Cisco's workhorse switches and it comes with various options on how many ports it has. For the NetApp Management Network, use a 24 port switch.

The Cisco Catalyst 2960 Switch

The Data Network

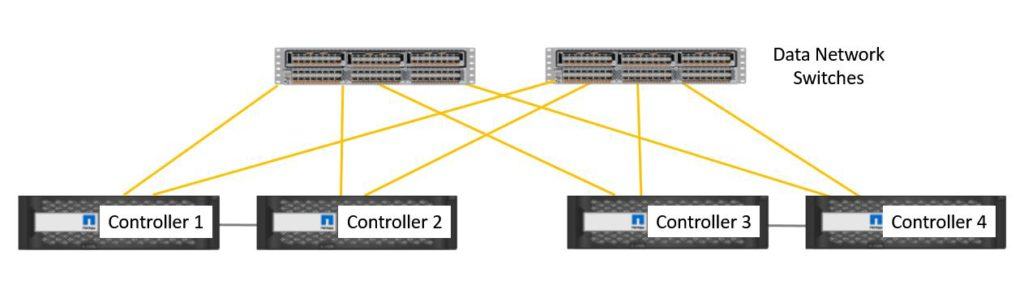

The next network is the Data Network for our client data connections. This supports our NAS protocols of NFS or CIFS, and our SAN protocols of iSCSI, Fibre Channel, or Fibre Channel over Ethernet.

The data network uses Ethernet or Fibre Channel ports on our controller, depending on the client access protocol.

Unlike the Management Network, if you have a single Data Network path and it goes down then your clients will not be able to access their data. The Data Network is almost certainly going to be mission critical for your enterprise, so you should always configure redundant paths.

The Data Network - Dual Switches (Highly Recommended)

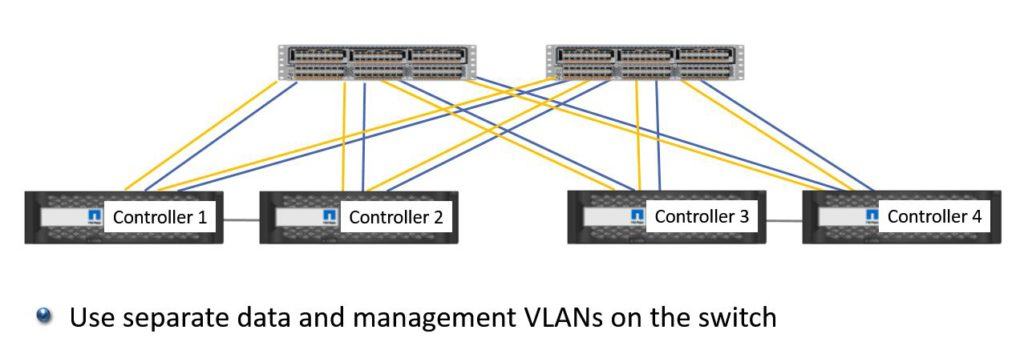

Typically the Data and Management networks will have separate physical switches, but running them both over the same pair of shared physical switches is also a supported configuration. In this case, you'll still use separate physical ports on the controller, but you can have both your data and your management ports connected to the same switch. Use VLANs on the switch to split the networks from each other.

Shared Data and Management Network Switches

You can't do this with the Cluster Interconnect Network. You can share the Data and the Management Network on shared switches, but the Cluster Interconnect has to be on a dedicated private network.
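As a quick way to sanity check a cabling plan against this rule, here is a minimal sketch that flags any switch carrying cluster traffic alongside another network. The switch names and the `"cluster"`, `"data"` and `"management"` labels are hypothetical.

```python
def sharing_violations(switch_networks: dict[str, set[str]]) -> list[str]:
    """Return the switches that mix cluster traffic with another network.

    switch_networks maps a switch name to the set of networks it carries.
    Data and management may share a switch; cluster must be on its own.
    """
    return sorted(
        name
        for name, networks in switch_networks.items()
        if "cluster" in networks and len(networks) > 1
    )


plan = {
    "cluster-sw-1": {"cluster"},
    "cluster-sw-2": {"cluster"},
    "shared-sw-1": {"data", "management"},
    "shared-sw-2": {"data", "management"},
}
violations = sharing_violations(plan)
```

The plan above passes with no violations. Adding `"data"` to one of the cluster switches would cause that switch to be reported.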
